[The article below is reprinted from the October 1997 edition of The International TNDM Newsletter.]

Consistent Scoring of Weapons and Aggregation of Forces:

The Cornerstone of Dupuy’s Quantitative Analysis of Historical Land Battles

by

James G. Taylor, PhD,

Dept. of Operations Research, Naval Postgraduate School

Introduction

Col. Trevor N. Dupuy was an American original, especially as regards the quantitative study of warfare. As with many prophets, he was not entirely appreciated in his own land, particularly its Military Operations Research (OR) community. However, after becoming rather familiar with the details of his mathematical modeling of ground combat based on historical data, I became aware of the basic scientific soundness of his approach. Unfortunately, his documentation of methodology was not always accepted by others, many of whom appeared to confuse lack of mathematical sophistication in his documentation with lack of scientific validity of his basic methodology.

The purpose of this brief paper is to review the salient points of Dupuy’s methodology from a system’s perspective, i.e., to view his methodology as a system, functioning as an organic whole to capture the essence of past combat experience (with an eye towards extrapolation into the future). The advantage of this perspective is that it immediately leads one to the conclusion that if one wants to use some functional relationship derived from Dupuy’s work, then one should also use his methodologies for scoring weapons, aggregating forces, and adjusting for operational circumstances, since this consistency is the only guarantee of being able to reproduce historical results and to project them into the future.

Implications (of this system’s perspective on Dupuy’s work) for current DOD models will be discussed. In particular, the Military OR community has developed quantitative methods for imputing values to weapon systems based on their attrition capability against opposing forces and force interactions.[1] One such approach is the so-called antipotential-potential method[2] used in TACWAR[3] to score weapons. However, one should not expect such scores to provide valid casualty estimates when combined with historically derived functional relationships such as the so-called ATLAS casualty-rate curves[4] used in TACWAR, because a different “yard-stick” (i.e. measuring system for estimating the relative combat potential of opposing forces) was used to develop such a curve.

Overview of Dupuy’s Approach

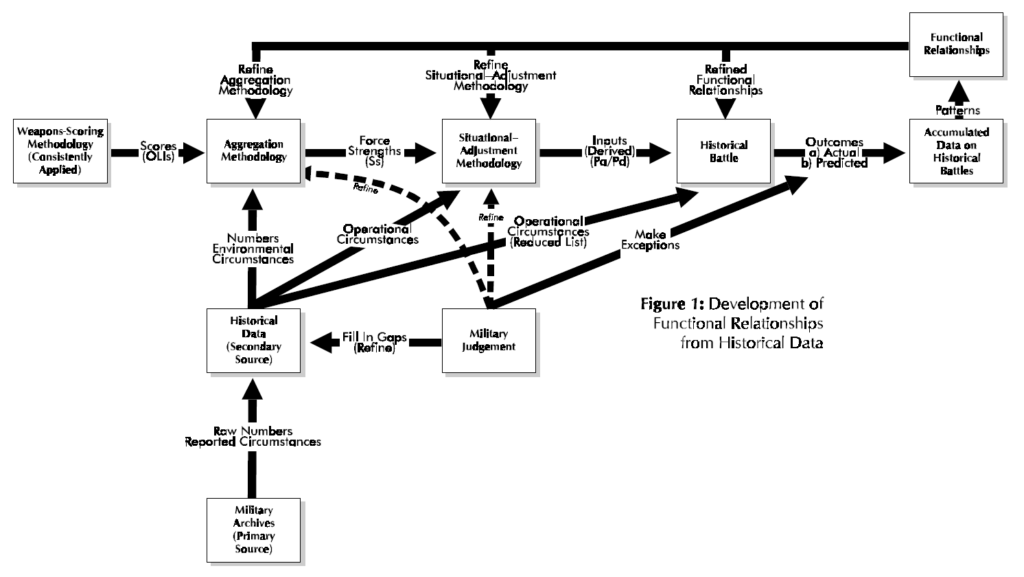

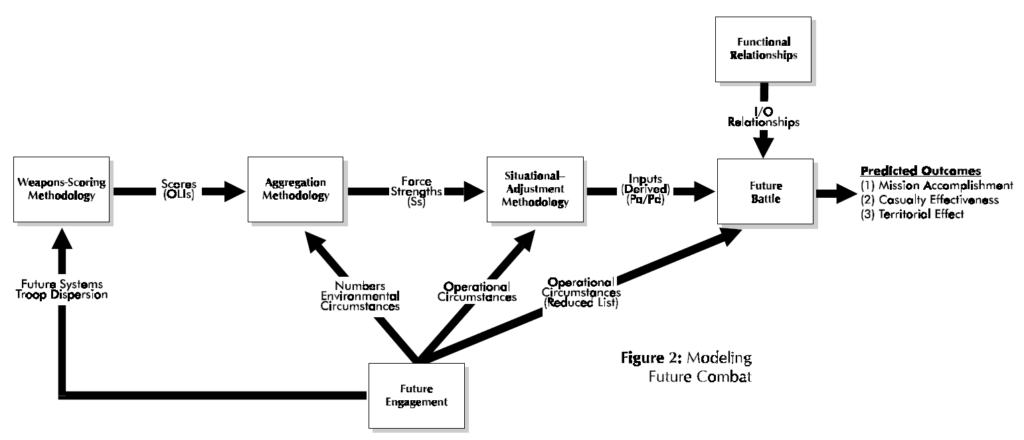

This section briefly outlines the salient features of Dupuy’s approach to the quantitative analysis and modeling of ground combat as embodied in his Tactical Numerical Deterministic Model (TNDM) and its predecessor the Quantified Judgment Model (QJM). The interested reader can find details in Dupuy [1979] (see also Dupuy [1985][5], [1987], [1990]). Here we will view Dupuy’s methodology from a system approach, which seeks to discern its various components and their interactions and to view these components as an organic whole. Essentially, Dupuy’s approach involves the development of functional relationships from historical combat data (see Fig. 1) and then the use of these functional relationships to model future combat (see Fig. 2).

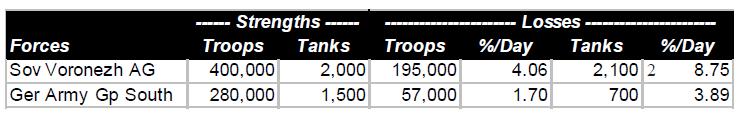

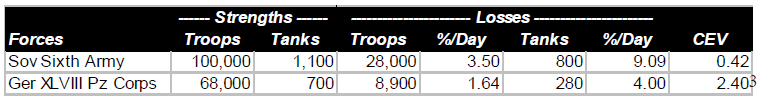

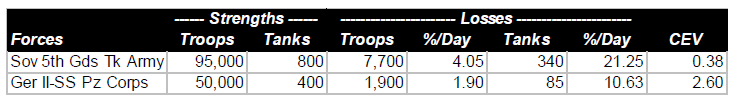

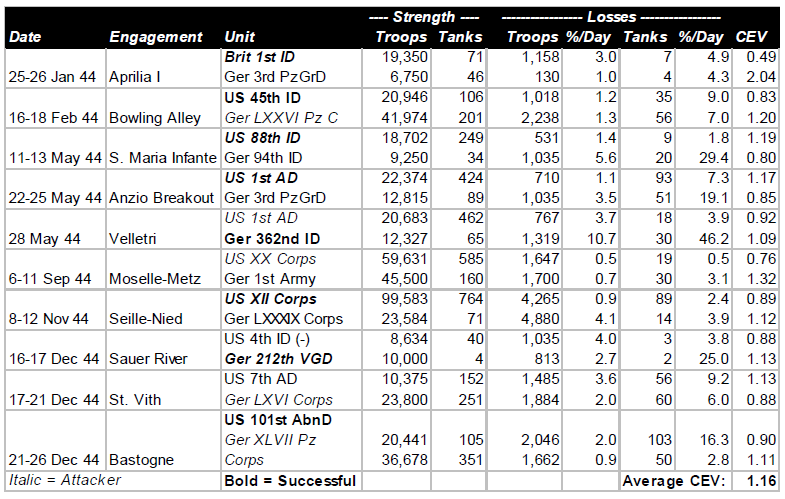

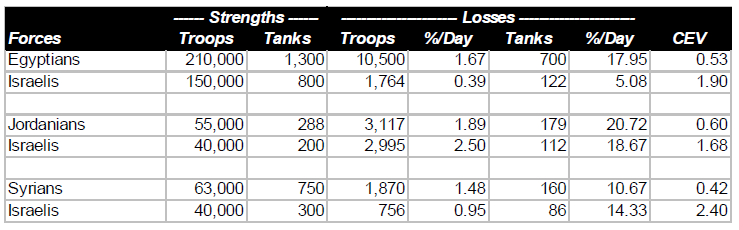

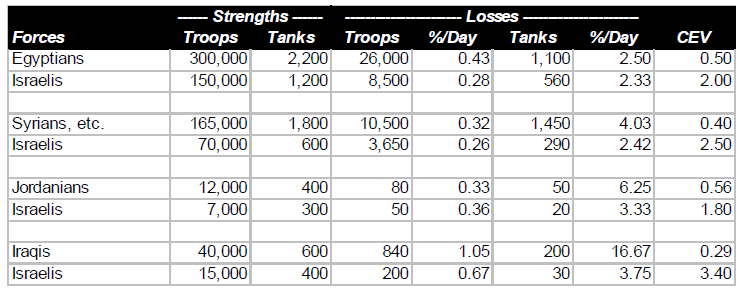

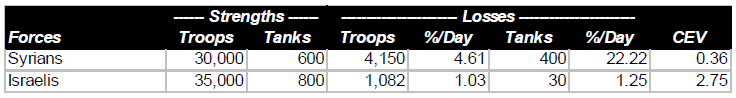

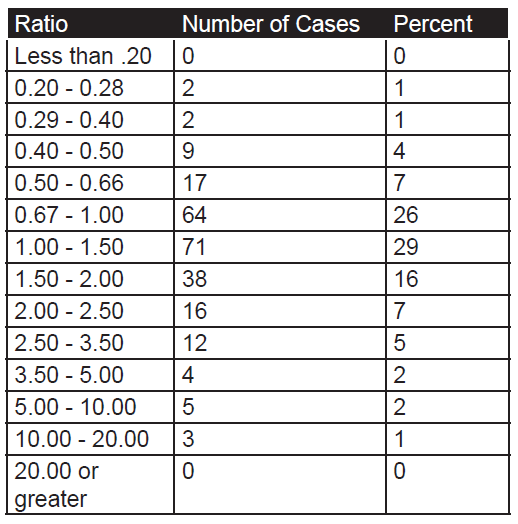

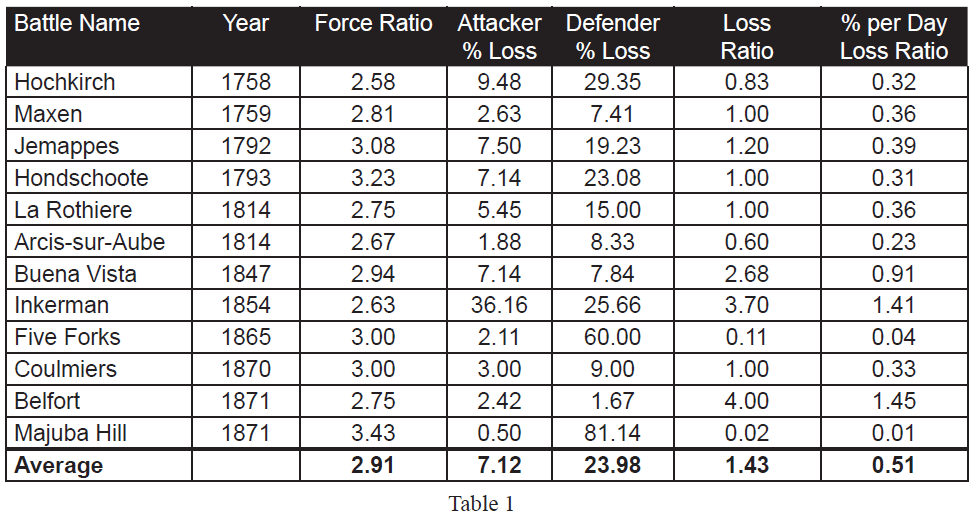

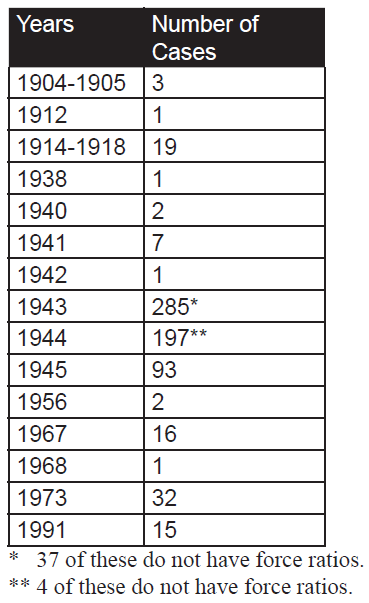

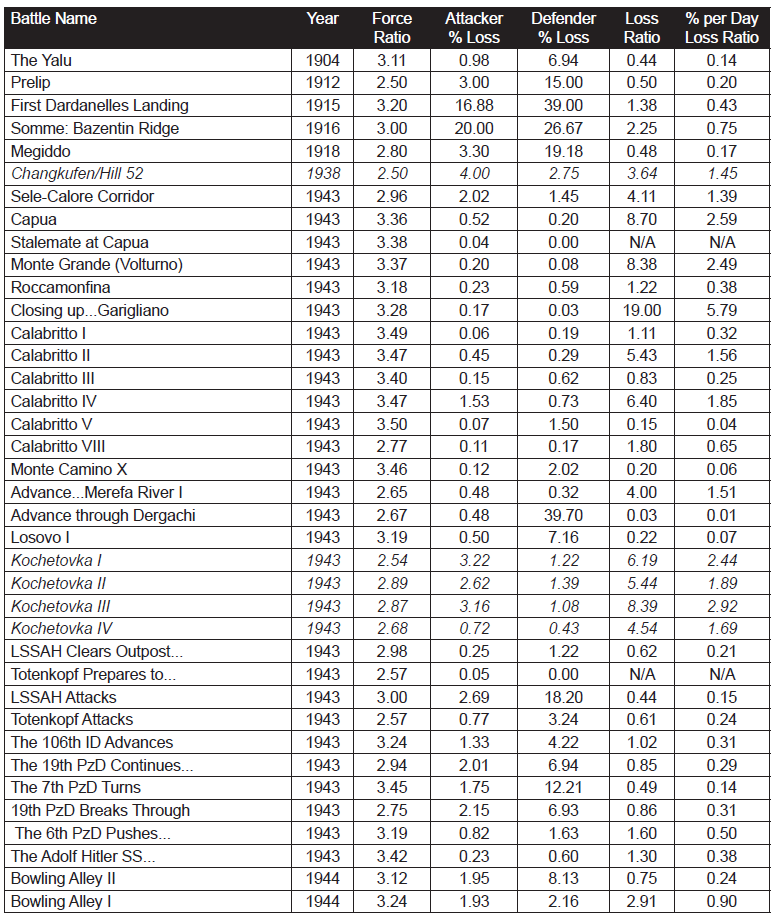

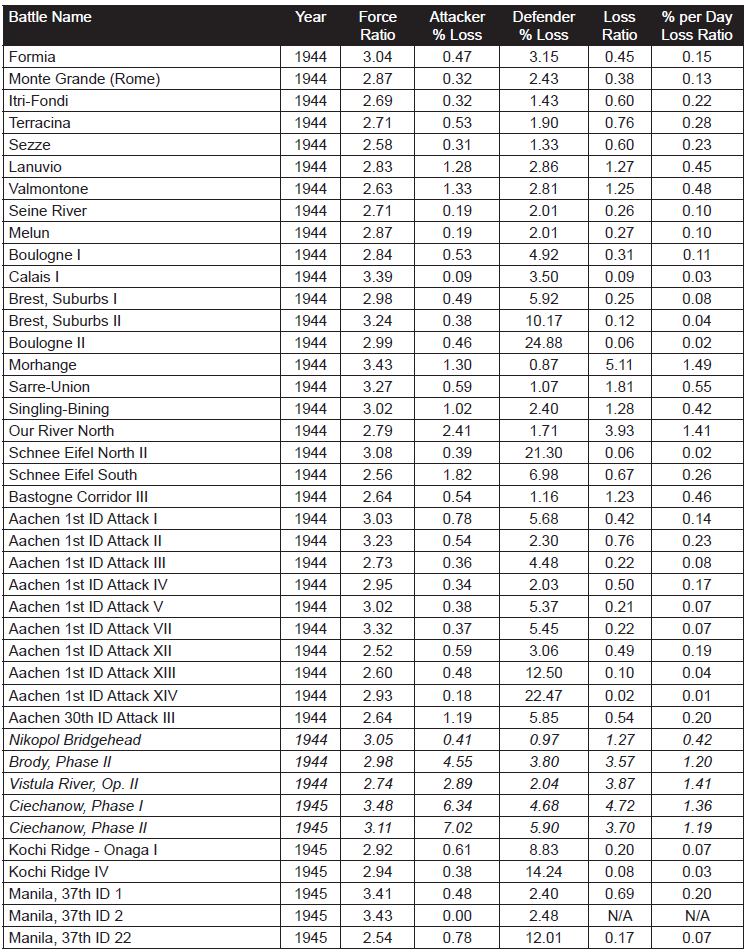

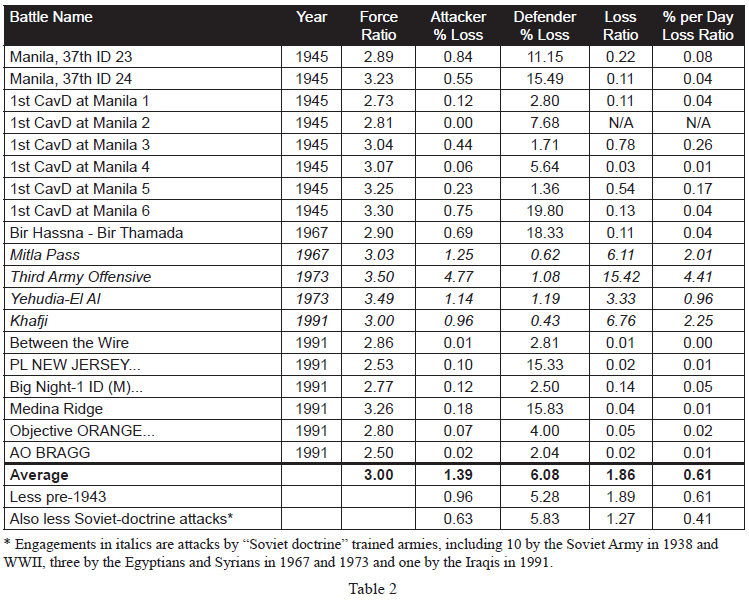

At the heart of Dupuy’s method is the investigation of historical battles and the comparison of inputs (as quantified by relative combat power, denoted as Pa/Pd for that of the attacker relative to that of the defender in Fig. 1) (e.g. see Dupuy [1979, pp. 59-64]) to outputs (as quantified by extent of mission accomplishment, casualty effectiveness, and territorial effectiveness; see Fig. 2) (e.g. see Dupuy [1979, pp. 47-50]). The salient point is that within this scheme, the main input[6] (i.e. relative combat power) to a historical battle is a derived quantity. It is computed from formulas that involve three essential aspects: (1) the scoring of weapons (e.g. see Dupuy [1979, Chapter 2 and also Appendix A]), (2) aggregation methodology for a force (e.g. see Dupuy [1979, pp. 43-46 and 202-203]), and (3) situational-adjustment methodology for determining the relative combat power of opposing forces (e.g. see Dupuy [1979, pp. 46-47 and 203-204]). In the force-aggregation step the effects on weapons of Dupuy’s environmental variables and one operational variable (air superiority) are considered[7], while in the situation-adjustment step the effects on forces of his behavioral variables[8] (aggregated into a single factor called the relative combat effectiveness value (CEV)) and also the other operational variables are considered (Dupuy [1987, pp. 86-89]).

Moreover, any functional relationships developed by Dupuy depend (unless shown otherwise) on his computational system for derived quantities, namely OLIs, force strengths, and relative combat power. Thus, Dupuy’s results depend in an essential manner on his overall computational system described immediately above. Consequently, any such functional relationship (e.g. casualty-rate curve) directly or indirectly derivative from Dupuy’s work should still use his computational methodology for determination of independent-variable values.

Fig. 1 also reveals another important aspect of Dupuy’s work, the development of reliable data on historical battles. Military judgment plays an essential role in this development of such historical data for a variety of reasons. Dupuy was essentially the only source of new secondary historical data developed from primary sources (see McQuie [1970] for further details). These primary sources are well known to be both incomplete and inconsistent, so that military judgment must be used to fill in the many gaps and reconcile observed inconsistencies. Moreover, military judgment also generates the working hypotheses for model development (e.g. identification of significant variables).

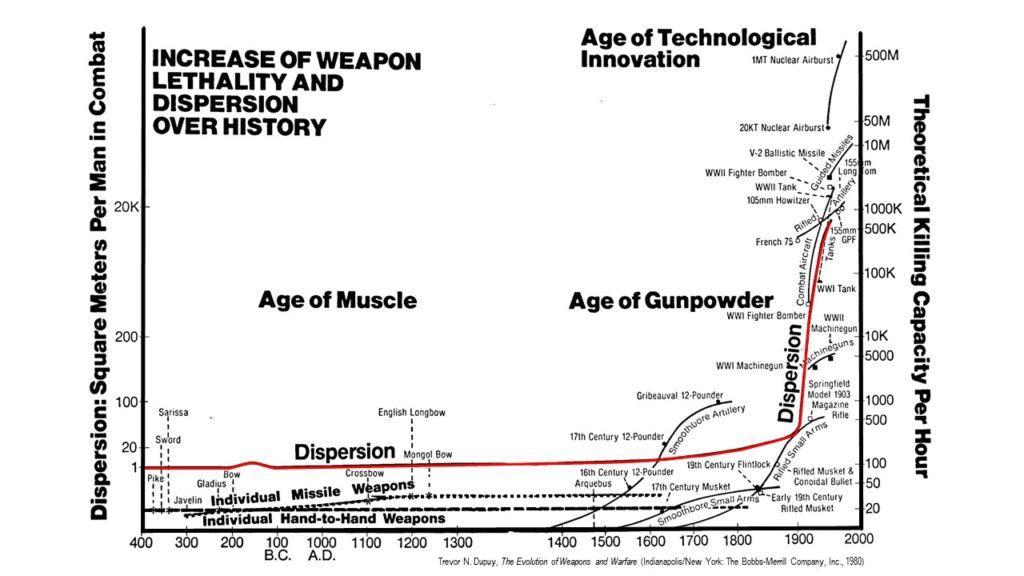

At the heart of Dupuy’s quantitative investigation of historical battles and subsequent model development is his own weapons-scoring methodology, which slowly evolved out of study efforts by the Historical Evaluation Research Organization (HERO) and its successor organizations (cf. HERO [1967] and compare with Dupuy [1979]). Early HERO [1967, pp. 7-8] work revealed that what one would today call weapons scores developed by other organizations were so poorly documented that HERO had to create its own methodology for developing the relative lethality of weapons, which eventually evolved into Dupuy’s Operational Lethality Indices (OLIs). Dupuy realized that his method was arbitrary (as indeed is its counterpart, called the operational definition, in formal scientific work), but felt that this would be ameliorated if the weapons-scoring methodology were consistently applied to historical battles. Unfortunately, this point is not clearly stated in Dupuy’s formal writings, although it was clearly (and compellingly) made by him in numerous briefings that this author heard over the years.

In other words, from a system’s perspective, the functional relationships developed by Colonel Dupuy are part of his analysis system that includes this weapons-scoring methodology consistently applied (see Fig. 1 again). The derived functional relationships do not stand alone (unless further empirical analysis shows them to hold for any weapons-scoring methodology), but function in concert with computational procedures. Another essential part of this system is Dupuy’s aggregation methodology, which combines numbers, environmental circumstances, and weapons scores to compute the strength (S) of a military force. A key innovation by Colonel Dupuy [1979, pp. 202-203] was to use a nonlinear (more precisely, a piecewise-linear) model for certain elements of force strength. This innovation precluded the occurrence of military absurdities such as air firepower being fully substitutable for ground firepower, antitank weapons being fully effective when armor targets are lacking, etc. The final part of this computational system is Dupuy’s situational-adjustment methodology, which combines the effects of operational circumstances with force strengths to determine relative combat power, e.g. Pa/Pd.
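
The piecewise-linear idea can be illustrated with a minimal Python sketch. The function name `capped_contribution`, the parameters `cap_fraction` and `excess_weight`, and all numbers are hypothetical; they are not Dupuy’s published formulas or constants. The sketch only shows how tying a weapon category’s contribution to the size of the opposing target base keeps that category from counting at full value when suitable targets are lacking.

```python
def capped_contribution(raw_score: float,
                        target_base: float,
                        cap_fraction: float = 1.0,
                        excess_weight: float = 0.0) -> float:
    """Piecewise-linear contribution of one weapon category to force strength.

    ASSUMPTION (illustrative only): the category counts at full value up to a
    ceiling proportional to the opposing target base, and any excess above the
    ceiling counts at a reduced weight (zero by default).
    """
    if raw_score < 0 or target_base < 0:
        raise ValueError("scores must be non-negative")
    ceiling = cap_fraction * target_base
    full_value_part = min(raw_score, ceiling)
    excess_part = max(0.0, raw_score - ceiling)
    return full_value_part + excess_weight * excess_part
```

With these toy numbers, an antitank score of 500 against an enemy armor score of 200 contributes 200, and it contributes nothing when the enemy has no armor at all. A purely linear model would credit the full 500 in both cases.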

To recapitulate, the determination of an Operational Lethality Index (OLI) for a weapon involves the combination of weapon lethality, quantified in terms of a Theoretical Lethality Index (TLI) (e.g. see Dupuy [1987, p. 84]), and troop dispersion[9] (e.g. see Dupuy [1987, pp. 84-85]). Weapons scores (i.e. the OLIs) are then combined with numbers (own side and enemy) and combat-environment factors to yield force strength. Six[10] different categories of weapons are aggregated, with nonlinear (i.e. piecewise-linear) models being used for the following three categories of weapons: antitank, air defense, and air firepower (i.e. close-air support). Operational variables (e.g. mobility, posture, surprise, etc.; see Dupuy [1987, p. 87]) and behavioral variables (quantified as a relative combat effectiveness value (CEV)) are then applied to force strength to determine a side’s combat-power potential.
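
The chain from weapon scores to relative combat power can be sketched in a few lines of Python. This is a deliberately simplified illustration, not the TNDM or QJM formulas: it assumes that an OLI is a TLI divided by a period dispersion factor, that force strength is a plain weighted sum scaled by one environmental factor, and that combat power is strength multiplied by one operational factor and the CEV. The class and function names and all numbers are invented, and the piecewise-linear treatment of antitank, air defense, and air firepower is omitted for brevity.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class Weapon:
    tli: float   # Theoretical Lethality Index (toy value)
    count: int   # number of such weapons in the force

@dataclass(frozen=True)
class Side:
    weapons: tuple
    environment_factor: float   # stands in for terrain, weather, etc.
    operational_factor: float   # stands in for posture, mobility, surprise, etc.
    cev: float                  # relative combat effectiveness value

def oli(tli: float, dispersion: float) -> float:
    # ASSUMPTION: OLI is the TLI scaled down by a dispersion factor.
    return tli / dispersion

def force_strength(side: Side, dispersion: float) -> float:
    # Step 1 (scoring) and step 2 (aggregation), in simplified linear form.
    total = sum(w.count * oli(w.tli, dispersion) for w in side.weapons)
    return side.environment_factor * total

def combat_power(side: Side, dispersion: float) -> float:
    # Step 3 (situational adjustment).
    return force_strength(side, dispersion) * side.operational_factor * side.cev

def relative_combat_power(attacker: Side, defender: Side, dispersion: float) -> float:
    return combat_power(attacker, dispersion) / combat_power(defender, dispersion)

attacker = Side((Weapon(1000.0, 10), Weapon(50.0, 100)), 1.0, 1.0, 1.2)
defender = Side((Weapon(1000.0, 5), Weapon(50.0, 120)), 1.0, 1.5, 1.0)

ratio_low_dispersion = relative_combat_power(attacker, defender, dispersion=1.0)
ratio_high_dispersion = relative_combat_power(attacker, defender, dispersion=3000.0)
```

Under these assumptions the two ratios agree up to rounding, which is the observation in note [9]: a dispersion factor applied identically to both sides cancels out of Pa/Pd. Changing the weapon scores themselves does not cancel, which is why the scoring method has to stay fixed.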

Requirement for Consistent Scoring of Weapons, Force Aggregation, and Situational Adjustment for Operational Circumstances

The salient point to be gleaned from Figs. 1 and 2 is that the same (or at least consistent) weapons-scoring, aggregation, and situational-adjustment methodologies must be used for both developing functional relationships and then playing them to model future combat. The corresponding computational methods function as a system (organic whole) for determining relative combat power, e.g. Pa/Pd. For the development of functional relationships from historical data, a force ratio (relative combat power of the two opposing sides, e.g. attacker’s combat power divided by that of the defender, Pa/Pd) is computed (i.e. it is a derived quantity) as the independent variable, with observed combat outcome being the dependent variable. Thus, as discussed above, this force ratio depends on the methodologies for scoring weapons, aggregating force strengths, and adjusting a force’s combat power for the operational circumstances of the engagement. It is a priori not clear that different scoring, aggregation, and situational-adjustment methodologies will lead to similar derived values. If such different computational procedures were to be used, these derived values should be recomputed and the corresponding functional relationships rederived and replotted.
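
A small numerical sketch may make the recalibration point concrete. Everything here is synthetic: the four "battles", the two yardsticks, and the log-linear form of the relationship are invented for illustration and are not historical values or Dupuy’s functional forms. The same outcomes are fitted once against force ratios from a hypothetical yardstick A and once against ratios from a hypothetical yardstick B that scores the same forces differently.

```python
import math

def fit_log_linear(force_ratios, outcomes):
    """Least-squares fit of outcome = intercept + slope * ln(force_ratio)."""
    xs = [math.log(r) for r in force_ratios]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(outcomes) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, outcomes))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope

def predict(model, force_ratio):
    intercept, slope = model
    return intercept + slope * math.log(force_ratio)

# SYNTHETIC toy data, invented for illustration only.
ratios_a = [0.5, 1.0, 2.0, 4.0]                            # same battles, yardstick A
ratios_b = [r ** 2 for r in ratios_a]                      # same battles, yardstick B
outcomes = [0.03 + 0.02 * math.log(r) for r in ratios_a]   # observed outcome measure

model_a = fit_log_linear(ratios_a, outcomes)   # relationship calibrated on yardstick A
model_b = fit_log_linear(ratios_b, outcomes)   # relationship recalibrated on yardstick B

new_ratio_b = 3.0                              # a new engagement scored with yardstick B
consistent = predict(model_b, new_ratio_b)     # curve and ratio share a yardstick
mismatched = predict(model_a, new_ratio_b)     # curve from A fed a ratio from B
```

In this toy case the mismatched estimate is noticeably larger than the consistent one, even though both curves reproduce the same four outcomes exactly. The size and direction of the discrepancy are artifacts of the invented numbers; the point is only that a curve and the ratio fed into it need a common yardstick.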

However, users of the Tactical Numerical Deterministic Model (TNDM) (or for that matter, its predecessor, the Quantified Judgment Model (QJM)) need not worry about this point because it was apparently meticulously observed by Colonel Dupuy in all his work. Portions of his work, on the other hand, have found their way into a surprisingly large number of DOD models (usually not explicitly acknowledged), but the context and range of validity of historical results have been largely ignored by others. The need for recalibration of the historical data and corresponding functional relationships has not been considered in applying Dupuy’s results for some important current DOD models.

Implications for Current DOD Models

A number of important current DOD models (namely, TACWAR and JICM discussed below) make use of some of Dupuy’s historical results without recalibrating functional relationships such as loss rates and rates of advance as a function of some force ratio (e.g. Pa/Pd). As discussed above, it is not clear that such a procedure will capture the essence of past combat experience. Moreover, in calculating losses, Dupuy first determines personnel losses (expressed as a percent loss of personnel strength, i.e., number of combatants on a side) and then calculates equipment losses as a function of this casualty rate (e.g., see Dupuy [1971, pp. 219-223], also [1990, Chapters 5 through 7][11]). These latter functional relationships are apparently not observed in the models discussed below. In fact, only Dupuy (going back to Dupuy [1979][12]) takes personnel losses to depend on a force ratio and other pertinent variables, with materiel losses being taken as derivative from this casualty rate.

For example, TACWAR determines personnel losses[13] by computing a force ratio and then consulting an appropriate casualty-rate curve (referred to as empirical data), much in the same fashion as ATLAS did[14]. However, such a force ratio is computed using a linear model with weapon values determined by the so-called antipotential-potential method[15]. Unfortunately, this procedure may not be consistent with how the empirical data (i.e. the casualty-rate curves) was developed. Further research is required to demonstrate that valid casualty estimates are obtained when different weapon scoring, aggregation, and situational-adjustment methodologies are used to develop casualty-rate curves from historical data and to use them to assess losses in aggregated combat models. Furthermore, TACWAR does not use Dupuy’s model for equipment losses (see above), although it does purport, as just noted above, to use “historical data” (e.g., see Kerlin et al. [1975, p. 22]) to compute personnel losses as a function (among other things) of a force ratio (given by a linear relationship), involving close air support values in a way never used by Dupuy. Although their force-ratio determination methodology does have logical and mathematical merit, it is not the way that the historical data was developed.
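
The mechanics described above (a linear force ratio followed by a curve lookup) can be sketched as follows. This is not TACWAR or ATLAS code: the weapon values, the force compositions, and the curve points are invented placeholders, and the function names are hypothetical. The sketch only shows the general shape of the procedure and how the reading taken from a fixed curve moves when the weapon values change.

```python
from bisect import bisect_right

def linear_force_ratio(attacker_counts, defender_counts, weapon_values):
    """Force ratio from a linear model: sum of (weapon value x count) per side."""
    attacker = sum(weapon_values[k] * n for k, n in attacker_counts.items())
    defender = sum(weapon_values[k] * n for k, n in defender_counts.items())
    return attacker / defender

def lookup_rate(curve, force_ratio):
    """Read a rate off a curve given as sorted (force_ratio, rate) points.

    Linear interpolation between points; clamped at both ends of the curve.
    """
    xs = [point[0] for point in curve]
    if force_ratio <= xs[0]:
        return curve[0][1]
    if force_ratio >= xs[-1]:
        return curve[-1][1]
    i = bisect_right(xs, force_ratio)
    (x0, y0), (x1, y1) = curve[i - 1], curve[i]
    return y0 + (y1 - y0) * (force_ratio - x0) / (x1 - x0)

# PLACEHOLDER curve and forces, invented for illustration only.
toy_curve = [(0.5, 0.01), (1.0, 0.02), (2.0, 0.03), (4.0, 0.04)]
attacker = {"tank": 20, "infantry": 100}
defender = {"tank": 10, "infantry": 100}

values_one = {"tank": 10.0, "infantry": 1.0}   # one hypothetical scoring system
values_two = {"tank": 4.0, "infantry": 1.0}    # another hypothetical scoring system

rate_one = lookup_rate(toy_curve, linear_force_ratio(attacker, defender, values_one))
rate_two = lookup_rate(toy_curve, linear_force_ratio(attacker, defender, values_two))
```

The same two forces give a force ratio of 1.5 under the first set of values and about 1.29 under the second, so the same curve returns two different rates. Which reading (if either) is valid depends on which scoring system was used when the curve was originally drawn.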

Moreover, RAND (Allen [1992]) has more recently developed what is called the situational force scoring (SFS) methodology for calculating force ratios in large-scale, aggregated-force combat situations to determine loss and movement rates. Here, SFS refers essentially to a force-aggregation and situation-adjustment methodology, which has many conceptual elements in common with Dupuy’s methodology (except, most notably, extensive testing against historical data, especially documentation of such efforts). This SFS was originally developed for RSAS[16] and is today used in JICM[17]. It also apparently uses a weapon-scoring system developed at RAND[18]. It purports (no documentation given [citation of unpublished work]) to be consistent with historical data (including the ATLAS casualty-rate curves) (Allen [1992, p. 41]), but again no consideration is given to recalibration of historical results for different weapon scoring, force-aggregation, and situational-adjustment methodologies. SFS emphasizes adjusting force strengths according to operational circumstances (the “situation”) of the engagement (including surprise), with many innovative ideas (but in some major ways has little connection with previous work of others[19]). The resulting model contains many more details than historical combat data would support. It is also a methodology that differs in many essential ways from that used previously by any investigator. In particular, it is doubtful that it develops force ratios in a manner consistent with Dupuy’s work.

Final Comments

Use of (sophisticated) mathematics for modeling past historical combat (and extrapolating it into the future for planning purposes) is no reason for ignoring Dupuy’s work. One would think that the current Military OR community would try to understand Dupuy’s work before trying to improve and extend it. In particular, Colonel Dupuy’s various computational procedures (including constants) must be considered as an organic whole (i.e. a system) supporting the development of functional relationships. If one ignores this computational system and simply tries to use some isolated aspect, the result may be interesting and even logically sound, but it probably lacks any scientific validity.

REFERENCES

P. Allen, “Situational Force Scoring: Accounting for Combined Arms Effects in Aggregate Combat Models,” N-3423-NA, The RAND Corporation, Santa Monica, CA, 1992.

L. B. Anderson, “A Briefing on Anti-Potential Potential (The Eigen-value Method for Computing Weapon Values),” WP-2, Project 23-31, Institute for Defense Analyses, Arlington, VA, March 1974.

B. W. Bennett, et al., “RSAS 4.6 Summary,” N-3534-NA, The RAND Corporation, Santa Monica, CA, 1992.

B. W. Bennett, A. M. Bullock, D. B. Fox, C. M. Jones, J. Schrader, R. Weissler, and B. A. Wilson, “JICM 1.0 Summary,” MR-383-NA, The RAND Corporation, Santa Monica, CA, 1994.

P. K. Davis and J. A. Winnefeld, “The RAND Strategic Assessment Center: An Overview and Interim Conclusions About Utility and Development Options,” R-2945-DNA, The RAND Corporation, Santa Monica, CA, March 1983.

T.N. Dupuy, Numbers, Predictions and War: Using History to Evaluate Combat Factors and Predict the Outcome of Battles, The Bobbs-Merrill Company, Indianapolis/New York, 1979.

T.N. Dupuy, Numbers, Predictions and War, Revised Edition, HERO Books, Fairfax, VA, 1985.

T.N. Dupuy, Understanding War: History and Theory of Combat, Paragon House Publishers, New York, 1987.

T.N. Dupuy, Attrition: Forecasting Battle Casualties and Equipment Losses in Modern War, HERO Books, Fairfax, VA, 1990.

General Research Corporation (GRC), “A Hierarchy of Combat Analysis Models,” McLean, VA, January 1973.

Historical Evaluation and Research Organization (HERO), “Average Casualty Rates for War Games, Based on Historical Data,” 3 Volumes in 1, Dunn Loring, VA, February 1967.

E. P. Kerlin and R. H. Cole, “ATLAS: A Tactical, Logistical, and Air Simulation: Documentation and User’s Guide,” RAC-TP-338, Research Analysis Corporation, McLean, VA, April 1969 (AD 850 355).

E.P. Kerlin, L.A. Schmidt, A.J. Rolfe, M.J. Hutzler, and D.L. Moody, “The IDA Tactical Warfare Model: A Theater-Level Model of Conventional, Nuclear, and Chemical Warfare, Volume II - Detailed Description,” R-211, Institute for Defense Analyses, Arlington, VA, October 1975 (AD B009 692L).

R. McQuie, “Military History and Mathematical Analysis,” Military Review 50, No. 5, 8-17 (1970).

S.M. Robinson, “Shadow Prices for Measures of Effectiveness, I: Linear Model,” Operations Research 41, 518-535 (1993).

J.G. Taylor, Lanchester Models of Warfare, Vols. I & II, Operations Research Society of America, Alexandria, VA, 1983. (a)

J.G. Taylor, “A Lanchester-Type Aggregated-Force Model of Conventional Ground Combat,” Naval Research Logistics Quarterly 30, 237-260 (1983). (b)

NOTES

[1] For example, see Taylor [1983a, Section 7.18], which contains a number of examples. The basic references given there may be more accessible through Robinson [1993].

[2] This term was apparently coined by L.B. Anderson [1974] (see also Kerlin et al. [1975, Chapter I, Section D.3]).

[3] The Tactical Warfare (TACWAR) model is a theater-level, joint-warfare, computer-based combat model that is currently used for decision support by the Joint Staff and essentially all CINC staffs. It was originally developed by the Institute for Defense Analyses in the mid-1970s (see Kerlin et al. [1975]), when it was referred to as TACNUC, and it has been continually upgraded up to (and including) the present day.

[4] For example, see Kerlin and Cole [1969], GRC [1973, Fig. 6-6], or Taylor [1983b, Fig. 5] (also Taylor [1983a, Section 7.13]).

[5] The only apparent difference between Dupuy [1979] and Dupuy [1985] is the addition of an appendix (Appendix C “Modified Quantified Judgment Analysis of the Bekaa Valley Battle”) to the end of the latter (pp. 241-251). Hence, the page content is apparently the same for these two books for pp. 1-239.

[6] Technically speaking, one also has the engagement type and possibly several other descriptors (denoted in Fig. 1 as reduced list of operational circumstances) as other inputs to a historical battle.

[7] In Dupuy [1979, e.g. pp. 43-46] only environmental variables are mentioned, although basically the same formulas underlie both Dupuy [1979] and Dupuy [1987]. For simplicity, Fig. 1 and 2 follow this usage and employ the term “environmental circumstances.”

[8] In Dupuy [1979, e.g. pp. 46-47] only operational variables are mentioned, although basically the same formulas underlie both Dupuy [1979] and Dupuy [1987]. For simplicity, Fig. 1 and 2 follow this usage and employ the term “operational circumstances.”

[9] Chris Lawrence has kindly brought to my attention that since the same value for troop dispersion from an historical period (e.g. see Dupuy [1987, p. 84]) is used for both the attacker and also the defender, troop dispersion does not actually affect the determination of relative combat power Pa/Pd.

[10] Eight different weapon types are considered, with three being classified as infantry weapons (e.g. see Dupuy [1979, pp. 43-44], [1981 pp. 85-86]).

[11] Chris Lawrence has kindly informed me that Dupuy’s work on relating equipment losses to personnel losses goes back to the early 1970s and even earlier (e.g. see HERO [1966]). Moreover, Dupuy’s [1992] book Future Wars gives some additional empirical evidence concerning the dependence of equipment losses on casualty rates.

[12] But actually going back much earlier as pointed out in the previous footnote.

[13] See Kerlin et al. [1975, Chapter I, Section D.1].

[14] See Footnote 4 above.

[15] See Kerlin et al. [1975, Chapter I, Section D.3]; see also Footnotes 1 and 2 above.

[16] The RAND Strategy Assessment System (RSAS) is a multi-theater aggregated combat model developed at RAND in the early 1980s (for further details see Davis and Winnefeld [1983] and Bennett et al. [1992]). It evolved into the Joint Integrated Contingency Model (JICM), which is a post-Cold War redesign of the RSAS (starting in FY92).

[17] The Joint Integrated Contingency Model (JICM) is a game-structured computer-based combat model of major regional contingencies and higher-level conflicts, covering strategic mobility, regional conventional and nuclear warfare in multiple theaters, naval warfare, and strategic nuclear warfare (for further details, see Bennett et al. [1994]).

[18] RAND apparently replaced one weapon-scoring system by another (e.g. see Allen [1992, pp. 9, 15, and 87-89]) without making any other changes in their SFS System.

[19] For example, Dupuy’s early HERO work (e.g. see Dupuy [1967]), reworks of these results by the Research Analysis Corporation (RAC) (e.g. see RAC [1973, Fig. 6-6]), and Dupuy’s later work (e.g. see Dupuy [1979]) all considered daily fractional casualties for the attacker and also for the defender as basic casualty-outcome descriptors (see also Taylor [1983b]). However, RAND does not do this, but considers the defender’s loss rate and a casualty exchange ratio as being the basic casualty-production descriptors (Allen [1992, pp. 41-42]). The great value of using the former set of descriptors (i.e. attacker and defender fractional loss rates) is that not only is casualty assessment more straightforward (especially development of functional relationships from historical data) but also qualitative model behavior is readily deduced (see Taylor [1983b] for further details).