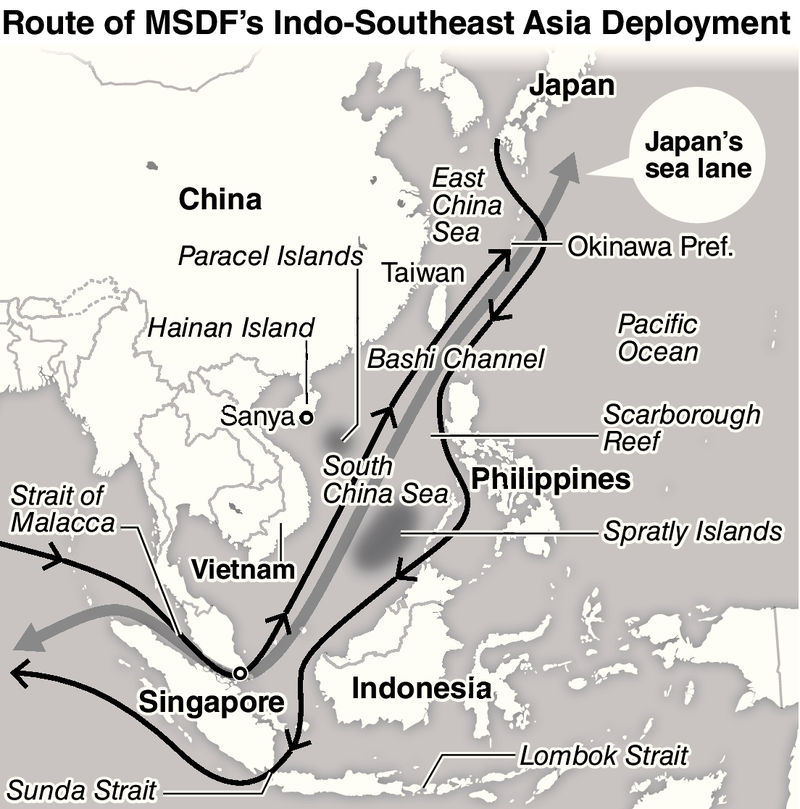

In my previous post, I looked at the Japanese Maritime Self-Defense Force (JMSDF) basic strategic missions of defending Japan from maritime invasion and securing the sea lines of communication (SLOC). This post will examine the basis for JMSDF’s approach to those tasks.

In 2011, JMSDF Vice Admiral (Ret.) Yoji Koda published an excellent article in the Naval War College Review entitled “A New Carrier Race?” Two passages from it are particularly relevant and illuminating:

In 1952, … the Japan Maritime Guard (JMG) was established as a rudimentary defense organization for the nation. The leaders of the JMG were determined that the organization would be a navy, not a reinforced coast guard. Most were combat-experienced officers (captains and below) of the former Imperial Japanese Navy, and they had clear understanding of the difference between a coast guard–type law-enforcement force and a navy. Two years later, the JMG was transformed into the JMSDF, and with leaders whose dream to build a force that had a true naval function was stronger than ever. However, they also knew the difficulty of rebuilding a real navy, in light of strict constraints imposed by the new, postwar constitution. Nonetheless, the JMSDF has built its forces and trained its sailors vigorously, with this goal in view, and it is today one of the world’s truly capable maritime forces in both quality and size.

This continuity with the World War II-era Imperial Japanese Navy (IJN) is evident in several practices. The JMSDF generally re-uses IJN names for new vessels, and it flies the same naval ensign, the Kyokujitsu-ki or “Rising Sun” flag. This flag is seen by some in South Korea and other countries as symbolic of Japan’s wartime militarism. In October 2018, the JMSDF declined an invitation to attend a naval review held by the Republic of Korea Navy (ROKN) at Jeju Island, due to a request that only national flags be flown at the event. This type of disagreement may have a material impact on the ability of the JMSDF and the ROKN, both allies of the United States, to operate together effectively.

Koda continued:

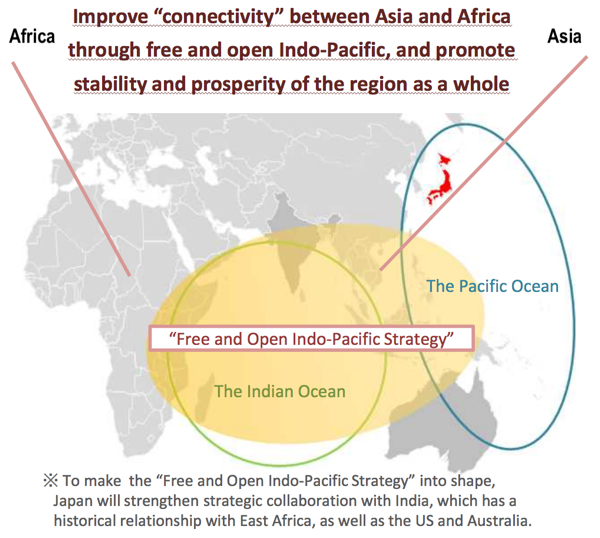

Since the founding of the Japan Self-Defense Force (JSDF) and within it the JMSDF, in 1954…the bases of Japan’s national security and defense are the capability of the JSDF and the Japanese-U.S. alliance… Thus the operational concept of the JSDF with respect to the U.S. armed forces has been one of complementary mission-sharing, in which U.S. forces concentrate on offensive operations, while the JSDF maximizes its capability for defensive operations. In other words, the two forces form what is known as a “spear and shield” relationship… [T]he JMSDF ensures that Japan can receive American reinforcements from across the Pacific Ocean, guarantees the safety of U.S. naval forces operating around Japan, and enables U.S. carrier strike groups (CSGs) to concentrate on strike operations against enemy naval forces and land targets…[so] the JMSDF has set antisubmarine warfare as its main task…ASW was made the main pillar of JMSDF missions. Even in the present security environment, twenty years after the end of the Cold War and the threat of invasion from the Soviet Union, two factors are unchanged—the Japanese-U.S. alliance and Japan’s dependence on imported natural resources. Therefore the protection of SLOCs has continued to be a main mission of the JMSDF.

It is difficult to overstate the degree to which the USN and JMSDF are integrated. The US Navy’s Seventh Fleet is headquartered in Yokosuka, Japan, where the U.S.S. Ronald Reagan, a Nimitz-class supercarrier, is stationed. Previously, this position was filled by the U.S.S. George Washington, which is currently back in Virginia undergoing refueling and overhaul. According to Stars and Stripes, she may return to Japan with a new air wing incorporating MQ-25A Stingray aerial refueling drones.

According to the Center for Naval Analyses (CNA), the USN has the following ships based in Japan:

- Yokosuka (south of Tokyo, in eastern Japan)
  - One CVN (nuclear aircraft carrier), U.S.S. Ronald Reagan
  - One AGC (amphibious command ship), U.S.S. Blue Ridge
  - Three CG (guided missile cruisers)
  - Seven DDG (guided missile destroyers)
- Sasebo (north of Nagasaki, on the southern island of Kyushu)
  - One LHD (amphibious assault ship, multi-purpose), U.S.S. Bonhomme Richard
  - One LPD (amphibious transport dock), U.S.S. Green Bay
  - Two LSD (dock landing ships)
  - Four MCM (mine countermeasures ships)

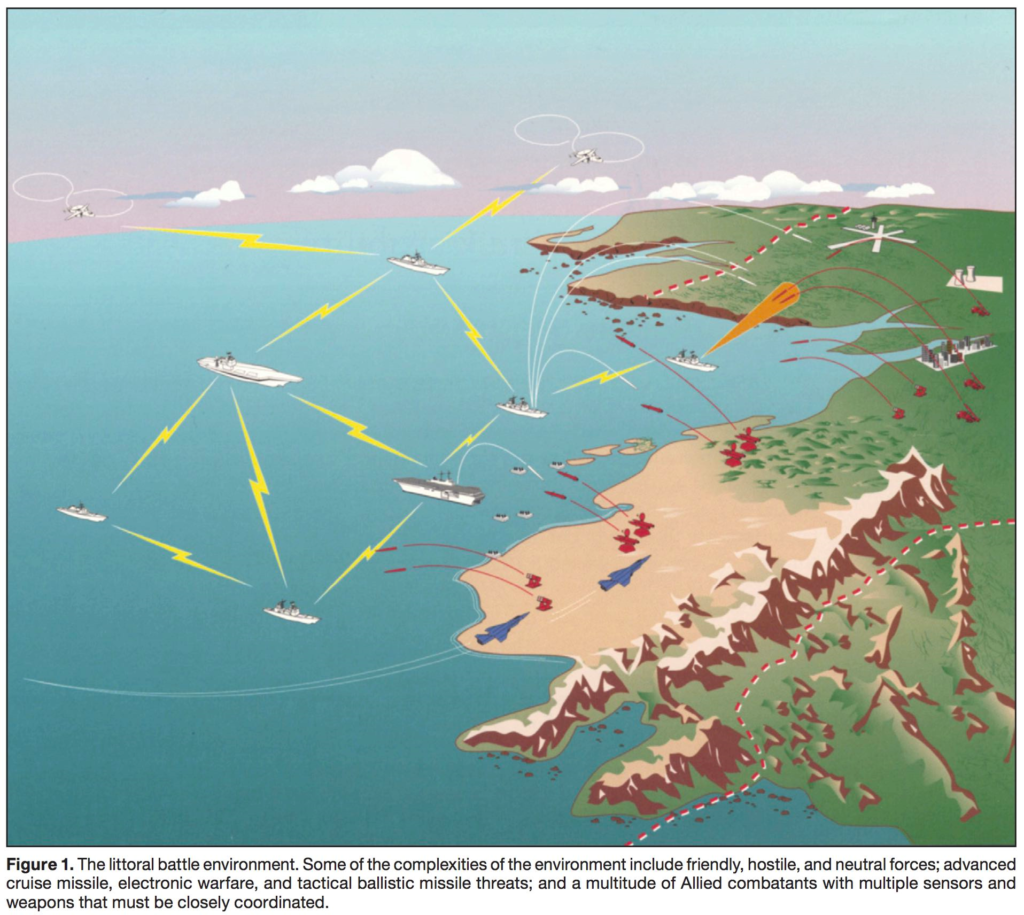

One example of this close integration is the JS Maya, a guided missile destroyer (DDG) launched on 30 July 2018. The ship is currently fitting out and is expected to be commissioned in 2020. A notable feature is the Cooperative Engagement Capability (CEC) (see graphic above). CEC is a “revolutionary approach to air defense,” according to the Johns Hopkins Applied Physics Laboratory (which is involved in the development): “it allows combat systems to share unfiltered sensor measurements data associated with tracks with rapid timing and precision to enable the [USN-JMSDF] battlegroup units to operate as one.”

Zhang Junshe, a senior research fellow at the Chinese People’s Liberation Army Naval Military Studies Research Institute, expressed concern in China’s Global Times about this capability for “potentially targeting China and threatening other countries… CEC will strengthen intelligence data sharing with the US…strengthen their [US and Japan] military alliance. From the US perspective, it can better control Japan… ‘Once absolute security is realized by Japan and the US, they could attack other countries without scruples, which will certainly destabilize other regions.’”

[This piece was originally posted on 13 July 2016.]
