Mystics & Statistics reader Stiltzkin posed two interesting questions in response to my recent post on the new blog, Logistics in War:

> Is there actually a reliable way of calculating logistical demand in correlation to “standing” ration strength/combat/daily strength army size?
>
> Did Dupuy ever focus on logistics in any of his work?

The answer to his first question is yes, there is. In fact, this has been a standard military staff function since before there were military staffs (Martin van Creveld’s book, Supplying War: Logistics from Wallenstein to Patton (2nd ed.), is an excellent general introduction). Staff officers’ guides and field manuals from various armies from the 19th century to the present are full of useful information on field supply allotments and consumption estimates intended to guide battlefield sustainment. The archives of modern armies also contain reams of bureaucratic records documenting logistical functions as they actually occurred. Logistics and supply is a woefully under-studied aspect of warfare, but not because there are no sources upon which to draw.

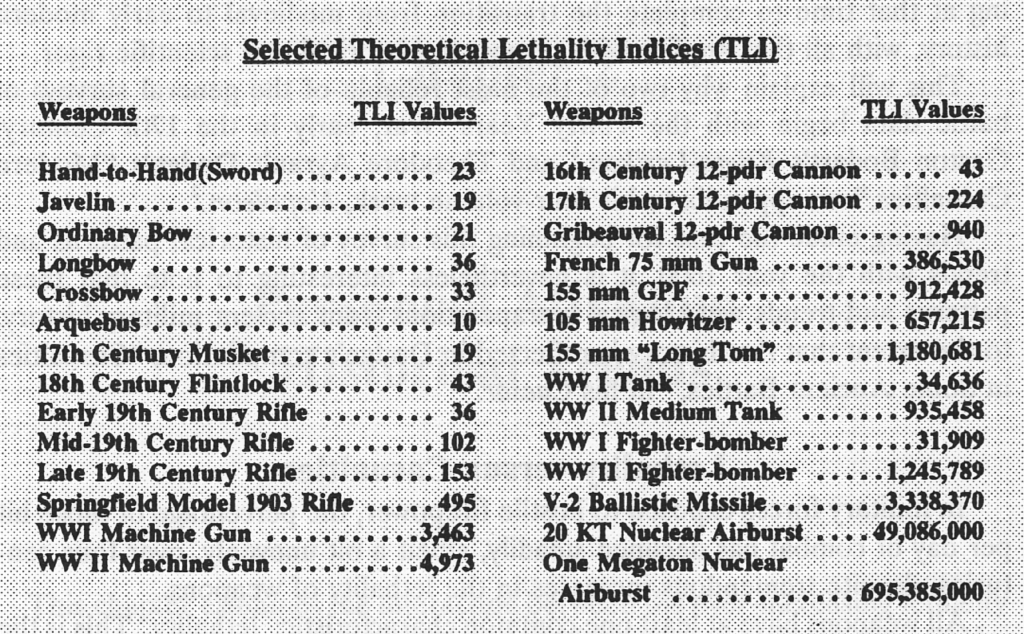

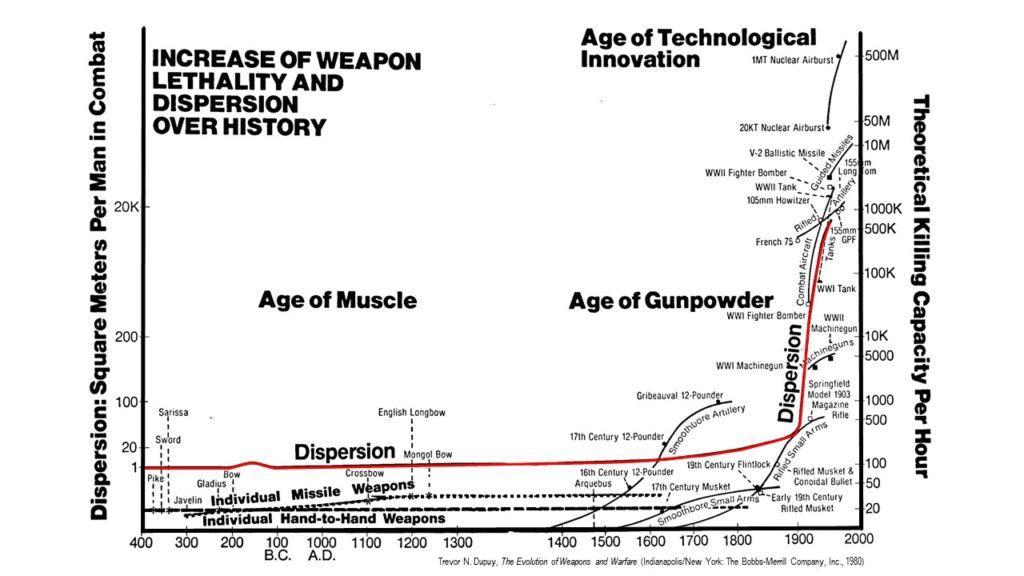

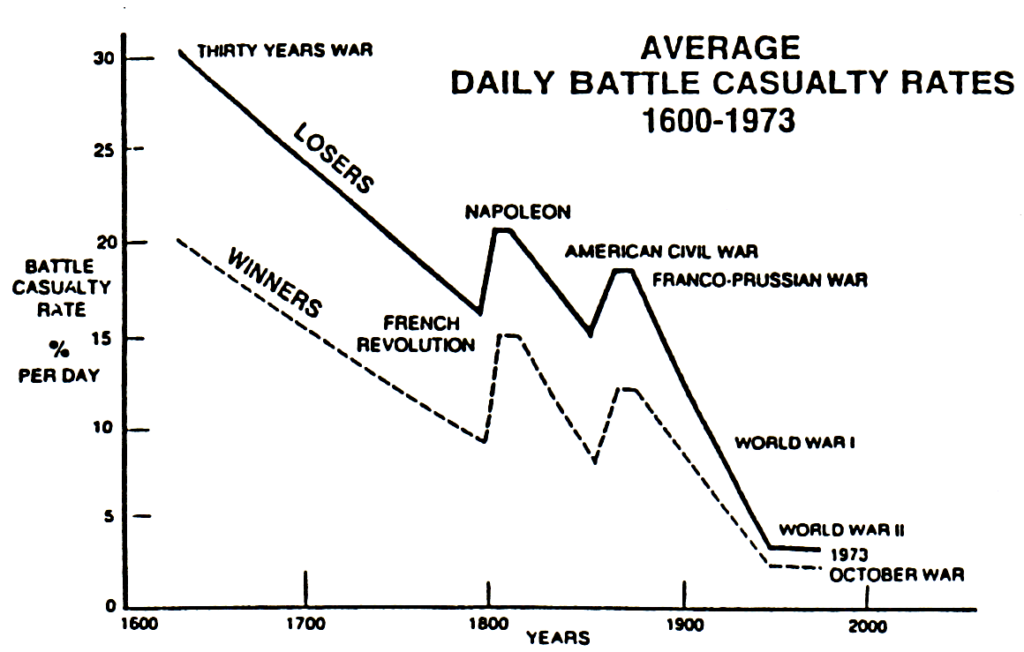

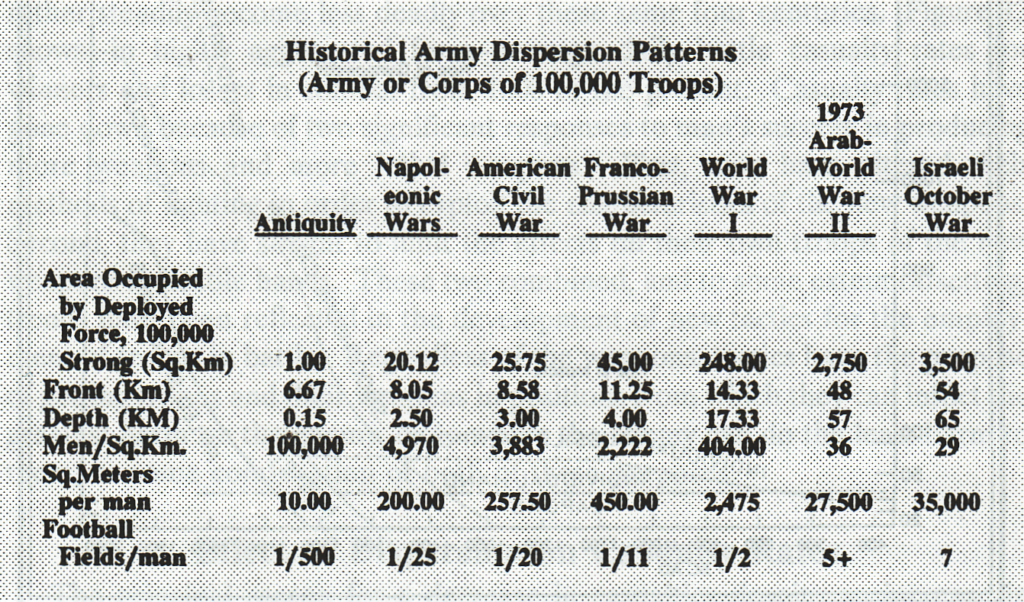

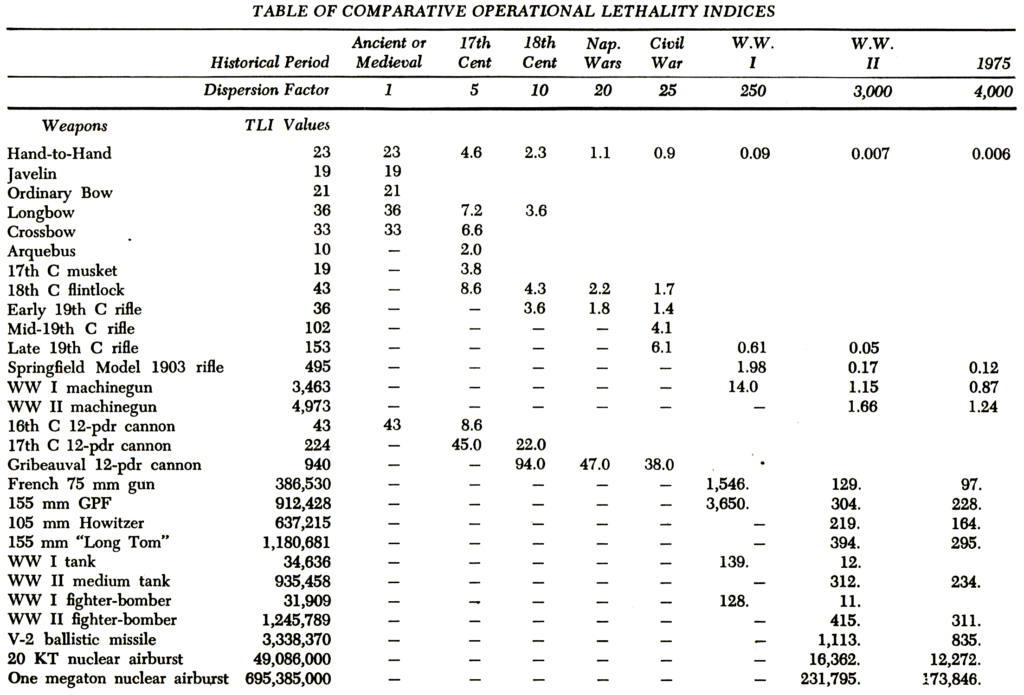

As to his second question, the answer is also yes. Dupuy addressed logistics in his work in a couple of ways. He included two logistics multipliers in his combat models: one in the calculation of the battlefield effects of weapons, the Operational Lethality Index (OLI), and the other as one element of the value for combat effectiveness, which is itself a multiplier in his combat power formula.

Dupuy considered the impact of logistics on combat to be intangible, however. From his historical study of combat, Dupuy understood that logistics impacted both weapons and combat effectiveness, but in the absence of empirical data, he relied on subject matter expertise to assign it a specific value in his model.

> Logistics or supply capability is basic in its importance to combat effectiveness. Yet, as in the case of the leadership, training, and morale factors, it is almost impossible to arrive at an objective numerical assessment of the absolute effectiveness of a military supply system. Consequently, this factor also can be applied only when solid historical data provides a basis for objective evaluation of the relative effectiveness of the opposing supply capabilities.[1]

His approach stands in contrast to other philosophies of combat model design, which hold that if a factor cannot be empirically measured, it should not be included in a model. (It is up to the reader to decide whether that is a valid approach to modeling real-world phenomena.)

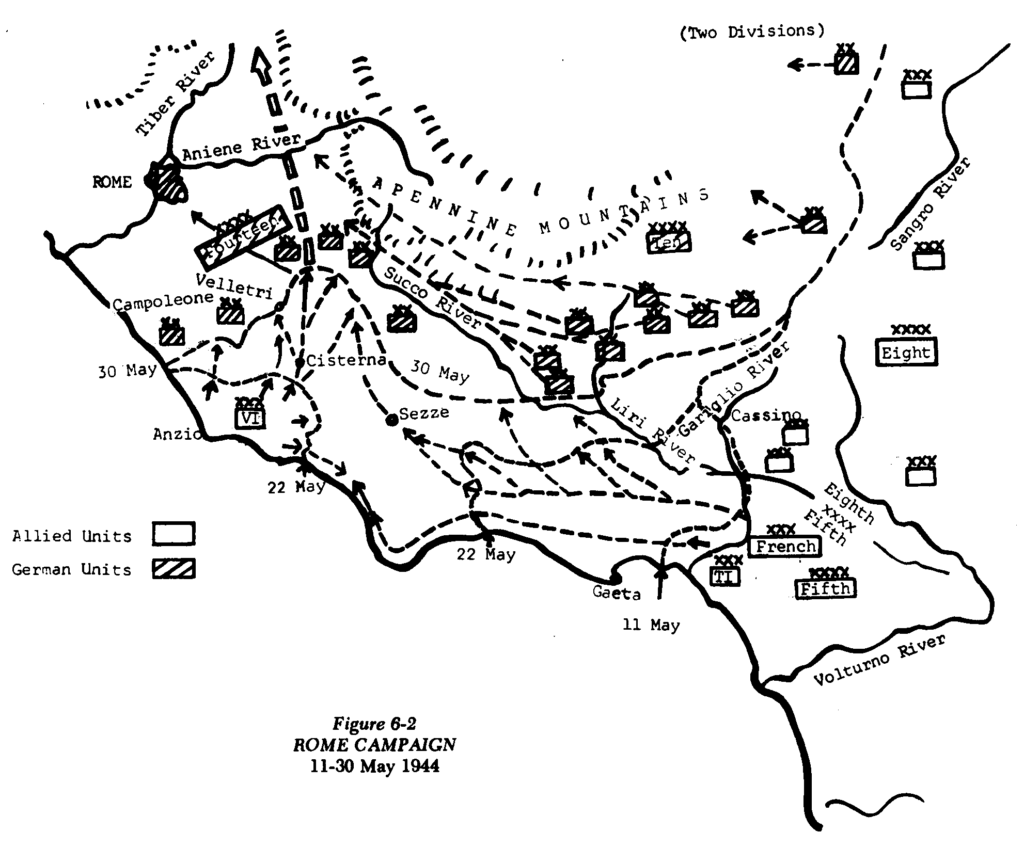

Yet, as with many aspects of the historical study of combat, Dupuy and his colleagues at the Historical Evaluation Research Organization (HERO) had taken an initial cut at empirical research on the subject. In the late 1960s and early 1970s, Dupuy and HERO conducted a series of studies for the U.S. Air Force on the historical use of air power in support of ground warfare. One line of inquiry looked at the effects of air interdiction on supply, specifically at Operation STRANGLE, an effort by the U.S. and British air forces to completely block the lines of communication and supply of German ground forces defending Rome in 1944.

Dupuy and HERO dug deeply into Allied and German primary source documentation to extract extensive data on combat strengths and losses, logistical capabilities and capacities, supply requirements, and aircraft sorties and bombing totals. Dupuy proceeded from a historically-based assumption that combat units, using expedients, experience, and training, could operate unimpaired while receiving as little as 65% of their normal supply requirements. If the level of supply dipped below 65%, the deficiency would begin impinging on combat power in direct proportion to the shortfall below that threshold (i.e., a 60% supply rate would impose a 5% decline, represented as a combat effectiveness multiplier of 0.95, and so on).
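The threshold rule described above can be sketched in a few lines of Python. This is only an illustration of the rule as summarized in this post, not Dupuy's own formulation: the function name, the parameter names, and the reading that each percentage point of shortfall below 65% costs one percentage point of combat effectiveness are assumptions drawn from the 60% example.

```python
def supply_effectiveness_multiplier(supply_pct: float,
                                    threshold_pct: float = 65.0) -> float:
    """Return a combat effectiveness multiplier for a given supply level.

    supply_pct    -- supply received, as a percentage of normal requirements
    threshold_pct -- level at or above which units operate unimpaired
                     (65% per the assumption described in the post)

    Hypothetical helper: the one-point-per-point linear penalty is an
    assumption inferred from the post's example (60% supply -> 0.95).
    """
    if supply_pct < 0:
        raise ValueError("supply_pct must be non-negative")
    if supply_pct >= threshold_pct:
        # At or above the threshold, combat power is unimpaired.
        return 1.0
    # Below the threshold, each point of shortfall costs one point
    # of combat effectiveness.
    shortfall = threshold_pct - supply_pct
    return 1.0 - shortfall / 100.0


# Print the multiplier for a few illustrative supply levels.
for pct in (100, 65, 60, 50):
    print(f"{pct}% of normal supply -> multiplier "
          f"{supply_effectiveness_multiplier(pct):.2f}")
```

The multiplier stays at 1.0 for any supply level at or above the threshold, which reflects the assumption that units can absorb a sizable shortfall before their combat power suffers.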

Using this as a baseline, Dupuy and HERO calculated the amount of aerial combat power the Allies needed to apply to impact German combat effectiveness. They determined that Operation STRANGLE was able to reduce German supply capacity to about 41.8% of normal, which yielded a reduction in the combat power of German ground combat forces by an average of 6.8%.

He cautioned that these calculations were “directly relatable only to the German situation as it existed in Italy in late March and early April 1944.” As detailed as the analysis was, Dupuy stated that it “may be an oversimplification of a most complex combination of elements, including road and railway nets, supply levels, distribution of targets, and tonnage on targets. This requires much further exhaustive analysis in order to achieve confidence in this relatively simple relationship of interdiction effort to supply capability.”[2]

The historical work done by Dupuy and HERO on logistics and combat appears unique, but it seems highly relevant. There is no lack of detailed data from which to conduct further inquiries. The only impediment appears to be lack of interest.

NOTES

[1] Trevor N. Dupuy, Numbers, Predictions and War: Using History to Evaluate Combat Factors and Predict the Outcome of Battles (Indianapolis; New York: The Bobbs-Merrill Co., 1979), p. 38.

[2] Ibid., pp. 78-94.

[NOTE: This post was edited to clarify the effect of supply reduction through aerial interdiction in the Operation STRANGLE study.]
