[The article below is reprinted from History, Numbers And War: A HERO Journal, Vol. 1, No. 1, Spring 1977, pp. 34-52]

The Lanchester Equations and Historical Warfare: An Analysis of Sixty World War II Land Engagements

By Janice B. Fain

Background and Objectives

The method by which combat losses are computed is one of the most critical parts of any combat model. The Lanchester equations, which state that a unit’s combat losses depend on the size of its opponent, are widely used for this purpose.

In addition to their use in complex dynamic simulations of warfare, the Lanchester equations have also served as simple mathematical models. In fact, during the last decade or so there has been an explosion of theoretical developments based on them. By now their variations and modifications are numerous, and “Lanchester theory” has become almost a separate branch of applied mathematics. However, compared with the effort devoted to theoretical developments, there has been relatively little empirical testing of the basic thesis that combat losses are related to force sizes.

One of the first empirical studies of the Lanchester equations was Engel’s classic work on the Iwo Jima campaign in which he found a reasonable fit between computed and actual U.S. casualties (Note 1). Later studies were somewhat less supportive (Notes 2 and 3), but an investigation of Korean war battles showed that, when the simulated combat units were constrained to follow the tactics of their historical counterparts, casualties during combat could be predicted to within 1 to 13 percent (Note 4).

Taken together, these various studies suggest that, while the Lanchester equations may be poor descriptors of large battles extending over periods during which the forces were not constantly in combat, they may be adequate for predicting losses while the forces are actually engaged in fighting. The purpose of the work reported here is to investigate 60 carefully selected World War II engagements. Since the durations of these battles were short (typically two to three days), it was expected that the Lanchester equations would show a closer fit than was found in studies of larger battles. In particular, one of the objectives was to repeat, in part, Willard’s work on battles of the historical past (Note 3).

The Data Base

Probably the most nearly complete and accurate collection of combat data is the data on World War II compiled by the Historical Evaluation and Research Organization (HERO). From their data HERO analysts selected, for quantitative analysis, the following 60 engagements from four major Italian campaigns:

Salerno, 9-18 Sep 1943, 9 engagements

Volturno, 12 Oct-8 Dec 1943, 20 engagements

Anzio, 22 Jan-29 Feb 1944, 11 engagements

Rome, 14 May-4 June 1944, 20 engagements

The complete data base is described in a HERO report (Note 5). The work described here is not the first analysis of these data. Statistical analyses of weapon effectiveness and the testing of a combat model (the Quantified Judgment Method, QJM) have been carried out (Note 6). The work discussed here examines these engagements from the viewpoint of the Lanchester equations to consider the question: “Are casualties during combat related to the numbers of men in the opposing forces?”

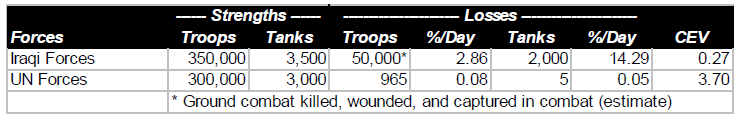

The variables chosen for this analysis are shown in Table 1. The “winners” of the engagements were specified by HERO on the basis of casualties suffered, distance advanced, and subjective estimates of the percentage of the commander’s objective achieved. Variable 12, the Combat Power Ratio, is based on the Operational Lethality Indices (OLI) of the units (Note 7).

The general characteristics of the engagements are briefly described. Of the 60, there were 19 attacks by British forces, 28 by U.S. forces, and 13 by German forces. The attacker was successful in 34 cases; the defender, in 23; and the outcomes of 3 were ambiguous. With respect to terrain, 19 engagements occurred in flat terrain; 24 in rolling, or intermediate, terrain; and 17 in rugged, or difficult, terrain. Clear weather prevailed in 40 cases; 13 engagements were fought in light or intermittent rain; and 7 in medium or heavy rain. There were 28 spring and summer engagements and 32 fall and winter engagements.

Comparison of World War II Engagements With Historical Battles

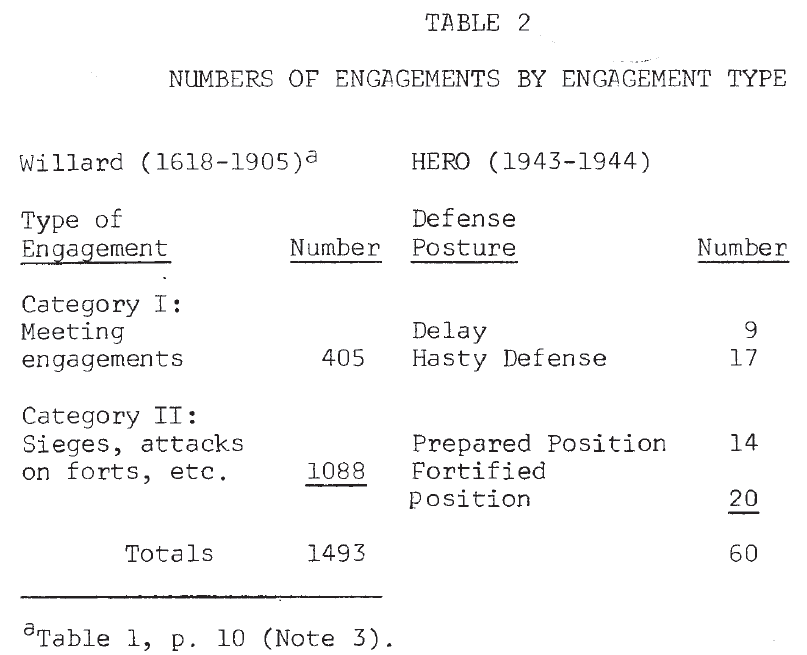

Since one purpose of this work is to repeat, in part, Willard’s analysis, comparison of these World War II engagements with the historical battles (1618-1905) studied by him will be useful. Table 2 shows a comparison of the distribution of battles by type. Willard’s cases were divided into two categories: I. meeting engagements, and II. sieges, attacks on forts, and similar operations. HERO’s World War II engagements were divided into four types based on the posture of the defender: 1. delay, 2. hasty defense, 3. prepared position, and 4. fortified position. If postures 1 and 2 are considered very roughly equivalent to Willard’s category I, then in both data sets the division into the two gross categories is approximately even.

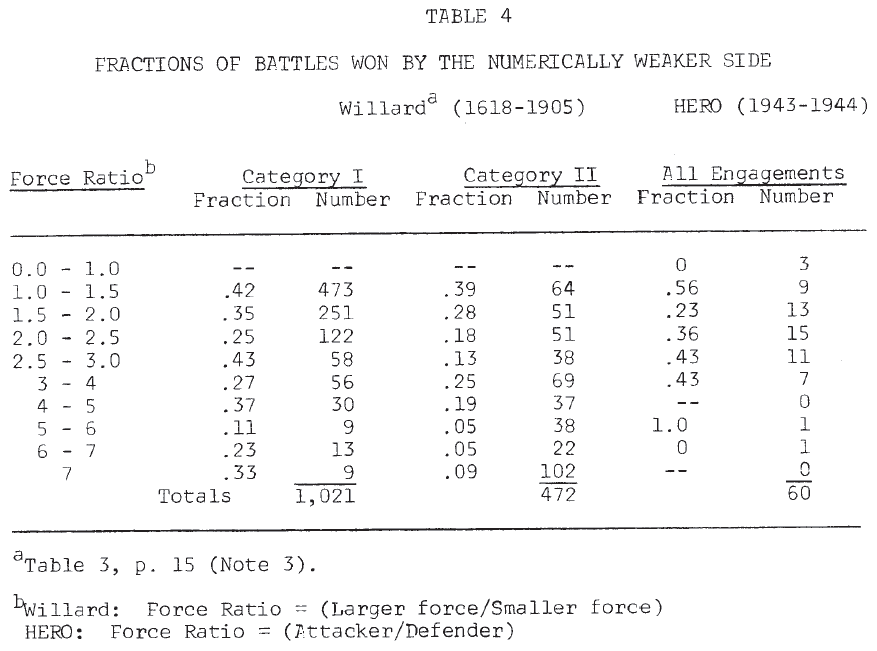

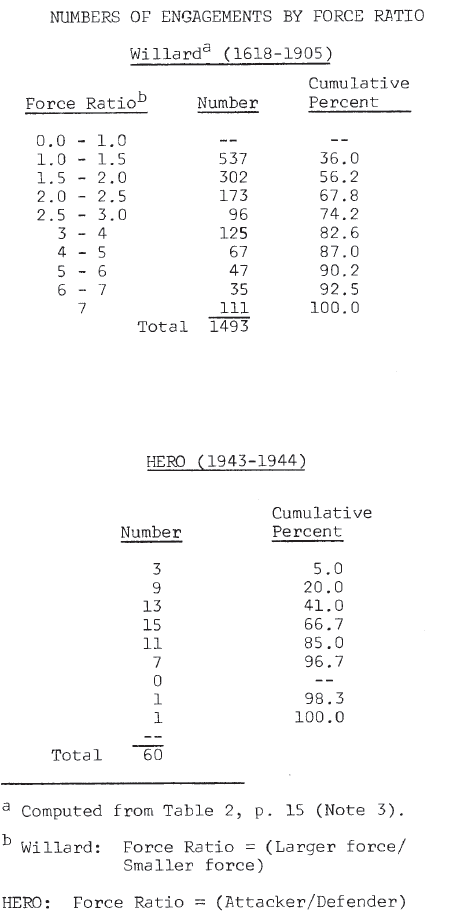

The distribution of engagements across force ratios, given in Table 3, indicates some differences. Willard’s engagements tend to cluster at the lower end of the scale (1-2) and at the higher end (4 and above), while the majority of the World War II engagements are found in the mid-range (1.5-4) (Note 8). The frequency with which the numerically inferior force achieved victory is shown in Table 4. In neither data set are force ratios good predictors of success in battle (Note 9).

Results of the Analysis

Willard’s Correlation Analysis

There are two forms of the Lanchester equations. One represents the case in which firing units on both sides know the locations of their opponents and can shift their fire to a new target when a “kill” is achieved. This leads to the “square” law where the loss rate is proportional to the opponent’s size. The second form represents that situation in which only the general location of the opponent is known. This leads to the “linear” law in which the loss rate is proportional to the product of both force sizes.

As Willard points out, large battles are made up of many smaller fights. Some of these obey one law while others obey the other, so that the overall result should be a combination of the two. Starting with a general formulation of Lanchester’s equations, where g is the exponent of the target unit’s size (that is, g is 0 for the square law and 1 for the linear law), he derives the following linear equation:

log (nc/mc) = log E + g log (mo/no) (1)

where nc and mc are the casualties, E is related to the exchange ratio, and mo and no are the initial force sizes. Linear regression produces a value for g. However, instead of lying between 0 and 1, as expected, the g’s range from -.27 to -.87, with the majority lying around -.5. (Willard obtains several values for g by dividing his data base in various ways: by force ratio, by casualty ratio, by historical period, and so forth.) A negative g value is unpleasant. As Willard notes:

Military theorists should be disconcerted to find g < 0, for in this range the results seem to imply that if the Lanchester formulation is valid, the casualty-producing power of troops increases as they suffer casualties (Note 3).

From his results, Willard concludes that his analysis does not justify the use of Lanchester equations in large-scale situations (Note 10).
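The regression behind equation (1) can be sketched in a few lines. This is a minimal illustration, not Willard’s or HERO’s actual procedure: the function name `fit_exponent`, the use of base-10 logarithms, and the `SYNTHETIC` engagements (generated without noise from E = 1.5 and g = 0.5) are all assumptions made for the example. The slope g does not depend on the logarithm base; the intercept does.

```python
import math


def fit_exponent(engagements):
    """Least-squares fit of log(nc/mc) = log E + g * log(mo/no).

    engagements: sequence of (mo, no, mc, nc) tuples, i.e. the initial
    strengths and the casualties of sides m and n.
    Returns (g, log_E, r): slope, intercept, correlation coefficient.
    """
    engagements = list(engagements)
    xs = [math.log10(mo / no) for mo, no, _mc, _nc in engagements]
    ys = [math.log10(nc / mc) for _mo, _no, mc, nc in engagements]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    g = sxy / sxx                      # slope: the exponent g
    log_e = mean_y - g * mean_x        # intercept: log E
    r = sxy / math.sqrt(sxx * syy)     # correlation coefficient
    return g, log_e, r


# Synthetic, noise-free engagements built from E = 1.5 and g = 0.5.
# These are NOT HERO or Willard data; they only exercise the fit.
SYNTHETIC = [
    (ratio * 10_000, 10_000, 1_000, 1_000 * 1.5 * ratio ** 0.5)
    for ratio in (0.5, 1.0, 2.0, 4.0)
]

g, log_e, r = fit_exponent(SYNTHETIC)
print(f"g = {g:.3f}, E = {10 ** log_e:.3f}, r = {r:.3f}")
```

With real engagement data the points scatter widely, so the fitted g can fall outside the interval from 0 to 1 even though the fit recovers it exactly for data generated from the model.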

Analysis of the World War II Engagements

Willard’s computations were repeated for the HERO data set. For these engagements, regression produced a value of -.594 for g (Note 11), in striking agreement with Willard’s results. Following his reasoning would lead to the conclusion either that the Lanchester equations do not represent these engagements, or that the casualty-producing power of forces increases as their size decreases.

However, since the Lanchester equations are so convenient analytically and their use is so widespread, it appeared worthwhile to reconsider this conclusion. In deriving equation (1), Willard used binomial expansions in which he retained only the leading terms. It seemed possible that the poor results might be due, in part, to this approximation. If the first two terms of these expansions are retained, the following equation results:

log (nc/mc) = log E + g log [(mo-mc)/(no-nc)] (2)

Repeating this regression on the basis of this equation leads to g = -.413 (Note 12), hardly an improvement over the initial results.

A second attempt was made to salvage this approach. Starting with raw OLI scores (Note 7), HERO analysts have computed “combat potentials” for both sides in these engagements, taking into account the operational factors of posture, vulnerability, and mobility; environmental factors like weather, season, and terrain; and (when the record warrants) psychological factors like troop training, morale, and the quality of leadership. Replacing the factor (mo/no) in Equation (1) by the combat power ratio produces the result g = .466 (Note 13).

While this is an apparent improvement in the value of g, it is achieved at the expense of somewhat distorting the Lanchester concept. It does preserve the functional form of the equations, but it requires a somewhat strange definition of “killing rates.”

Analysis Based on the Differential Lanchester Equations

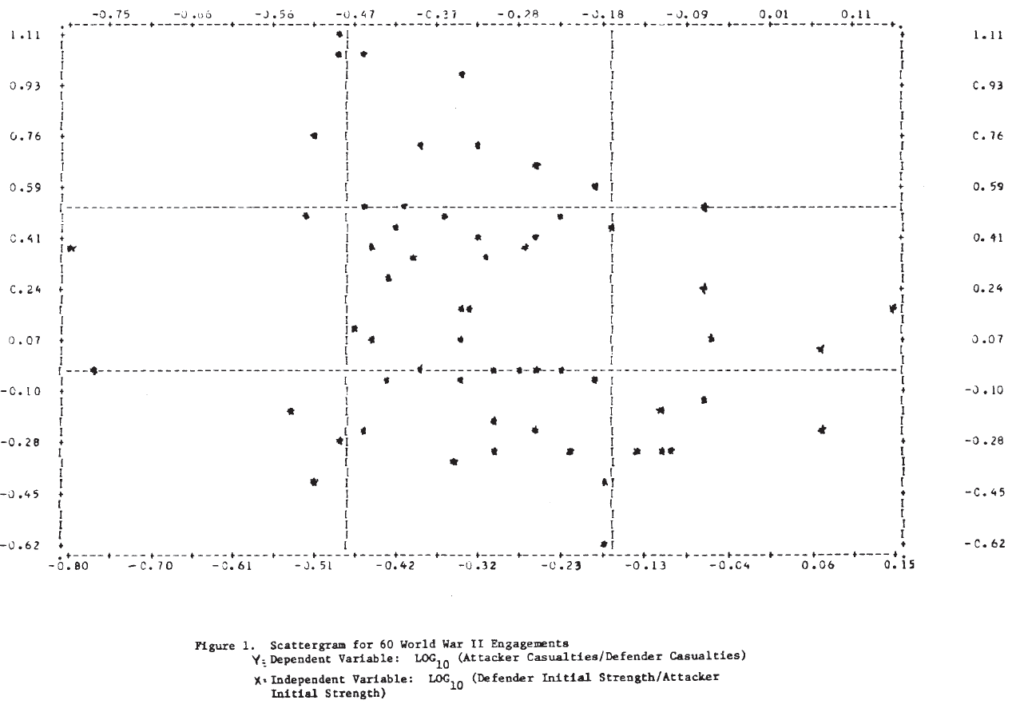

Analysis of the type carried out by Willard appears to produce very poor results for these World War II engagements. Part of the reason for this is apparent from Figure 1, which shows the scatterplot of the dependent variable, log (nc/mc), against the independent variable, log (mo/no). It is clear that no straight line will fit these data very well, and one with a positive slope would not be much worse than the “best” line found by regression. To expect the exponent to account for the wide variation in these data seems unreasonable.

Here, a simpler approach will be taken. Rather than use the data to attempt to discriminate directly between the square and the linear laws, they will be used to estimate linear coefficients under each assumption in turn, starting with the differential formulation rather than the integrated equations used by Willard.

In their simplest differential form, the Lanchester equations may be written:

Square law: dA/dt = -kd D and dD/dt = -ka A (3)

Linear law: dA/dt = -k’d AD and dD/dt = -k’a AD (4)

where

A(D) is the size of the attacker (defender)

dA/dt (dD/dt) is the attacker’s (defender’s) loss rate,

ka, k’a (kd, k’d) are the attacker’s (defender’s) killing rates
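To make the two laws concrete, the sketch below integrates them with simple Euler steps, treating both strengths as decreasing under enemy fire. It is an illustration only: the function name `simulate`, the step size, and every numerical value in the usage lines are hypothetical and are not taken from the HERO data.

```python
def simulate(attacker, defender, k_a, k_d, law="square", days=3,
             steps_per_day=100):
    """Euler integration of the Lanchester equations.

    k_a is the attacker's killing rate (it drives the defender's losses);
    k_d is the defender's killing rate (it drives the attacker's losses).
    Returns the surviving (attacker, defender) strengths after `days`.
    """
    dt = 1.0 / steps_per_day
    a, d = float(attacker), float(defender)
    for _ in range(days * steps_per_day):
        if law == "square":
            # Aimed fire: losses proportional to the opponent's size.
            loss_a = k_d * d * dt
            loss_d = k_a * a * dt
        elif law == "linear":
            # Area fire: losses proportional to the product of both sizes.
            loss_a = k_d * a * d * dt
            loss_d = k_a * a * d * dt
        else:
            raise ValueError("law must be 'square' or 'linear'")
        a = max(a - loss_a, 0.0)
        d = max(d - loss_d, 0.0)
    return a, d


# Hypothetical three-day engagement: 10,000 attackers against 5,000 defenders.
print(simulate(10_000, 5_000, k_a=0.01, k_d=0.02, law="square"))
print(simulate(10_000, 5_000, k_a=1e-6, k_d=3e-6, law="linear"))
```

Note that the killing rates carry different units under the two laws (per day for the square law, per man per day for the linear law), so their numerical values are not directly comparable.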

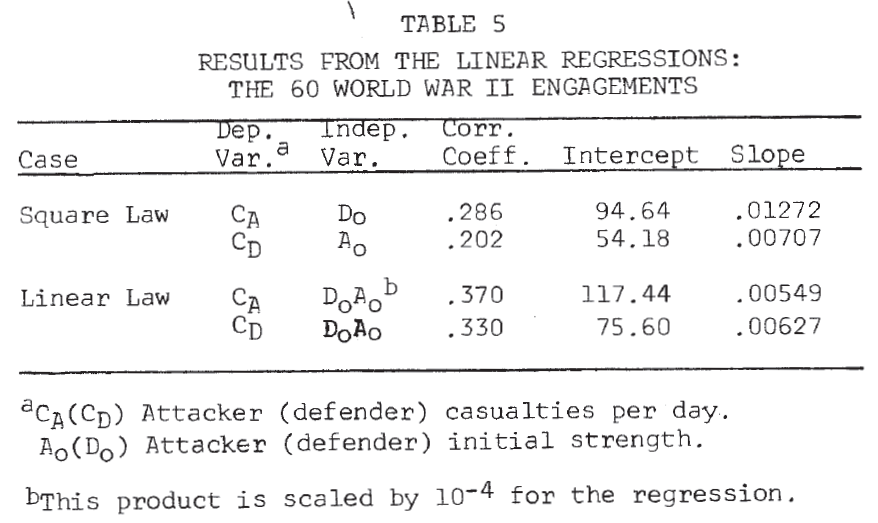

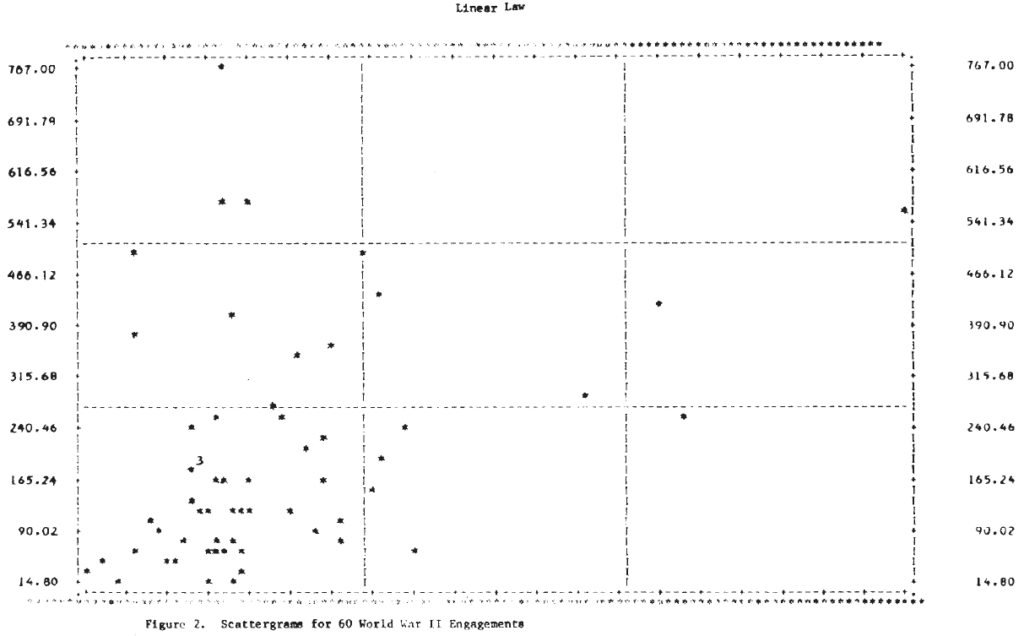

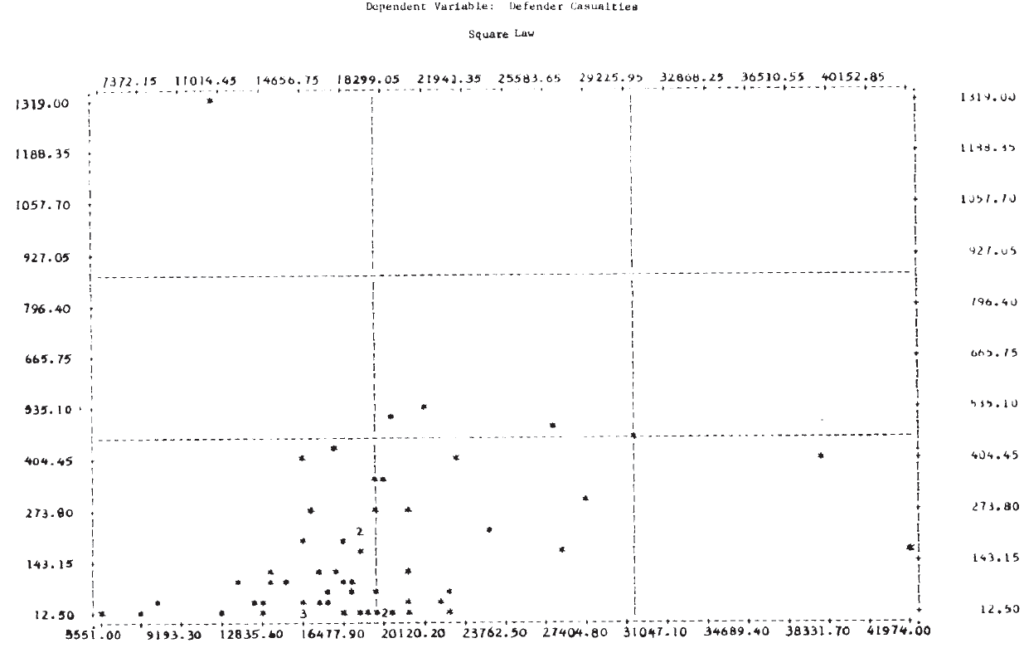

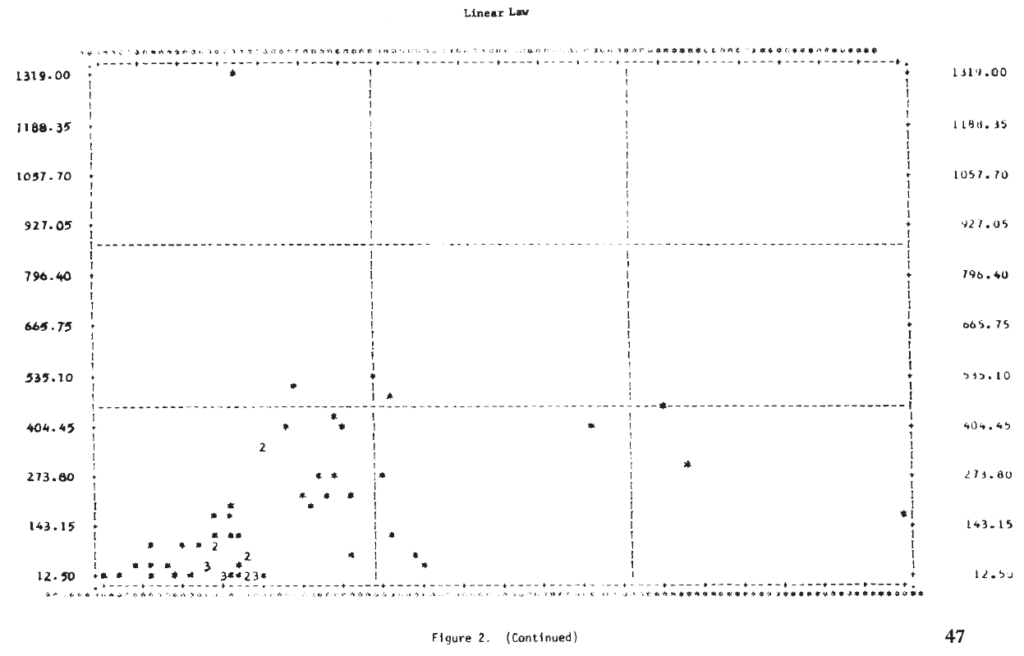

For this analysis, the day is taken as the basic time unit, and the loss rate per day is approximated by the casualties per day. Results of the linear regressions are given in Table 5. No conclusions should be drawn from the fact that the correlation coefficients are higher in the linear law case since this is expected for purely technical reasons (Note 14). A better picture of the relationships is again provided by the scatterplots in Figure 2. It is clear from these plots that, as in the case of the logarithmic forms, a single straight line will not fit the entire set of 60 engagements for either of the dependent variables.
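The regressions described above can be sketched as follows. Under the square law the attacker’s daily casualties are regressed on the defender’s strength, and under the linear law on the product of the two strengths; the slope is then the estimated killing rate. The helper names `regress` and `killing_rate_fits` and the `SYNTHETIC` engagements (generated from a pure square law with kd = 0.02 and ka = 0.01) are assumptions for illustration, not the HERO computation or data.

```python
import math


def regress(xs, ys):
    """Return (slope, intercept, r) of the least-squares line y = a + b*x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x, sxy / math.sqrt(sxx * syy)


def killing_rate_fits(engagements):
    """engagements: list of (A, D, attacker_losses_per_day,
    defender_losses_per_day). Returns one fit per (side, law) pair."""
    a = [e[0] for e in engagements]
    d = [e[1] for e in engagements]
    a_loss = [e[2] for e in engagements]
    d_loss = [e[3] for e in engagements]
    product = [x * y for x, y in zip(a, d)]
    return {
        ("attacker", "square"): regress(d, a_loss),        # slope ~ kd
        ("attacker", "linear"): regress(product, a_loss),  # slope ~ k'd
        ("defender", "square"): regress(a, d_loss),        # slope ~ ka
        ("defender", "linear"): regress(product, d_loss),  # slope ~ k'a
    }


# Synthetic engagements obeying the square law exactly (kd = 0.02, ka = 0.01).
# These are NOT historical values.
SYNTHETIC = [
    (A, D, 0.02 * D, 0.01 * A)
    for A, D in [(10_000, 5_000), (15_000, 6_000), (8_000, 8_000),
                 (20_000, 4_000)]
]

for key, (slope, intercept, r) in killing_rate_fits(SYNTHETIC).items():
    print(key, f"slope={slope:.3g} intercept={intercept:.3g} r={r:.3f}")
```

An intercept far from zero is a warning sign for either law, since both equations predict no casualties when the opposing force is absent.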

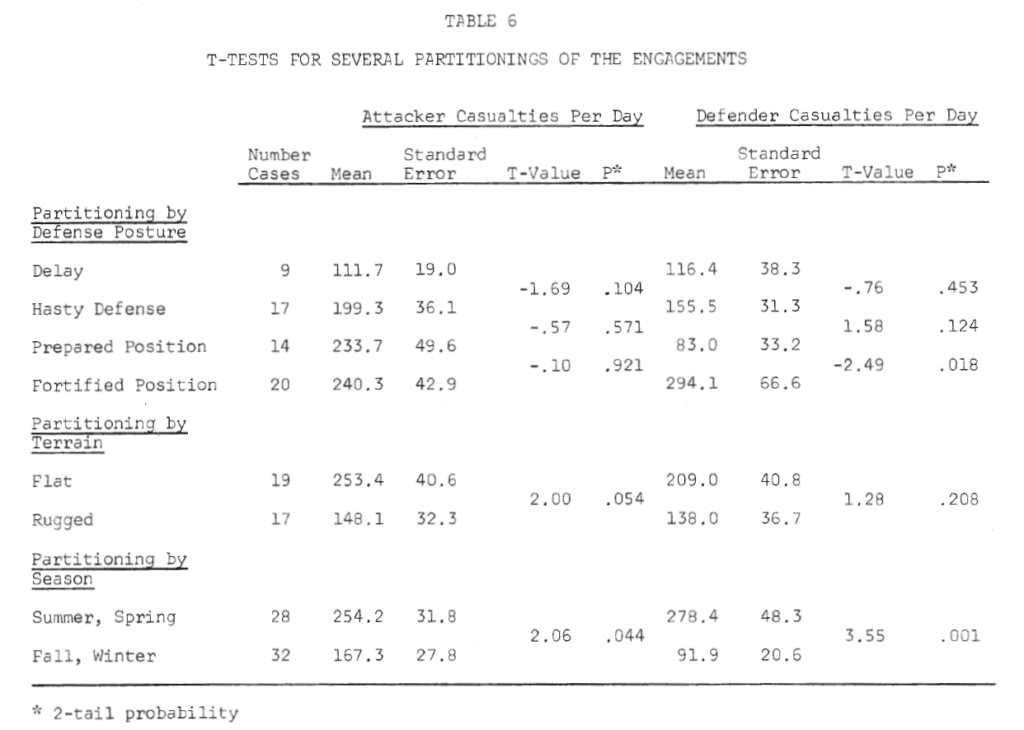

To investigate ways in which the data set might profitably be subdivided for analysis, T-tests of the means of the dependent variable were made for several partitionings of the data set. The results, shown in Table 6, suggest that dividing the engagements by defense posture might prove worthwhile.
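A comparison of group means of this kind can be sketched with a two-sample t statistic. The source does not say which variant of the t-test was used; the sketch below assumes Welch’s unequal-variance form, and the function name `welch_t` and the two sample lists are invented for illustration only.

```python
import math
from statistics import mean, variance


def welch_t(group_a, group_b):
    """Welch's t statistic and its approximate degrees of freedom."""
    na, nb = len(group_a), len(group_b)
    va = variance(group_a) / na   # squared standard error of mean, group A
    vb = variance(group_b) / nb   # squared standard error of mean, group B
    t = (mean(group_a) - mean(group_b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (na - 1) + vb ** 2 / (nb - 1))
    return t, df


# Made-up daily loss rates for two hypothetical partitions of a data set,
# for example engagements against two different defense postures.
partition_1 = [1.0, 2.0, 3.0, 4.0, 5.0]
partition_2 = [2.0, 4.0, 6.0, 8.0, 10.0]
t, df = welch_t(partition_1, partition_2)
print(f"t = {t:.3f}, df = {df:.2f}")
```

The statistic would then be compared against a t distribution with the computed degrees of freedom to judge whether the partition separates the engagements meaningfully.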

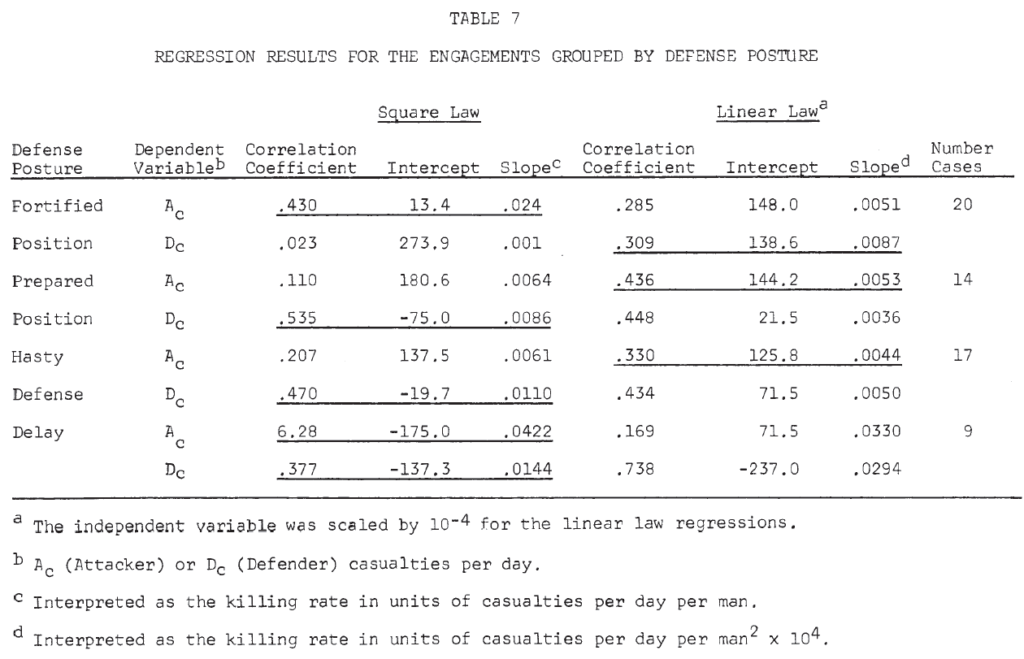

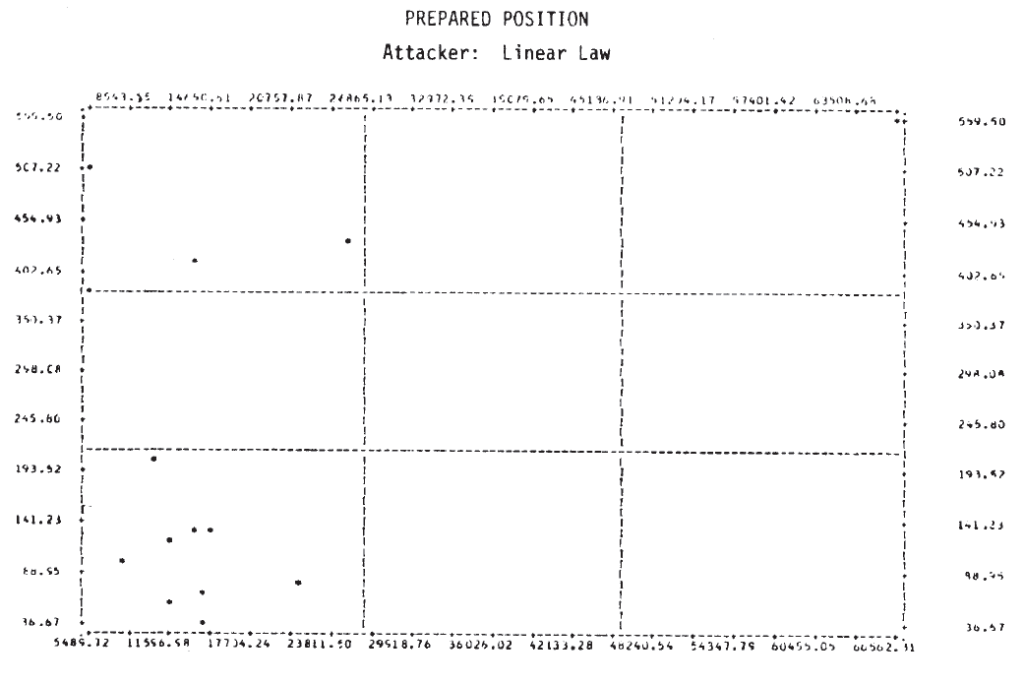

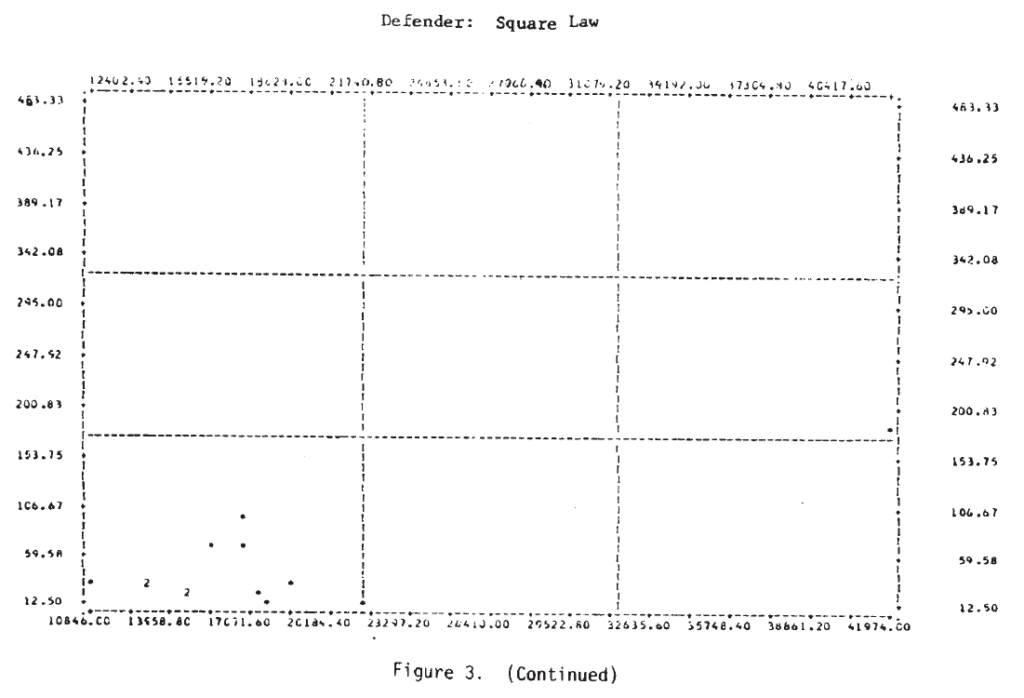

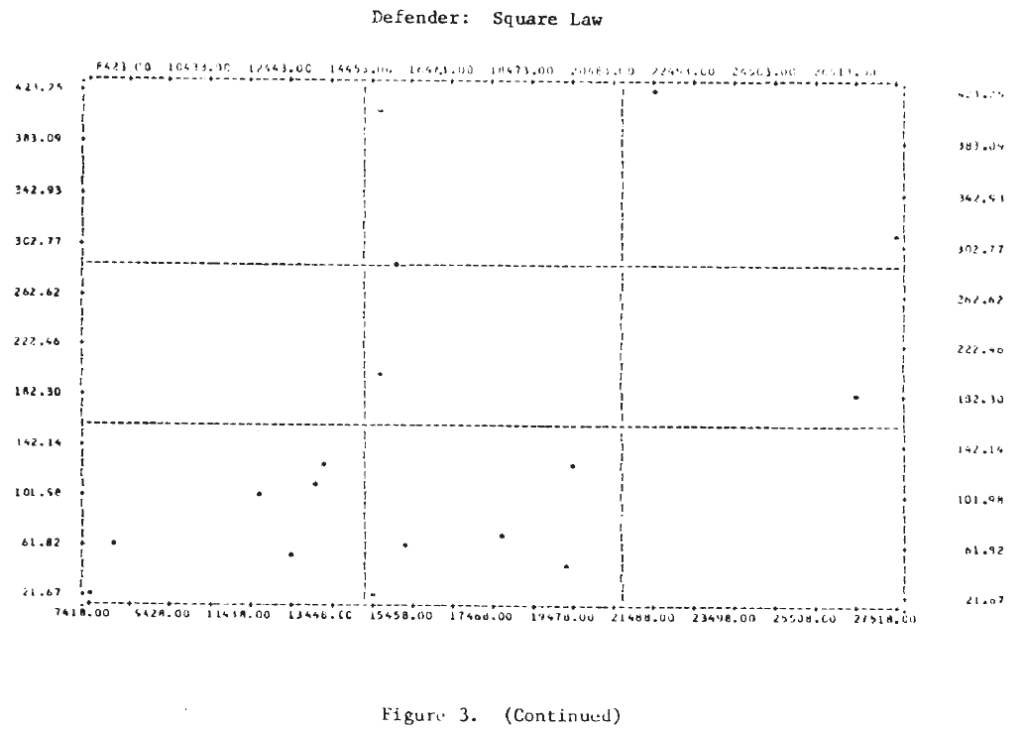

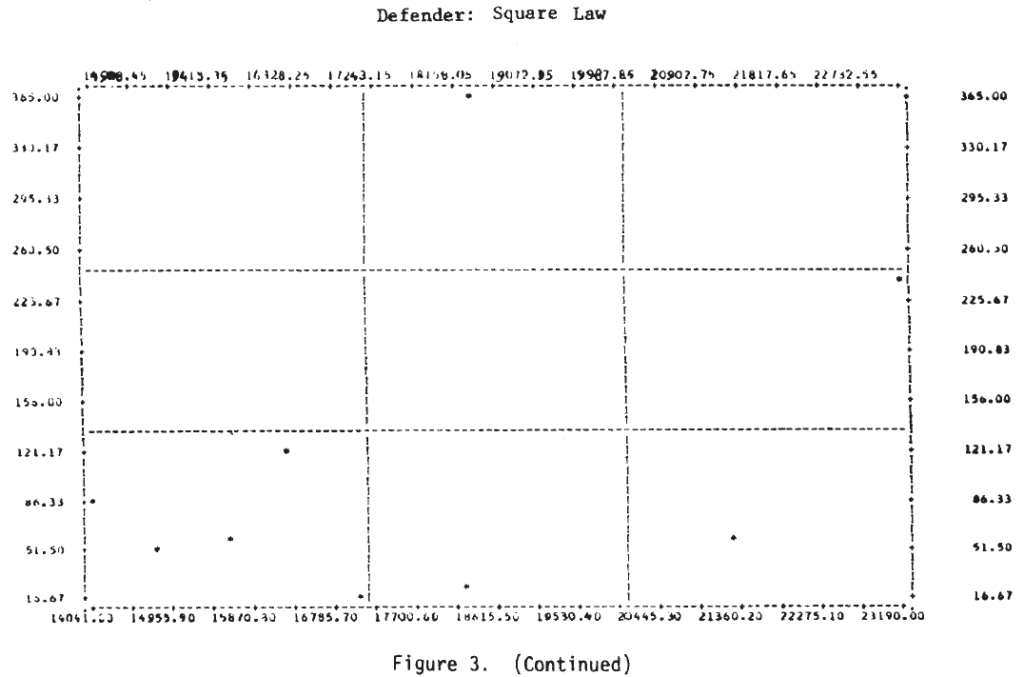

Results of the linear regressions by defense posture are shown in Table 7. For each posture, the equation that seemed to give a better fit to the data is underlined (Note 15). From this table, the following very tentative conclusions might be drawn:

- In an attack on a fortified position, the attacker suffers casualties by the square law; the defender suffers casualties by the linear law. That is, the defender is aware of the attacker’s position, while the attacker knows only the general location of the defender. (This is similar to Deitchman’s guerrilla model; see Note 16.)

- This situation is apparently reversed in the cases of attacks on prepared positions and hasty defenses.

- Delaying situations seem to be treated better by the square law for both attacker and defender.

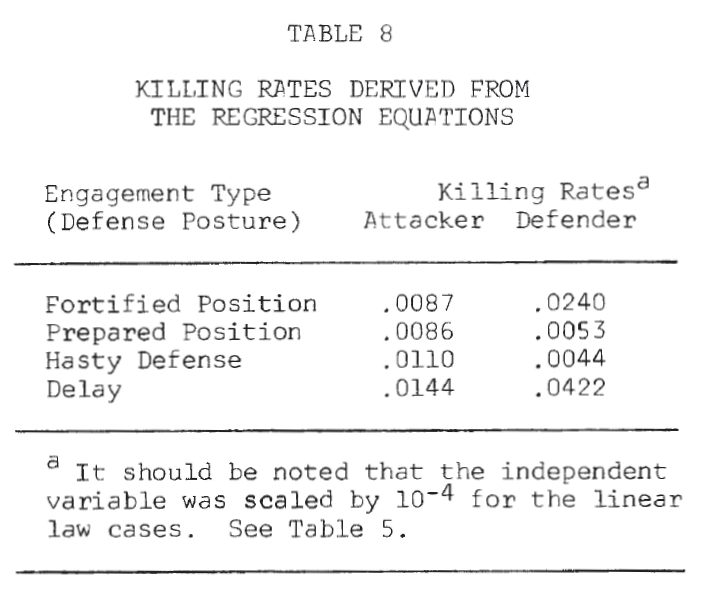

Table 8 summarizes the killing rates by defense posture. The defender has a much higher killing rate than the attacker (almost 3 to 1) in a fortified position. In a prepared position and hasty defense, the attacker appears to have the advantage. However, in a delaying action, the defender’s killing rate is again greater than the attacker’s (Note 17).

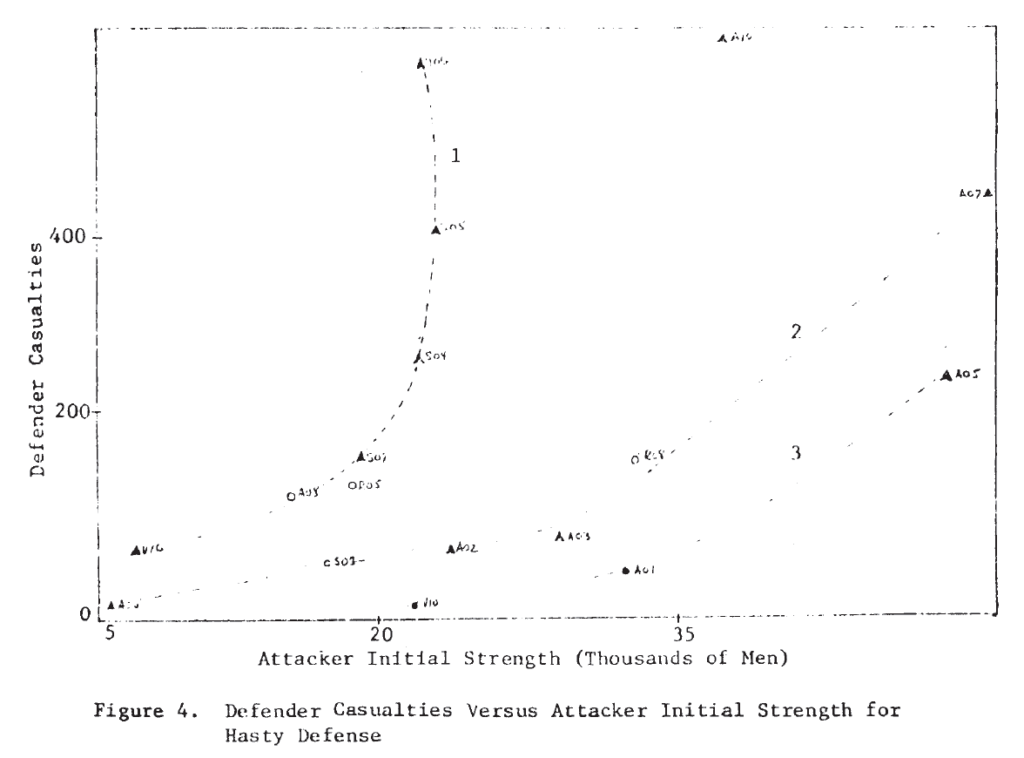

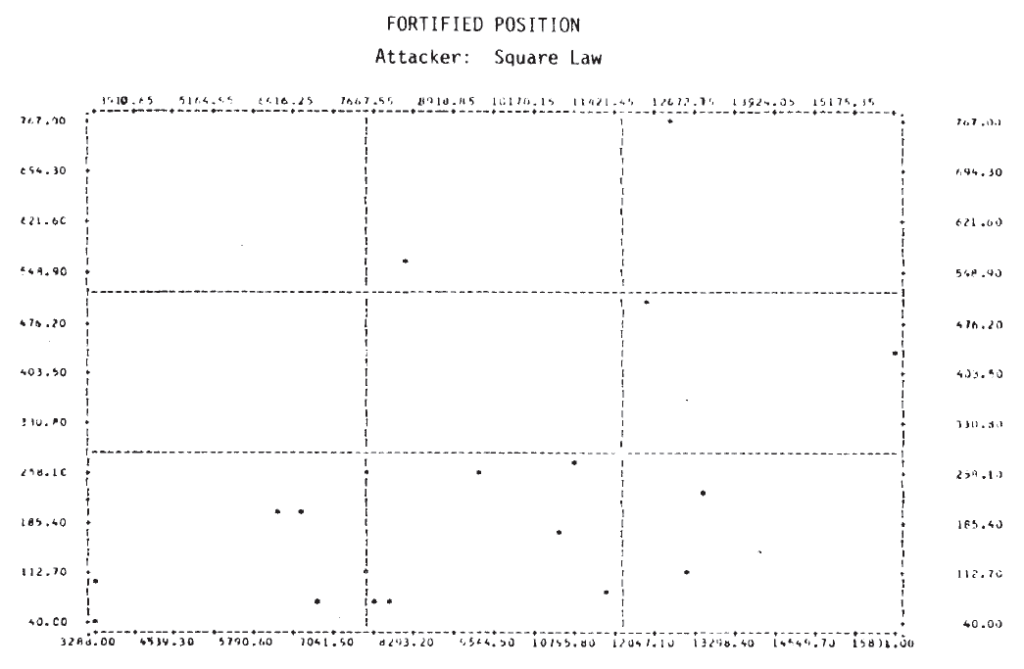

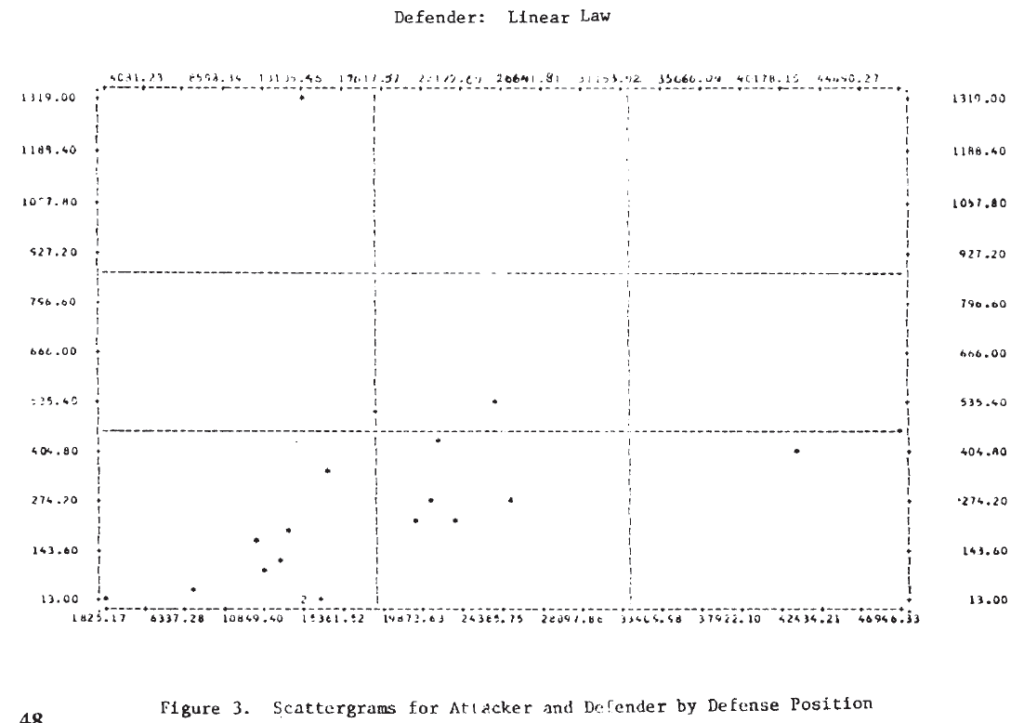

Figure 3 shows the scatterplots for these cases. Examination of these plots suggests that a tentative answer to the study question posed above might be: “Yes, casualties do appear to be related to the force sizes, but the relationship may not be a simple linear one.”

In several of these plots it appears that two or more functional forms may be involved. Consider, for example, the defender’s casualties as a function of the attacker’s initial strength in the case of a hasty defense. This plot is repeated in Figure 4, where the points appear to fit the curves sketched there. It would appear that there are at least two, possibly three, separate relationships. Also on that plot, the individual engagements have been identified, and it is interesting to note that on the curve marked (1), five of the seven attacks were made by Germans, four of them from the Salerno campaign. It would appear from this that German attacks are associated with higher than average defender casualties for the attacking force size. Since there are so few data points, this cannot be more than a hint or interesting suggestion.

Future Research

This work suggests two conclusions that might have an impact on future lines of research on combat dynamics:

- Tactics appear to be an important determinant of combat results. This conclusion, in itself, does not appear startling, at least not to the military. However, it does not always seem to have been the case that tactical questions have been considered seriously by analysts in their studies of the effects of varying force levels and force mixes.

- Historical data of this type offer rich opportunities for studying the effects of tactics. For example, consideration of the narrative accounts of these battles might permit re-coding the engagements into a larger, more sensitive set of engagement categories. (It would, of course, then be highly desirable to add more engagements to the data set.)

While predictions of the future are always dangerous, I would nevertheless like to suggest what appears to be a possible trend. While military analysis of the past two decades has focused almost exclusively on the hardware of weapons systems, at least part of our future analysis will be devoted to the more behavioral aspects of combat.

Janice Bloom Fain, a Senior Associate of CACI, Inc., is a physicist whose special interests are in the applications of computer simulation techniques to industrial and military operations; she is the author of numerous reports and articles in this field. This paper was presented by Dr. Fain at the Military Operations Research Symposium at Fort Eustis, Virginia.

NOTES

[1.] J. H. Engel, “A Verification of Lanchester’s Law,” Operations Research 2, 163-171 (1954).

[2.] For example, see R. L. Helmbold, “Some Observations on the Use of Lanchester’s Theory for Prediction,” Operations Research 12, 778-781 (1964); H. K. Weiss, “Lanchester-Type Models of Warfare,” Proceedings of the First International Conference on Operational Research, 82-98, ORSA (1957); H. K. Weiss, “Combat Models and Historical Data: The U.S. Civil War,” Operations Research 14, 750-790 (1966).

[3.] D. Willard, “Lanchester as a Force in History: An Analysis of Land Battles of the Years 1618-1905,” RAC-TD-74, Research Analysis Corporation (1962).

[4.] The method of computing the killing rates forced a fit at the beginning and end of the battles. See W. Fain, J. B. Fain, L. Feldman, and S. Simon, “Validation of Combat Models Against Historical Data,” Professional Paper No. 27, Center for Naval Analyses, Arlington, Virginia (1970).

[5.] HERO, “A Study of the Relationship of Tactical Air Support Operations to Land Combat, Appendix B, Historical Data Base.” Historical Evaluation and Research Organization, report prepared for the Defense Operational Analysis Establishment, U.K.T.S.D., Contract D-4052 (1971).

[6.] T. N. Dupuy, The Quantified Judgment Method of Analysis of Historical Combat Data, HERO Monograph (January 1973); HERO, “Statistical Inference in Analysis in Combat,” Annex F, Historical Data Research on Tactical Air Operations, prepared for Headquarters USAF, Assistant Chief of Staff for Studies and Analysis, Contract No. F-44620-70-C-0058 (1972).

[7.] The Operational Lethality Index (OLI) is a measure of weapon effectiveness developed by HERO.

[8.] Since Willard’s data did not indicate which side was the attacker, his force ratio is defined to be (larger force/smaller force). The HERO force ratio is (attacker/defender).

[9.] Since the criteria for success may have been rather different for the two sets of battles, this comparison may not be very meaningful.

[10.] This work includes more complex analysis in which the possibility that the two forces may be engaging in different types of combat is considered, leading to the use of two exponents rather than the single one. Stochastic combat processes are also treated.

[11.] Correlation coefficient = -.262; intercept = .00115; slope = -.594.

[12.] Correlation coefficient = -.184; intercept = .0539; slope = -.413.

[13.] Correlation coefficient = .303; intercept = -.638; slope = .466.

[14.] Correlation coefficients for the linear law are inflated with respect to the square law since the independent variable is a product of force sizes and, thus, has a higher variance than the single force size unit in the square law case.

[15.] This is a subjective judgment based on the following considerations. Since the correlation coefficient is inflated for the linear law, when it is lower the square law case is chosen. When the linear law correlation coefficient is higher, the case with the intercept closer to 0 is chosen.

[16.] S. J. Deitchman, “A Lanchester Model of Guerrilla Warfare,” Operations Research 10, 818-827 (1962).

[17.] As pointed out by Mr. Alan Washburn, who prepared a critique on this paper, when comparing numerical values of the square law and linear law killing rates, the differences in units must be considered. (See footnotes to Table 7).