Today’s edition of TDI Friday Read is a roundup of posts by TDI President Christopher Lawrence exploring the details of tank combat between German and Soviet forces at the Battle of Kursk in 1943. The prevailing historical interpretation of Kursk is of the Soviets using their material and manpower superiority to blunt and then overwhelm the German offensive. This view is often buttressed by looking at the ratio of the numbers of tanks destroyed in combat. Chris takes a deeper look at the data, the differences in the ways “destroyed” tanks were counted and reported, and the differing philosophies between the German and Soviet armies regarding damaged tank recovery and repair. This yields a much more nuanced perspective on the character of tank combat at Kursk that does not necessarily align with the prevailing historical interpretations. Historians often discount detailed observational data on combat as irrelevant or too difficult to collect and interpret. We at TDI believe that with history, the devil is always in the details.

Excellence in Historical Research and Analysis

Tag Force Ratios

What Multi-Domain Operations Wargames Are You Playing? [Updated]

[UPDATE] We had several readers recommend games they have used or would be suitable for simulating Multi-Domain Battle and Operations (MDB/MDO) concepts. These include several classic campaign-level board wargames:

The Next War (SPI, 1976)

NATO: The Next War in Europe (Victory Games, 1983)

For tactical level combat, there is Steel Panthers: Main Battle Tank (SSI/Shrapnel Games, 1996- )

There were also a couple of naval/air oriented games:

Asian Fleet (Kokusai-Tsushin Co., Ltd. (国際通信社) 2007, 2010)

Command: Modern Air Naval Operations (Matrix Games, 2014)

Are there any others folks are using out there?

A Mystics & Statistics reader wants to know what wargames are being used to simulate and explore Multi-Domain Battle and Operations (MDB/MDO) concepts.

There is a lot of MDB/MDO wargaming going on at all levels in the U.S. Department of Defense. Much of this appears to use existing models, simulations, and wargames, such as the U.S. Army Center for Army Analysis’s unclassified Wargaming Analysis Model (C-WAM).

Chris Lawrence recently looked at C-WAM and found that it uses a lot of traditional board wargaming elements, including methodologies for determining combat results, casualties, and breakpoints that have been found unable to replicate real-world outcomes (aka “The Base of Sand” problem).

There is also the wargame used by RAND to look at possible scenarios for a potential Russian invasion of the Baltic States.

What other wargames, models, and simulations are being used out there? Are there any commercial wargames incorporating MDB/MDO elements into their gameplay? What methodologies are being used to portray MDB/MDO effects?

Comparing Force Ratios to Casualty Exchange Ratios

Christopher A. Lawrence

[The article below is reprinted from the Summer 2009 edition of The International TNDM Newsletter.]

There are three versions of force ratio versus casualty exchange ratio rules, such as the three-to-one rule (3-to-1 rule), as it applies to casualties. The earliest version of the rule as it relates to casualties that we have been able to find comes from the 1958 version of the U.S. Army Maneuver Control manual, which states: “When opposing forces are in contact, casualties are assessed in inverse ratio to combat power. For friendly forces advancing with a combat power superiority of 5 to 1, losses to friendly forces will be about 1/5 of those suffered by the opposing force.”[1]

The RAND version of the rule (1992) states that: “the famous ‘3:1 rule’, according to which the attacker and defender suffer equal fractional loss rates at a 3:1 force ratio [if] the battle is in mixed terrain and the defender enjoys ‘prepared’ defenses…” [2]

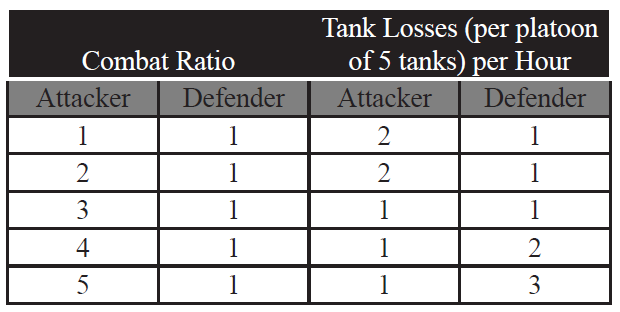

Finally, there is a version of the rule that dates from the 1967 Maneuver Control manual that only applies to armor that shows:

As the RAND construct also applies to equipment losses, then this formulation is directly comparable to the RAND construct.

Therefore, we have three basic versions of the 3-to-1 rule as it applies to casualties and/or equipment losses. First, there is a rule that states that there is an even fractional loss ratio at 3-to-1 (the RAND version). Second, there is a rule that states that at 3-to-1, the attacker will suffer one-third the losses of the defender. And third, there is a rule that states that at 3-to-1, the attacker will suffer the same losses as the defender. Furthermore, these examples are highly contradictory, with either the attacker suffering three times the losses of the defender, the attacker suffering the same losses as the defender, or the attacker suffering one-third the losses of the defender.

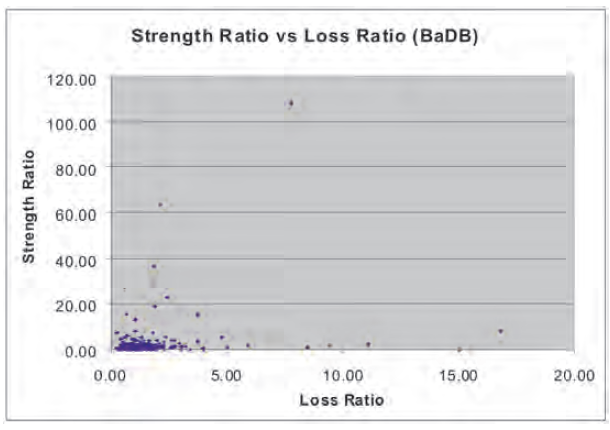

Therefore, what we will examine here is the relationship between force ratios and exchange ratios. In this case, we will first look at The Dupuy Institute’s Battles Database (BaDB), which covers 243 battles from 1600 to 1900. We will chart on the y-axis the force ratio as measured by a count of the number of people on each side of the forces deployed for battle. The force ratio is the number of attackers divided by the number of defenders. On the x-axis is the exchange ratio, which is measured by a count of the number of people on each side who were killed, wounded, missing or captured during that battle. It does not include disease and non-battle injuries. Again, it is calculated by dividing the total attacker casualties by the total defender casualties. The results are provided below:

As can be seen, there are a few extreme outliers among these 243 data points. The most extreme, the Battle of Tippermuir (1 Sep 1644), in which an English Royalist force under Montrose routed an attack by Scottish Covenanter militia, causing about 3,000 casualties to the Scots in exchange for a single (allegedly self-inflicted) casualty to the Royalists, was removed from the chart. This 3,000-to-1 loss ratio was deemed too great an outlier to be of value in the analysis.
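
The two ratios described above are simple quotients, and a minimal sketch may make the definitions concrete. The `Battle` record, the function names, the example strengths, and the cutoff value of 100 are all assumptions made for illustration; only the 3,000-to-1 casualty figure for the outlier comes from the text, and the actual BaDB layout is not shown here.

```python
from dataclasses import dataclass


@dataclass
class Battle:
    """Hypothetical record; field names are illustrative, not the BaDB schema."""
    name: str
    attacker_strength: int
    defender_strength: int
    attacker_casualties: int  # killed, wounded, missing, captured
    defender_casualties: int  # assumed non-zero in this sketch


def force_ratio(b: Battle) -> float:
    # Number of attackers divided by number of defenders.
    return b.attacker_strength / b.defender_strength


def exchange_ratio(b: Battle) -> float:
    # Total attacker casualties divided by total defender casualties.
    return b.attacker_casualties / b.defender_casualties


def drop_outliers(battles, max_exchange_ratio):
    # Keep only battles whose exchange ratio is at or below the cutoff.
    # The cutoff is an assumption; the article removed one case by judgment.
    return [b for b in battles if exchange_ratio(b) <= max_exchange_ratio]


# Invented strengths; only the 3,000-to-1 casualty pair echoes the text.
battles = [
    Battle("Hypothetical A", 30000, 20000, 4500, 3000),
    Battle("Hypothetical B", 12000, 24000, 2000, 6000),
    Battle("Hypothetical outlier", 6000, 3000, 3000, 1),
]

for b in drop_outliers(battles, max_exchange_ratio=100.0):
    print(f"{b.name}: force ratio {force_ratio(b):.2f}, "
          f"exchange ratio {exchange_ratio(b):.2f}")
```

Each remaining battle then contributes one point to the scattergram, with the force ratio on one axis and the exchange ratio on the other.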

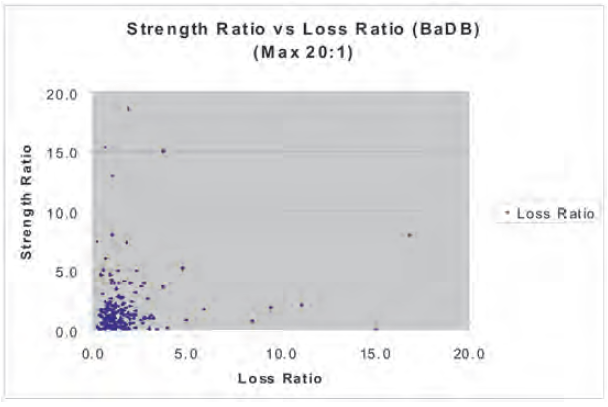

As it is, the vast majority of cases are clumped down into the corner of the graph with only a few scattered data points outside of that clumping. If one did try to establish some form of curvilinear relationship, one would end up drawing a hyperbola. It is worthwhile to look inside that clump of data to see what it shows. Therefore, we will look at the graph truncated so as to show only force ratios at or below 20-to-1 and exchange ratios at or below 20-to-1.

Again, the data remains clustered in one corner with the outlying data points again pointing to a hyperbola as the only real fitting curvilinear relationship. Let’s look a little deeper into the data by truncating the data at 6-to-1 for both force ratios and exchange ratios. As can be seen, if the RAND version of the 3-to-1 rule is correct, then the data should show at a 3-to-1 force ratio a 3-to-1 casualty exchange ratio. There is only one data point that comes close to this out of the 243 points we examined.

If the FM 105-5 version of the rule as it applies to armor is correct, then the data should show that at a 3-to-1 force ratio there is a 1-to-1 casualty exchange ratio, at a 4-to-1 force ratio a 1-to-2 casualty exchange ratio, and at a 5-to-1 force ratio a 1-to-3 casualty exchange ratio. Of course, there is no armor in these pre-WW I engagements, but again no such exchange pattern appears.

If the 1958 version of the FM 105-5 rule as it applies to casualties is correct, then the data should show that at a 3-to-1 force ratio there is a 0.33-to-1 casualty exchange ratio, at a 4-to-1 force ratio a 0.25-to-1 casualty exchange ratio, and at a 5-to-1 force ratio a 0.20-to-1 casualty exchange ratio. As can be seen, there is not much indication of this pattern, or for that matter any of the three patterns.
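
To see how far apart the three constructs are, their predicted exchange ratios (attacker casualties divided by defender casualties) can be tabulated side by side. The sketch below uses only the values quoted above; the function and table names are hypothetical. The RAND construct is encoded only at its 3-to-1 crossover, since equal fractional losses at 3-to-1 imply an exchange ratio of 3, and the quoted passage does not give values elsewhere.

```python
from fractions import Fraction


def exchange_1958(force_ratio: int) -> Fraction:
    # FM 105-5 (1958): casualties assessed in inverse ratio to combat power.
    return 1 / Fraction(force_ratio)


# FM 105-5 (1967), armor only: just the three points quoted in the text.
ARMOR_1967 = {3: Fraction(1, 1), 4: Fraction(1, 2), 5: Fraction(1, 3)}


def exchange_rand(force_ratio: int):
    # RAND (1992): equal fractional loss rates at 3:1, which means
    # attacker losses / defender losses = 3 at that force ratio.
    # Other force ratios are not specified in the quoted passage.
    return Fraction(3) if force_ratio == 3 else None


print("force ratio | 1958 | 1967 armor | RAND")
for fr in (3, 4, 5):
    print(f"{fr}-to-1 | {exchange_1958(fr)} | {ARMOR_1967[fr]} | {exchange_rand(fr)}")
```

At a 3-to-1 force ratio the three rules predict exchange ratios of 1/3, 1, and 3 respectively, which is the contradiction noted earlier.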

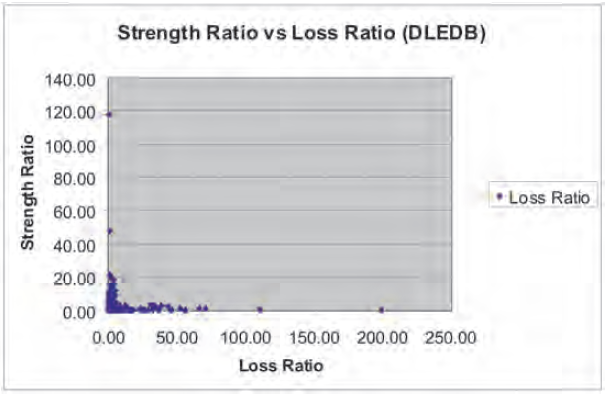

Still, such a construct may not be relevant to data before 1900. For example, Lanchester claimed in 1914 in Chapter V, “The Principle of Concentration,” of his book Aircraft in Warfare, that there is greater advantage to be gained in modern warfare from concentration of fire.[3] Therefore, we will tap our more modern Division-Level Engagement Database (DLEDB) of 675 engagements, of which 628 have force ratios and exchange ratios calculated for them. These 628 cases are then placed on a scattergram to see if we can detect any similar patterns.

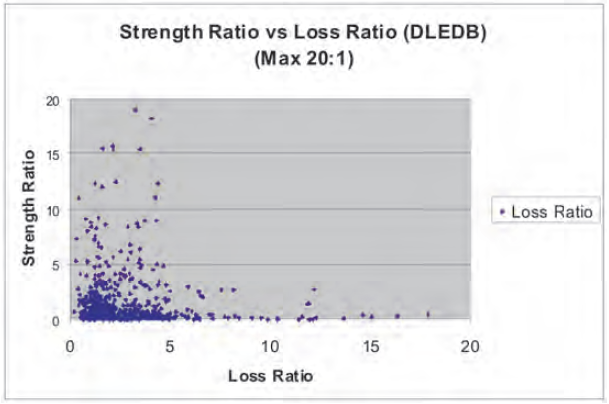

Even though this data covers from 1904 to 1991, with the vast majority of the data coming from engagements after 1940, one again sees the same pattern as with the data from 1600-1900. If there is a curvilinear relationship, it is again a hyperbola. As before, it is useful to look into the mass of data clustered into the corner by truncating the force and exchange ratios at 20-to-1. This produces the following:

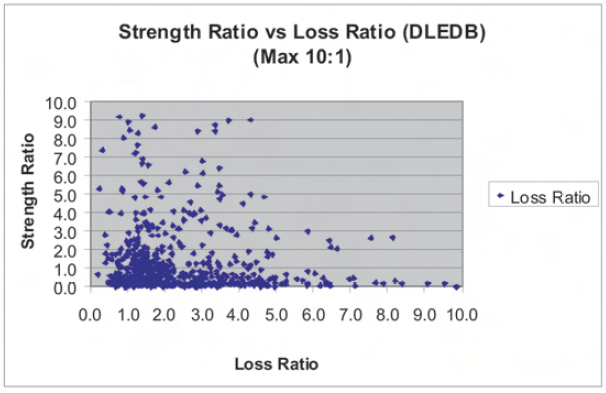

Again, one sees the data clustered in the corner, with any curvilinear relationship again being a hyperbola. A look at the data further truncated to a 10-to-1 force or exchange ratio does not yield anything more revealing.

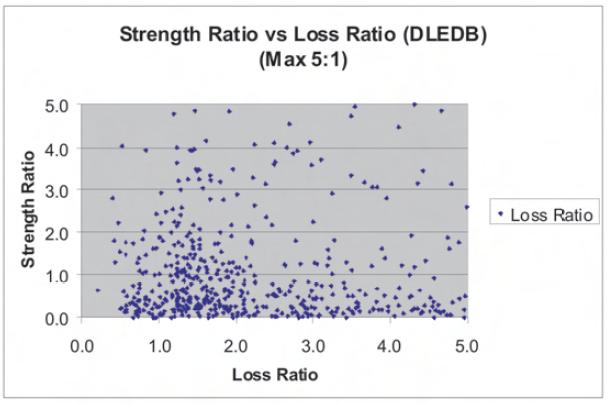

And, if this data is truncated to show only 5-to-1 force ratio and exchange ratios, one again sees:

Again, this data appears to be mostly just noise, with no clear patterns here that support any of the three constructs. In the case of the RAND version of the 3-to-1 rule, there is again only one data point (out of 628) that is anywhere close to the crossover point (even fractional exchange rate) that RAND postulates. In fact, it almost looks like the data conspires to make sure it leaves a noticeable “hole” at that point. The other postulated versions of the 3-to-1 rules are also given no support in these charts.
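
One way to make "close to the crossover point" checkable is to count the data points that fall within some distance of the (3, 3) position on the scattergram. The sketch below is an illustration only: the function name, the radius of 0.5, and the sample points are assumptions, and the article does not say what tolerance was used.

```python
import math


def near_crossover(points, center=(3.0, 3.0), radius=0.5):
    """Return the (force_ratio, exchange_ratio) points within `radius` of `center`.

    Both the radius and the use of straight-line distance are assumptions.
    """
    cx, cy = center
    return [p for p in points if math.hypot(p[0] - cx, p[1] - cy) <= radius]


# Invented sample points, not taken from the DLEDB.
sample = [(1.2, 0.8), (2.9, 3.2), (3.0, 0.9), (4.5, 0.4)]

hits = near_crossover(sample)
print(f"{len(hits)} of {len(sample)} points near the 3-to-1 crossover: {hits}")
```

If the RAND construct held, one would expect engagements at roughly 3-to-1 force ratios to cluster around this point rather than avoid it.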

Also of note is that the relationship between force ratios and exchange ratios does not appear to significantly change for combat during 1600-1900 when compared to the data from combat from 1904-1991. This does not provide much support for the intellectual construct developed by Lanchester to argue for his N-square law.
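
For readers unfamiliar with it, the N-square law is usually stated as a pair of coupled attrition equations. The notation below is the conventional textbook form, not something taken from this article: $A$ and $B$ are the two sides' strengths, $A_0$ and $B_0$ their initial values, and $\alpha$ and $\beta$ their per-unit effectiveness.

```latex
\frac{dA}{dt} = -\beta B, \qquad \frac{dB}{dt} = -\alpha A
\quad\Longrightarrow\quad
\alpha\left(A_0^2 - A^2\right) = \beta\left(B_0^2 - B^2\right)
```

Under these assumptions a side's fighting strength scales with the square of its numbers, so the exchange ratio should improve sharply for the larger force as the force ratio grows. That is the kind of pattern the scattergrams above fail to show.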

While we can attempt to torture the data to find a better fit, or can try to argue that the patterns are obscured by various factors that have not been considered, we do not believe that such a clear pattern and relationship exists. More advanced mathematical methods may show such a pattern, but to date such attempts have not ferreted out these alleged patterns. For example, we refer the reader to Janice Fain’s article on Lanchester equations, The Dupuy Institute’s Capture Rate Study, Phase I & II, or any number of other studies that have looked at Lanchester.[4]

The fundamental problem is that there does not appear to be a direct cause and effect between force ratios and exchange ratios. It appears to be an indirect relationship in the sense that force ratios are one of several independent variables that determine the outcome of an engagement, and the nature of that outcome helps determine the casualties. As such, there is a more complex set of interrelationships that have not yet been fully explored in any study that we know of, although it is briefly addressed in our Capture Rate Study, Phase I & II.

NOTES

[1] FM 105-5, Maneuver Control (1958), 80.

[2] Patrick Allen, “Situational Force Scoring: Accounting for Combined Arms Effects in Aggregate Combat Models,” (N-3423-NA, The RAND Corporation, Santa Monica, CA, 1992), 20.

[3] F. W. Lanchester, Aircraft in Warfare: The Dawn of the Fourth Arm (Lanchester Press Incorporated, Sunnyvale, Calif., 1995), 46-60. One notes that Lanchester provided no data to support these claims, but relied upon an intellectual argument based upon a gross misunderstanding of ancient warfare.

[4] In particular, see page 73 of Janice B. Fain, “The Lanchester Equations and Historical Warfare: An Analysis of Sixty World War II Land Engagements,” Combat Data Subscription Service (HERO, Arlington, Va., Spring 1975).

The Great 3-1 Rule Debate

[This piece was originally posted on 13 July 2016.]

Trevor Dupuy’s article cited in my previous post, “Combat Data and the 3:1 Rule,” was the final salvo in a roaring, multi-year debate between two highly regarded members of the U.S. strategic and security studies academic communities, political scientist John Mearsheimer and military analyst/polymath Joshua Epstein. Carried out primarily in the pages of the academic journal International Security, Epstein and Mearsheimer argued the validity of the 3-1 rule and other analytical models with respect to the NATO/Warsaw Pact military balance in Europe in the 1980s. Epstein cited Dupuy’s empirical research in support of his criticism of Mearsheimer’s reliance on the 3-1 rule. In turn, Mearsheimer questioned Dupuy’s data and conclusions to refute Epstein. Dupuy’s article defended his research and pointed out the errors in Mearsheimer’s assertions. With the publication of Dupuy’s rebuttal, the International Security editors called a time out on the debate thread.

The Epstein/Mearsheimer debate was itself part of a larger political debate over U.S. policy toward the Soviet Union during the administration of Ronald Reagan. This interdisciplinary argument, which has since become legendary in security and strategic studies circles, drew in some of the biggest names in these fields, including Eliot Cohen, Barry Posen, the late Samuel Huntington, and Stephen Biddle. As Jeffery Friedman observed,

These debates played a prominent role in the “renaissance of security studies” because they brought together scholars with different theoretical, methodological, and professional backgrounds to push forward a cohesive line of research that had clear implications for the conduct of contemporary defense policy. Just as importantly, the debate forced scholars to engage broader, fundamental issues. Is “military power” something that can be studied using static measures like force ratios, or does it require a more dynamic analysis? How should analysts evaluate the role of doctrine, or politics, or military strategy in determining the appropriate “balance”? What role should formal modeling play in formulating defense policy? What is the place for empirical analysis, and what are the strengths and limitations of existing data?[1]

It is well worth the time to revisit the contributions to the 1980s debate. I have included a bibliography below that is not exhaustive, but is a place to start. The collapse of the Soviet Union and the end of the Cold War diminished the intensity of the debates, which simmered through the 1990s and then were obscured during the counterterrorism/counterinsurgency conflicts of the post-9/11 era. It is possible that the challenges posed by China and Russia amidst the ongoing “hybrid” conflict in Syria and Iraq may revive interest in interrogating the bases of military analyses in the U.S. and the West. It is a discussion that is long overdue and potentially quite illuminating.

NOTES

[1] Jeffery A. Friedman, “Manpower and Counterinsurgency: Empirical Foundations for Theory and Doctrine,” Security Studies 20 (2011)

BIBLIOGRAPHY

(Note: Some of these are behind paywalls, but some are available in PDF format. Mearsheimer has made many of his publications freely available here.)

John J. Mearsheimer, “Why the Soviets Can’t Win Quickly in Central Europe,” International Security, Vol. 7, No. 1 (Summer 1982)

Samuel P. Huntington, “Conventional Deterrence and Conventional Retaliation in Europe,” International Security 8, no. 3 (Winter 1983/84)

Joshua Epstein, Strategy and Force Planning (Washington, DC: Brookings, 1987)

Joshua M. Epstein, “Dynamic Analysis and the Conventional Balance in Europe,” International Security 12, no. 4 (Spring 1988)

John J. Mearsheimer, “Numbers, Strategy, and the European Balance,” International Security 12, no. 4 (Spring 1988)

Stephen Biddle, “The European Conventional Balance,” Survival 30, no. 2 (March/April 1988)

Eliot A. Cohen, “Toward Better Net Assessment: Rethinking the European Conventional Balance,” International Security Vol. 13, No. 1 (Summer 1988)

Joshua M. Epstein, “The 3:1 Rule, the Adaptive Dynamic Model, and the Future of Security Studies,” International Security 13, no. 4 (Spring 1989)

John J. Mearsheimer, “Assessing the Conventional Balance,” International Security 13, no. 4 (Spring 1989)

John J. Mearsheimer, Barry R. Posen, Eliot A. Cohen, “Correspondence: Reassessing Net Assessment,” International Security 13, No. 4 (Spring 1989)

Trevor N. Dupuy, “Combat Data and the 3:1 Rule,” International Security 14, no. 1 (Summer 1989)

Stephen Biddle et al., Defense at Low Force Levels (Alexandria, VA: Institute for Defense Analyses, 1991)

Force Ratios in Conventional Combat

This post is a partial response to questions from one of our readers (Stilzkin). On the subject of force ratios in conventional combat….I know of no detailed discussion on the phenomenon published to date. It was clearly addressed by Clausewitz. For example:

At Leuthen Frederick the Great, with about 30,000 men, defeated 80,000 Austrians; at Rossbach he defeated 50,000 allies with 25,000 men. These however are the only examples of victories over an opponent two or even nearly three times as strong. Charles XII at the battle of Narva is not in the same category. The Russians at that time could hardly be considered as Europeans; moreover, we know too little about the main features of that battle. Bonaparte commanded 120,000 men at Dresden against 220,000, not quite half. At Kolin, Frederick the Great’s 30,000 men could not defeat 50,000 Austrians; similarly, victory eluded Bonaparte at the desperate battle of Leipzig, though with his 160,000 men against 280,000, his opponent was far from being twice as strong.

These examples may show that in modern Europe even the most talented general will find it very difficult to defeat an opponent twice his strength. When we observe that the skill of the greatest commanders may be counterbalanced by a two-to-one ratio in the fighting forces, we cannot doubt that superiority in numbers (it does not have to be more than double) will suffice to assure victory, however adverse the other circumstances.

and:

If we thus strip the engagement of all the variables arising from its purpose and circumstance, and disregard the fighting value of the troops involved (which is a given quantity), we are left with the bare concept of the engagement, a shapeless battle in which the only distinguishing factor is the number of troops on either side.

These numbers, therefore, will determine victory. It is, of course, evident from the mass of abstractions I have made to reach this point that superiority of numbers in a given engagement is only one of the factors that determines victory. Superior numbers, far from contributing everything, or even a substantial part, to victory, may actually be contributing very little, depending on the circumstances.

But superiority varies in degree. It can be two to one, or three or four to one, and so on; it can obviously reach the point where it is overwhelming.

In this sense superiority of numbers admittedly is the most important factor in the outcome of an engagement, as long as it is great enough to counterbalance all other contributing circumstances. It thus follows that as many troops as possible should be brought into the engagement at the decisive point.

And, in relation to making a combat model:

Numerical superiority was a material factor. It was chosen from all elements that make up victory because, by using combinations of time and space, it could be fitted into a mathematical system of laws. It was thought that all other factors could be ignored if they were assumed to be equal on both sides and thus cancelled one another out. That might have been acceptable as a temporary device for the study of the characteristics of this single factor; but to make the device permanent, to accept superiority of numbers as the one and only rule, and to reduce the whole secret of the art of war to a formula of numerical superiority at a certain time and a certain place was an oversimplification that would not have stood up for a moment against the realities of life.

Force ratios were discussed in various versions of FM 105-5 Maneuver Control, but as far as I can tell, this material was not analytically developed. It was a set of rules, pulled together by a group of anonymous writers for the sake of being able to adjudicate wargames.

The only detailed quantification of force ratios was provided in Numbers, Predictions and War by Trevor Dupuy. Again, these were modeling constructs, not something that was analytically developed (although there was significant background research done and the model was validated multiple times). He then discusses the subject in his book Understanding War, which I consider the most significant book of the 90+ that he wrote or co-authored.

The only analytically based discussion of force ratios that I am aware of (or at least can think of at this moment) is my discussion in my upcoming book War by Numbers: Understanding Conventional Combat. It is the second chapter of the book: https://dupuyinstitute.dreamhosters.com/2016/02/17/war-by-numbers-iii/

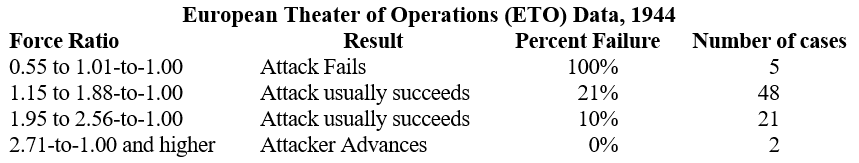

In this book, I assembled the force ratios required to win a battle based upon a large number of cases from World War II division-level combat. For example (page 18 of the manuscript):

I did this for the ETO, for the battles of Kharkov and Kursk (Eastern Front 1943, divided by when the Germans are attacking and when the Soviets are attacking) and for PTO (Manila and Okinawa 1945).

There is more that can be done on this, and we do have the data assembled to do this, but as always, I have not gotten around to it. This is why I am already considering a War by Numbers II, as I am already thinking about all the subjects I did not cover in sufficient depth in my first book.

Questioning The Validity Of The 3-1 Rule Of Combat

How many troops are needed to successfully attack or defend on the battlefield? There is a long-standing rule of thumb that holds that an attacker requires a 3-1 preponderance over a defender in combat in order to win. The aphorism is so widely accepted that few have questioned whether it is actually true or not.

Trevor Dupuy challenged the validity of the 3-1 rule on empirical grounds. He could find no historical substantiation to support it. In fact, his research on the question of force ratios suggested that there was a limit to the value of numerical preponderance on the battlefield.

TDI President Chris Lawrence has also challenged the 3-1 rule in his own work on the subject.

The validity of the 3-1 rule is no mere academic question. It underpins a great deal of U.S. military policy and warfighting doctrine. Yet, the only time the matter was seriously debated was in the 1980s with reference to the problem of defending Western Europe against the threat of Soviet military invasion.

It is probably long past due to seriously challenge the validity and usefulness of the 3-1 rule again.

The Origins Of The U.S. Army’s Concept Of Combat Power

In a previous post, I critiqued the existing U.S. Army doctrinal method for calculating combat power. The ideas associated with the term “combat power” have been a part of U.S. Army doctrine since the 1920s. However, the Army did not specifically define what combat power actually meant until the 1982 edition of FM 100-5 Operations, which introduced the AirLand Battle concept. So where did the Army’s notion of the concept originate? This post will trace the way it has been addressed in the capstone Field Manual (FM) 100-5 Operations series.

As then-U.S. Army Major David Boslego explained in a 1995 School of Advanced Military Studies (SAMS) thesis[1], the Army’s original idea of combat power most likely derived from the work of British military theorist J.F.C. Fuller. In the late 1910s and early 1920s, Fuller articulated the first modern definitions of the principles of war, which he developed from his conception of force on the battlefield as something more than just the tangible effects of shock and firepower. Fuller’s principles were adopted in the 1920 edition of the British Army Field Service Regulations (FSR), which was the likely vector of influence on the U.S. Army’s 1923 FSR. While the term “combat power” does not appear in the 1923 FSR, the influence of Fullerian thinking is evident.

The first use of the phrase itself by the Army can be found in the 1939 edition of FM 100-5 Tentative Field Service Regulations, Operations, which replaced and updated the 1923 FSR. It appears just twice and was not explicitly defined in the text. As Boslego noted, however, even then the use of the term

highlighted a holistic view of combat power. This power was the sum of all factors which ultimately affected the ability of the soldiers to accomplish the mission. Interestingly, the authors of the 1939 edition did not focus solely on the physical objective of destroying the enemy. Instead, they sought to break the enemy’s power of resistance which connotes moral as well as physical factors.

This basic, implied definition of combat power as a combination of interconnected tangible physical and intangible moral factors could be found in all successive editions of FM 100-5 through 1968. The type and character of the factors comprising combat power evolved along with the Army’s experience of combat through this period, however. In addition to leadership, mobility, and firepower, the 1941 edition of FM 100-5 included “better armaments and equipment,” which reflected the Army’s initial impressions of the early “blitzkrieg” battles of World War II.

From World War II Through Korea

While FM 100-5 (1944) and FM 100-5 (1949) made no real changes with respect to describing combat power, the 1954 edition introduced significant new ideas in the wake of major combat operations in Korea, albeit still without actually defining the term. As with its predecessors, FM 100-5 (1954) posited combat power as a combination of firepower, maneuver, and leadership. For the first time, it defined the principles of mass, unity of command, maneuver, and surprise in terms of combat power. It linked the principle of the offensive, “only offensive action achieves decisive results,” with the enduring dictum that “offensive action requires the concentration of superior combat power at the decisive point and time.”

Boslego credited the authors of FM 100-5 (1954) with recognizing the non-linear nature of warfare and advising commanders to take a holistic perspective. He observed that they introduced the subtle but important understanding of combat power not as a fixed value, but as something relative and interactive between two forces in battle. Any calculation of combat power would be valid only in relation to the opposing combat force. “Relative combat power is dynamic and can be directly influenced by opposing commanders. It therefore must be analyzed by the commander in its potential relation to all other factors.” One of the fundamental ways a commander could shift the balance of combat power against an enemy was through maneuver: “Maneuver must be used to alter the relative combat power of military forces.”

[As I mentioned in a previous post, Trevor Dupuy considered FM 100-5 (1954)’s list and definitions of the principles of war to be the best version.]

Into the “Pentomic Era”

The 1962 edition of FM 100-5 supplied a general definition of combat power that articulated the way the Army had been thinking about it since 1939.

Combat power is a combination of the physical means available to a commander and the moral strength of his command. It is significant only in relation to the combat power of the opposing forces. In applying the principles of war, the development and application of combat power are essential to decisive results.

It further refined the elements of combat power by redefining the principles of economy of force and security in terms of it as well.

By the early 1960s, however, the Army’s thinking about force on the battlefield was dominated by the prospect of the use of nuclear weapons. As Boslego noted, both FM 100-5 (1962) and FM 100-5 (1968)

dwelt heavily on the importance of dispersing forces to prevent major losses from a single nuclear strike, being highly mobile to mass at decisive points and being flexible in adjusting forces to the current situation. The terms dispersion, flexibility, and mobility were repeated so frequently in speeches, articles, and congressional testimony, that…they became a mantra. As a result, there was a lack of rigor in the Army concerning what they meant in general and how they would be applied on the tactical battlefield in particular.

The only change the 1968 edition made was to expand the elements of combat power to include “firepower, mobility, communications, condition of equipment, and status of supply,” which presaged an increasing focus on the technological aspects of combat and warfare.

The first major modification in the way the Army thought about combat power since before World War II was reflected in FM 100-5 (1976). These changes in turn prompted a significant reevaluation of the concept by then-U.S. Army Major Huba Wass de Czege. I will tackle how this resulted in the way combat power was redefined in the 1982 edition of FM 100-5 in a future post.

Notes

[1] David V. Boslego, “The Relationship of Information to the Relative Combat Power Model in Force XXI Engagements,” School of Advanced Military Studies Monograph, U.S. Army Command and General Staff College, Fort Leavenworth, Kansas, 1995.

Are There Only Three Ways of Assessing Military Power?

[This article was originally posted on 11 October 2016]

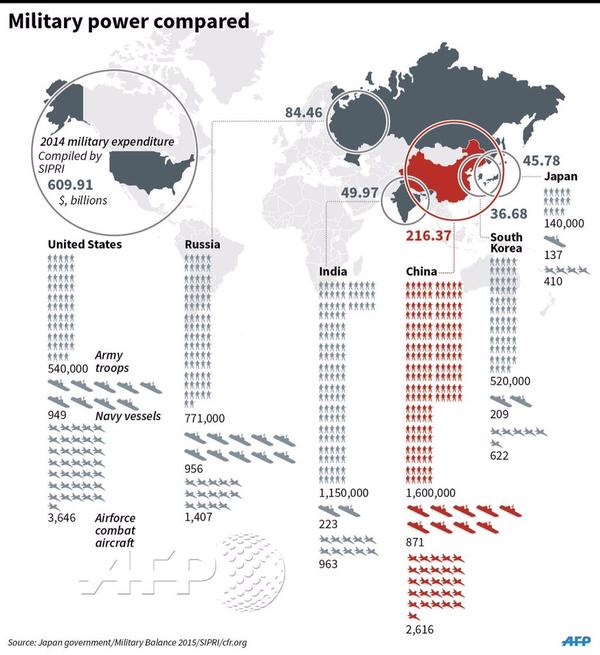

In 2004, military analyst and academic Stephen Biddle published Military Power: Explaining Victory and Defeat in Modern Battle, a book that addressed the fundamental question of what causes victory and defeat in battle. Biddle took to task the study of the conduct of war, which he asserted was based on “a weak foundation” of empirical knowledge. He surveyed the existing literature on the topic and determined that the plethora of theories of military success or failure fell into one of three analytical categories: numerical preponderance, technological superiority, or force employment.

Numerical preponderance theories explain victory or defeat in terms of material advantage, with the winners possessing greater numbers of troops, populations, economic production, or financial expenditures. Many of these involve gross comparisons of numbers, but some of the more sophisticated analyses involve calculations of force density, force-to-space ratios, or measurements of quality-adjusted “combat power.” Notions of threshold “rules of thumb,” such as the 3-1 rule, arise from this. These sorts of measurements form the basis for many theories of power in the study of international relations.

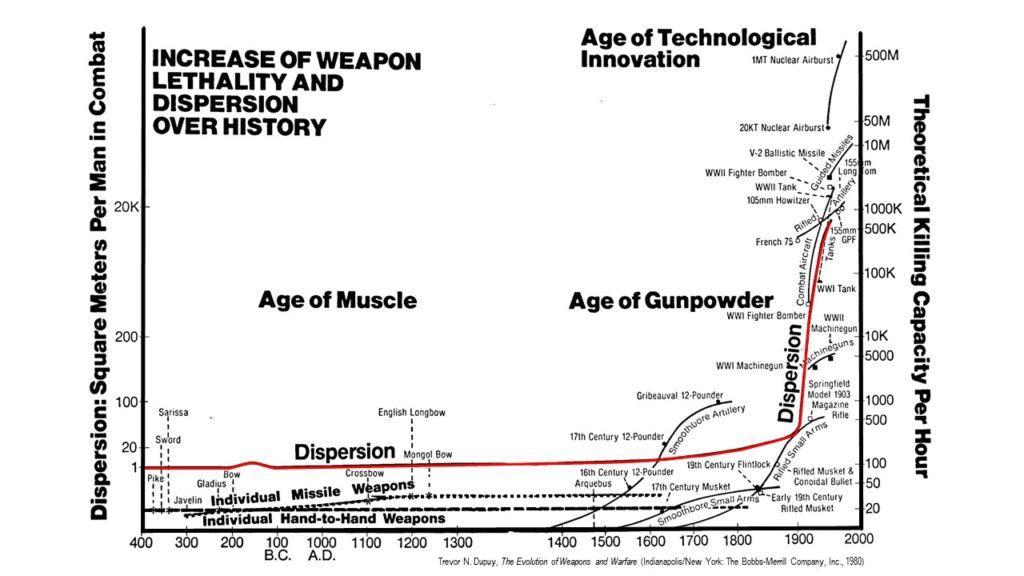

The next most influential means of assessment, according to Biddle, involve views on the primacy of technology. One school, systemic technology theory, looks at how technological advances shift balances within the international system. The best example of this is how the introduction of machine guns in the late 19th century shifted the advantage in combat to the defender, and the development of the tank in the early 20th century shifted it back to the attacker. Such measures are influential in international relations and political science scholarship.

The other school of technological determinacy is dyadic technology theory, which looks at relative advantages between states regardless of posture. This usually involves detailed comparisons of specific weapons systems, tanks, aircraft, infantry weapons, ships, missiles, etc., with the edge going to the more sophisticated and capable technology. The use of Lanchester theory in operations research and combat modeling is rooted in this thinking.

Biddle identified the third category of assessment as subjective assessments of force employment based on non-material factors including tactics, doctrine, skill, experience, morale or leadership. Analyses on these lines are the stock-in-trade of military staff work, military historians, and strategic studies scholars. However, international relations theorists largely ignore force employment and operations research combat modelers tend to treat it as a constant or omit it because they believe its effects cannot be measured.

The common weakness of all of these approaches, Biddle argued, is that “there are differing views, each intuitively plausible but none of which can be considered empirically proven.” For example, no one has yet been able to find empirical support substantiating the validity of the 3-1 rule or Lanchester theory. Biddle notes that the track record for predictions based on force employment analyses has also been “poor.” (To be fair, the problem of testing theory to see if it applies to the real world is not limited to assessments of military power; it afflicts security and strategic studies generally.)

So, is Biddle correct? Are there only three ways to assess military outcomes? Are they valid? Can we do better?

Scoring Weapons And Aggregation In Trevor Dupuy’s Combat Models

[The article below is reprinted from the October 1997 edition of The International TNDM Newsletter.]

Consistent Scoring of Weapons and Aggregation of Forces:

The Cornerstone of Dupuy’s Quantitative Analysis of Historical Land Battles

by

James G. Taylor, PhD,

Dept. of Operations Research, Naval Postgraduate School

Introduction

Col. Trevor N. Dupuy was an American original, especially as regards the quantitative study of warfare. As with many prophets, he was not entirely appreciated in his own land, particularly its Military Operations Research (OR) community. However, after becoming rather familiar with the details of his mathematical modeling of ground combat based on historical data, I became aware of the basic scientific soundness of his approach. Unfortunately, his documentation of methodology was not always accepted by others, many of whom appeared to confuse lack of mathematical sophistication in his documentation with lack of scientific validity of his basic methodology.

The purpose of this brief paper is to review the salient points of Dupuy’s methodology from a system’s perspective, i.e., to view his methodology as a system, functioning as an organic whole to capture the essence of past combat experience (with an eye towards extrapolation into the future). The advantage of this perspective is that it immediately leads one to the conclusion that if one wants to use some functional relationship derived from Dupuy’s work, then one should use his methodologies for scoring weapons, aggregating forces, and adjusting for operational circumstances, since this consistency is the only guarantee of being able to reproduce historical results and to project them into the future.

Implications (of this system’s perspective on Dupuy’s work) for current DOD models will be discussed. In particular, the Military OR community has developed quantitative methods for imputing values to weapon systems based on their attrition capability against opposing forces and force interactions.[1] One such approach is the so-called antipotential-potential method[2] used in TACWAR[3] to score weapons. However, one should not expect such scores to provide valid casualty estimates when combined with historically derived functional relationships such as the so-called ATLAS casualty-rate curves[4] used in TACWAR, because a different “yard-stick” (i.e. measuring system for estimating the relative combat potential of opposing forces) was used to develop such a curve.

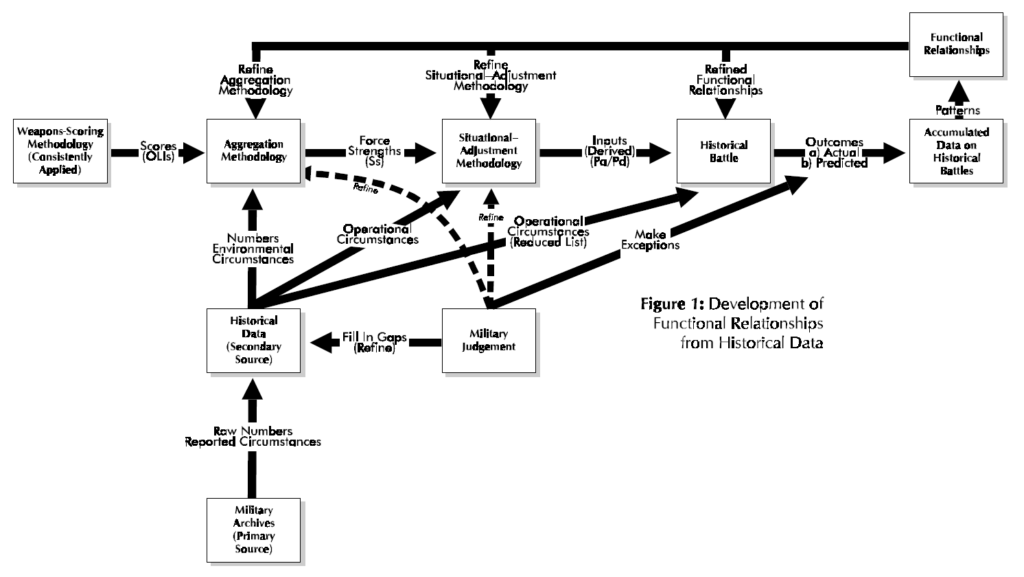

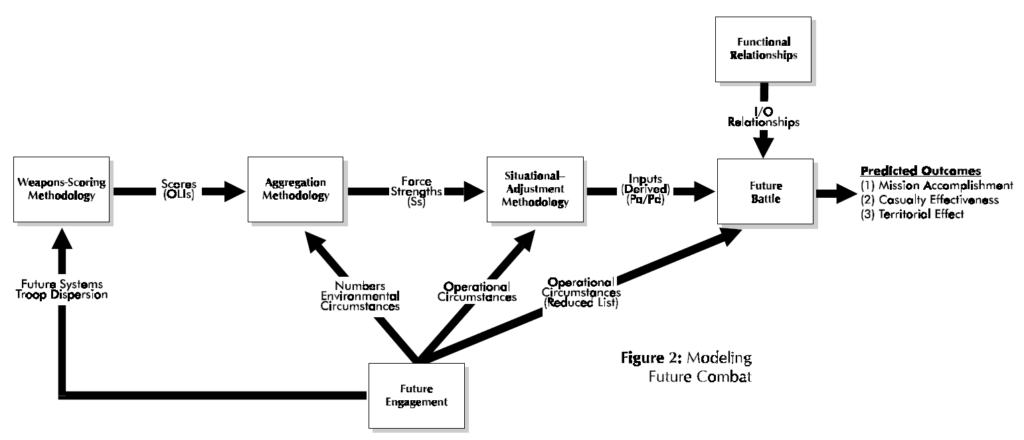

Overview of Dupuy’s Approach

This section briefly outlines the salient features of Dupuy’s approach to the quantitative analysis and modeling of ground combat as embodied in his Tactical Numerical Deterministic Model (TNDM) and its predecessor the Quantified Judgment Model (QJM). The interested reader can find details in Dupuy [1979] (see also Dupuy [1985][5], [1987], [1990]). Here we will view Dupuy’s methodology from a system approach, which seeks to discern its various components and their interactions and to view these components as an organic whole. Essentially Dupuy’s approach involves the development of functional relationships from historical combat data (see Fig. 1) and then using these functional relationships to model future combat (see Fig. 2).

At the heart of Dupuy’s method is the investigation of historical battles and comparing the relationship of inputs (as quantified by relative combat power, denoted as Pa/Pd for that of the attacker relative to that of the defender in Fig. 1) (e.g. see Dupuy [1979, pp. 59-64]) to outputs (as quantified by extent of mission accomplishment, casualty effectiveness, and territorial effectiveness; see Fig. 2) (e.g. see Dupuy [1979, pp. 47-50]). The salient point is that within this scheme, the main input[6] (i.e. relative combat power) to a historical battle is a derived quantity. It is computed from formulas that involve three essential aspects: (1) the scoring of weapons (e.g. see Dupuy [1979, Chapter 2 and also Appendix A]), (2) aggregation methodology for a force (e.g. see Dupuy [1979, pp. 43-46 and 202-203]), and (3) situational-adjustment methodology for determining the relative combat power of opposing forces (e.g. see Dupuy [1979, pp. 46-47 and 203-204]). In the force-aggregation step the effects on weapons of Dupuy’s environmental variables and one operational variable (air superiority) are considered[7], while in the situation-adjustment step the effects on forces of his behavioral variables[8] (aggregated into a single factor called the relative combat effectiveness value (CEV)) and also the other operational variables are considered (Dupuy [1987, pp. 86-89]).

Moreover, any functional relationships developed by Dupuy depend (unless shown otherwise) on his computational system for derived quantities, namely OLIs, force strengths, and relative combat power. Thus, Dupuy’s results depend in an essential manner on his overall computational system described immediately above. Consequently, any such functional relationship (e.g. casualty-rate curve) directly or indirectly derivative from Dupuy’s work should still use his computational methodology for determination of independent-variable values.

Fig. 1 also reveals another important aspect of Dupuy’s work, the development of reliable data on historical battles. Military judgment plays an essential role in this development of such historical data for a variety of reasons. Dupuy was essentially the only source of new secondary historical data developed from primary sources (see McQuie [1970] for further details). These primary sources are well known to be both incomplete and inconsistent, so that military judgment must be used to fill in the many gaps and reconcile observed inconsistencies. Moreover, military judgment also generates the working hypotheses for model development (e.g. identification of significant variables).

At the heart of Dupuy’s quantitative investigation of historical battles and subsequent model development is his own weapons-scoring methodology, which slowly evolved out of study efforts by the Historical Evaluation Research Organization (HERO) and its successor organizations (cf. HERO [1967] and compare with Dupuy [1979]). Early HERO [1967, pp. 7-8] work revealed that what one would today call weapons scores developed by other organizations were so poorly documented that HERO had to create its own methodology for developing the relative lethality of weapons, which eventually evolved into Dupuy’s Operational Lethality Indices (OLIs). Dupuy realized that his method was arbitrary (as indeed is its counterpart, called the operational definition, in formal scientific work), but felt that this would be ameliorated if the weapons-scoring methodology be consistently applied to historical battles. Unfortunately, this point is not clearly stated in Dupuy’s formal writings, although it was clearly (and compellingly) made by him in numerous briefings that this author heard over the years.

In other words, from a system’s perspective, the functional relationships developed by Colonel Dupuy are part of his analysis system that includes this weapons-scoring methodology consistently applied (see Fig. 1 again). The derived functional relationships do not stand alone (unless further empirical analysis shows them to hold for any weapons-scoring methodology), but function in concert with computational procedures. Another essential part of this system is Dupuy’s aggregation methodology, which combines numbers, environmental circumstances, and weapons scores to compute the strength (S) of a military force. A key innovation by Colonel Dupuy [1979, pp. 202-203] was to use a nonlinear (more precisely, a piecewise-linear) model for certain elements of force strength. This innovation precluded the occurrence of military absurdities such as air firepower being fully substitutable for ground firepower, antitank weapons being fully effective when armor targets are lacking, etc. The final part of this computational system is Dupuy’s situational-adjustment methodology, which combines the effects of operational circumstances with force strengths to determine relative combat power, e.g. Pa/Pd.

To recapitulate, the determination of an Operational Lethality Index (OLI) for a weapon involves the combination of weapon lethality, quantified in terms of a Theoretical Lethality Index (TLI) (e.g. see Dupuy [1987, p. 84]), and troop dispersion[9] (e.g. see Dupuy [1987, pp. 84-85]). Weapons scores (i.e. the OLIs) are then combined with numbers (own side and enemy) and combat-environment factors to yield force strength. Six[10] different categories of weapons are aggregated, with nonlinear (i.e. piecewise-linear) models being used for the following three categories of weapons: antitank, air defense, and air firepower (i.e. close-air support). Operational, e.g. mobility, posture, surprise, etc. (Dupuy [1987, p. 87]), and behavioral variables (quantified as a relative combat effectiveness value (CEV)) are then applied to force strength to determine a side’s combat-power potential.
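The chain of computations recapitulated above (weapon scores, then force strength, then combat power) can be sketched in Python. This is only an illustration of the structure. The function names, the multiplicative form, the cap used to stand in for the piecewise-linear categories, and every number are assumptions made for the example; they are not Dupuy's published formulas or OLI values.

```python
from dataclasses import dataclass

# Categories the text says are aggregated with piecewise-linear models.
CAPPED = ("antitank", "air_defense", "air_firepower")


@dataclass
class WeaponGroup:
    category: str   # e.g. "infantry", "armor", "air_firepower"
    count: int      # number of weapons of this type
    oli: float      # weapon score (hypothetical value, not a real OLI)


def force_strength(groups, env_factor=1.0, cap_fraction=0.5):
    """Aggregate weapon scores into a force strength S.

    Uncapped categories add linearly. Capped categories add linearly
    only up to an ASSUMED ceiling (cap_fraction of the uncapped total)
    and contribute nothing beyond it, which is one simple way to get a
    piecewise-linear response.
    """
    base = sum(g.count * g.oli for g in groups if g.category not in CAPPED)
    total = base
    for g in groups:
        if g.category in CAPPED:
            total += min(g.count * g.oli, cap_fraction * base)
    return env_factor * total


def combat_power(strength, operational_factor, cev):
    """Apply operational and behavioral (CEV) adjustments to strength."""
    return strength * operational_factor * cev


attacker = [
    WeaponGroup("infantry", 1000, 1.0),
    WeaponGroup("armor", 50, 20.0),
    WeaponGroup("air_firepower", 10, 500.0),  # raw score 5000, capped at 1000
]
s_att = force_strength(attacker)
p_att = combat_power(s_att, operational_factor=1.0, cev=1.2)
print(s_att, p_att)
```

The cap is what keeps a large air contribution from substituting fully for ground firepower in this toy version; a purely linear sum would have credited the attacker with all 5000 points of air firepower.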

Requirement for Consistent Scoring of Weapons, Force Aggregation, and Situational Adjustment for Operational Circumstances

The salient point to be gleaned from Fig. 1 and 2 is that the same (or at least consistent) weapons-scoring, aggregation, and situational-adjustment methodologies must be used for both developing functional relationships and then playing them to model future combat. The corresponding computational methods function as a system (organic whole) for determining relative combat power, e.g. Pa/Pd. For the development of functional relationships from historical data, a force ratio (relative combat power of the two opposing sides, e.g. attacker’s combat power divided by that of the defender, Pa/Pd) is computed (i.e. it is a derived quantity) as the independent variable, with observed combat outcome being the dependent variable. Thus, as discussed above, this force ratio depends on the methodologies for scoring weapons, aggregating force strengths, and adjusting a force’s combat power for the operational circumstances of the engagement. It is a priori not clear that different scoring, aggregation, and situational-adjustment methodologies will lead to similar derived values. If such different computational procedures were to be used, these derived values should be recomputed and the corresponding functional relationships rederived and replotted.
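A small Python sketch makes the consistency requirement concrete. The two score tables and the force lists below are invented for illustration, and the simple linear sum is an assumption; the only point is that the same two forces yield different force ratios under different scoring systems, so a casualty-rate curve calibrated with one system would be entered at the wrong abscissa if the other were used.

```python
def force_ratio(attacker, defender, scores):
    """Attacker/defender ratio under a given weapon-scoring table.

    Uses a plain linear sum of count * score (an assumption made for
    this sketch, not any particular model's aggregation rule).
    """
    pa = sum(n * scores[w] for w, n in attacker.items())
    pd = sum(n * scores[w] for w, n in defender.items())
    return pa / pd


# Hypothetical forces: weapon type -> number of weapons.
attacker = {"tank": 100, "rifle": 3000}
defender = {"tank": 20, "rifle": 2000}

# Two hypothetical scoring systems that disagree on the value of a tank.
scores_a = {"tank": 50.0, "rifle": 1.0}
scores_b = {"tank": 10.0, "rifle": 1.0}

ratio_a = force_ratio(attacker, defender, scores_a)  # 8000 / 3000
ratio_b = force_ratio(attacker, defender, scores_b)  # 4000 / 2200
print(round(ratio_a, 2), round(ratio_b, 2))
```

Nothing about the battle changed between the two calls, yet the independent variable moved from roughly 2.7 to roughly 1.8, which is why the functional relationship would have to be rederived for each scoring system.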

However, users of the Tactical Numerical Deterministic Model (TNDM) (or for that matter, its predecessor, the Quantified Judgment Model (QJM)) need not worry about this point because it was apparently meticulously observed by Colonel Dupuy in all his work. However, portions of his work have found their way into a surprisingly large number of DOD models (usually not explicitly acknowledged), but the context and range of validity of historical results have been largely ignored by others. The need for recalibration of the historical data and corresponding functional relationships has not been considered in applying Dupuy’s results for some important current DOD models.

Implications for Current DOD Models

A number of important current DOD models (namely, TACWAR and JICM discussed below) make use of some of Dupuy’s historical results without recalibrating functional relationships such as loss rates and rates of advance as a function of some force ratio (e.g. Pa/Pd). As discussed above, it is not clear that such a procedure will capture the essence of past combat experience. Moreover, in calculating losses, Dupuy first determines personnel losses (expressed as a percent loss of personnel strength, i.e., number of combatants on a side) and then calculates equipment losses as a function of this casualty rate (e.g., see Dupuy [1971, pp. 219-223], also [1990, Chapters 5 through 7][11]). These latter functional relationships are apparently not observed in the models discussed below. In fact, only Dupuy (going back to Dupuy [1979][12]) takes personnel losses to depend on a force ratio and other pertinent variables, with materiel losses being taken as derivative from this casualty rate.

For example, TACWAR determines personnel losses[13] by computing a force ratio and then consulting an appropriate casualty-rate curve (referred to as empirical data), much in the same fashion as ATLAS did[14]. However, such a force ratio is computed using a linear model with weapon values determined by the so-called antipotential-potential method[15]. Unfortunately, this procedure may not be consistent with how the empirical data (i.e. the casualty-rate curves) was developed. Further research is required to demonstrate that valid casualty estimates are obtained when different weapon scoring, aggregation, and situational-adjustment methodologies are used to develop casualty-rate curves from historical data and to use them to assess losses in aggregated combat models. Furthermore, TACWAR does not use Dupuy’s model for equipment losses (see above), although it does purport, as just noted above, to use “historical data” (e.g., see Kerlin et al. [1975, p. 22]) to compute personnel losses as a function (among other things) of a force ratio (given by a linear relationship), involving close air support values in a way never used by Dupuy. Although their force-ratio determination methodology does have logical and mathematical merit, it is not the way that the historical data was developed.

Moreover, RAND (Allen [1992]) has more recently developed what is called the situational force scoring (SFS) methodology for calculating force ratios in large-scale, aggregated-force combat situations to determine loss and movement rates. Here, SFS refers essentially to a force-aggregation and situation-adjustment methodology, which has many conceptual elements in common with Dupuy’s methodology (except, most notably, extensive testing against historical data, especially documentation of such efforts). This SFS was originally developed for RSAS[16] and is today used in JICM[17]. It also apparently uses a weapon-scoring system developed at RAND[18]. It purports (no documentation given [citation of unpublished work]) to be consistent with historical data (including the ATLAS casualty-rate curves) (Allen [1992, p. 41]), but again no consideration is given to recalibration of historical results for different weapon scoring, force-aggregation, and situational-adjustment methodologies. SFS emphasizes adjusting force strengths according to operational circumstances (the “situation”) of the engagement (including surprise), with many innovative ideas (but in some major ways has little connection with previous work of others[19]). The resulting model contains many more details than historical combat data would support. It is also a methodology that differs in many essential ways from that used previously by any investigator. In particular, it is doubtful that it develops force ratios in a manner consistent with Dupuy’s work.

Final Comments

Use of (sophisticated) mathematics for modeling past historical combat (and extrapolating it into the future for planning purposes) is no reason for ignoring Dupuy’s work. One would think that the current Military OR community would try to understand Dupuy’s work before trying to improve and extend it. In particular, Colonel Dupuy’s various computational procedures (including constants) must be considered as an organic whole (i.e. a system) supporting the development of functional relationships. If one ignores this computational system and simply tries to use some isolated aspect, the result may be interesting and even logically sound, but it probably lacks any scientific validity.

REFERENCES

P. Allen, “Situational Force Scoring: Accounting for Combined Arms Effects in Aggregate Combat Models,” N-3423-NA, The RAND Corporation, Santa Monica, CA, 1992.

L. B. Anderson, “A Briefing on Anti-Potential Potential (The Eigen-value Method for Computing Weapon Values),” WP-2, Project 23-31, Institute for Defense Analyses, Arlington, VA, March 1974.

B. W. Bennett, et al, “RSAS 4.6 Summary,” N-3534-NA, The RAND Corporation, Santa Monica, CA, 1992.

B. W. Bennett, A. M. Bullock, D. B. Fox, C. M. Jones, J. Schrader, R. Weissler, and B. A. Wilson, “JICM 1.0 Summary,” MR-383-NA, The RAND Corporation, Santa Monica, CA, 1994.

P. K. Davis and J. A. Winnefeld, “The RAND Strategic Assessment Center: An Overview and Interim Conclusions About Utility and Development Options,” R-2945-DNA, The RAND Corporation, Santa Monica, CA, March 1983.

T.N. Dupuy, Numbers, Predictions and War: Using History to Evaluate Combat Factors and Predict the Outcome of Battles, The Bobbs-Merrill Company, Indianapolis/New York, 1979.

T.N. Dupuy, Numbers, Predictions and War, Revised Edition, HERO Books, Fairfax, VA, 1985.

T.N. Dupuy, Understanding War: History and Theory of Combat, Paragon House Publishers, New York, 1987.

T.N. Dupuy, Attrition: Forecasting Battle Casualties and Equipment Losses in Modern War, HERO Books, Fairfax, VA, 1990.

General Research Corporation (GRC), “A Hierarchy of Combat Analysis Models,” McLean, VA, January 1973.

Historical Evaluation and Research Organization (HERO), “Average Casualty Rates for War Games, Based on Historical Data,” 3 Volumes in 1, Dunn Loring, VA, February 1967.

E. P. Kerlin and R. H. Cole, “ATLAS: A Tactical, Logistical, and Air Simulation: Documentation and User’s Guide,” RAC-TP-338, Research Analysis Corporation, McLean, VA, April 1969 (AD 850 355).

E.P. Kerlin, L.A. Schmidt, A.J. Rolfe, M.J. Hutzler, and D.L. Moody, “The IDA Tactical Warfare Model: A Theater-Level Model of Conventional, Nuclear, and Chemical Warfare, Volume II - Detailed Description,” R-211, Institute for Defense Analyses, Arlington, VA, October 1975 (AD B009 692L).

R. McQuie, “Military History and Mathematical Analysis,” Military Review 50, No. 5, 8-17 (1970).

S.M. Robinson, “Shadow Prices for Measures of Effectiveness, I: Linear Model,” Operations Research 41, 518-535 (1993).

J.G. Taylor, Lanchester Models of Warfare, Vols. I & II, Operations Research Society of America, Alexandria, VA, 1983. (a)

J.G. Taylor, “A Lanchester-Type Aggregated-Force Model of Conventional Ground Combat,” Naval Research Logistics Quarterly 30, 237-260 (1983). (b)

NOTES

[1] For example, see Taylor [1983a, Section 7.18], which contains a number of examples. The basic references given there may be more accessible through Robinson [1993].

[2] This term was apparently coined by L.B. Anderson [1974] (see also Kerlin et al. [1975, Chapter I, Section D.3]).

[3] The Tactical Warfare (TACWAR) model is a theater-level, joint-warfare, computer-based combat model that is currently used for decision support by the Joint Staff and essentially all CINC staffs. It was originally developed by the Institute for Defense Analyses in the mid-1970s (see Kerlin et al. [1975]), originally referred to as TACNUC, which has been continually upgraded until (and including) the present day.

[4] For example, see Kerlin and Cole [1969], GRC [1973, Fig. 6-6], or Taylor [1983b, Fig. 5] (also Taylor [1983a, Section 7.13]).

[5] The only apparent difference between Dupuy [1979] and Dupuy [1985] is the addition of an appendix (Appendix C “Modified Quantified Judgment Analysis of the Bekaa Valley Battle”) to the end of the latter (pp. 241-251). Hence, the page content is apparently the same for these two books for pp. 1-239.

[6] Technically speaking, one also has the engagement type and possibly several other descriptors (denoted in Fig. 1 as reduced list of operational circumstances) as other inputs to a historical battle.

[7] In Dupuy [1979, e.g. pp. 43-46] only environmental variables are mentioned, although basically the same formulas underlie both Dupuy [1979] and Dupuy [1987]. For simplicity, Fig. 1 and 2 follow this usage and employ the term “environmental circumstances.”

[8] In Dupuy [1979, e.g. pp. 46-47] only operational variables are mentioned, although basically the same formulas underlie both Dupuy [1979] and Dupuy [1987]. For simplicity, Fig. 1 and 2 follow this usage and employ the term “operational circumstances.”

[9] Chris Lawrence has kindly brought to my attention that since the same value for troop dispersion from an historical period (e.g. see Dupuy [1987, p. 84]) is used for both the attacker and also the defender, troop dispersion does not actually affect the determination of relative combat power Pa/Pd.

[10] Eight different weapon types are considered, with three being classified as infantry weapons (e.g. see Dupuy [1979, pp. 43-44], [1981 pp. 85-86]).

[11] Chris Lawrence has kindly informed me that Dupuy’s work on relating equipment losses to personnel losses goes back to the early 1970s and even earlier (e.g. see HERO [1966]). Moreover, Dupuy’s [1992] book Future Wars gives some additional empirical evidence concerning the dependence of equipment losses on casualty rates.

[12] But actually going back much earlier as pointed out in the previous footnote.

[13] See Kerlin et al. [1975, Chapter I, Section D.1].

[14] See Footnote 4 above.

[15] See Kerlin et al. [1975, Chapter I, Section D.3]; see also Footnotes 1 and 2 above.

[16] The RAND Strategy Assessment System (RSAS) is a multi-theater aggregated combat model developed at RAND in the early 1980s (for further details see Davis and Winnefeld [1983] and Bennett et al. [1992]). It evolved into the Joint Integrated Contingency Model (JICM), which is a post-Cold War redesign of the RSAS (starting in FY92).

[17] The Joint Integrated Contingency Model (JICM) is a game-structured computer-based combat model of major regional contingencies and higher-level conflicts, covering strategic mobility, regional conventional and nuclear warfare in multiple theaters, naval warfare, and strategic nuclear warfare (for further details, see Bennett et al. [1994]).

[18] RAND apparently replaced one weapon-scoring system by another (e.g. see Allen [1992, pp. 9, 15, and 87-89]) without making any other changes in their SFS System.

[19] For example, both Dupuy’s early HERO work (e.g. see Dupuy [1967]), reworks of these results by the Research Analysis Corporation (RAC) (e.g. see RAC [1973, Fig. 6-6]), and Dupuy’s later work (e.g. see Dupuy [1979]) considered daily fractional casualties for the attacker and also for the defender as basic casualty-outcome descriptors (see also Taylor [1983b]). However, RAND does not do this, but considers the defender’s loss rate and a casualty exchange ratio as being the basic casualty-production descriptors (Allen [1992, pp. 41-42]). The great value of using the former set of descriptors (i.e. attacker and defender fractional loss rates) is that not only is casualty assessment more straightforward (especially development of functional relationships from historical data) but also qualitative model behavior is readily deduced (see Taylor [1983b] for further details).

Comparing the RAND Version of the 3:1 Rule to Real-World Data

[The article below is reprinted from the Winter 2010 edition of The International TNDM Newsletter.]

Christopher A. Lawrence

For this test, The Dupuy Institute took advantage of two of its existing databases for the DuWar suite of databases. The first is the Battles Database (BaDB), which covers 243 battles from 1600 to 1900. The second is the Division-level Engagement Database (DLEDB), which covers 675 division-level engagements from 1904 to 1991.

The first was chosen to provide a historical context for the 3:1 rule of thumb. The second was chosen so as to examine how this rule applies to modern combat data.

We decided that this should be tested to the RAND version of the 3:1 rule as documented by RAND in 1992 and used in JICM [Joint Integrated Contingency Model] (with SFS [Situational Force Scoring]) and other models. This rule, as presented by RAND, states: “[T]he famous ‘3:1 rule,’ according to which the attacker and defender suffer equal fractional loss rates at a 3:1 force ratio if the battle is in mixed terrain and the defender enjoys ‘prepared’ defenses…”

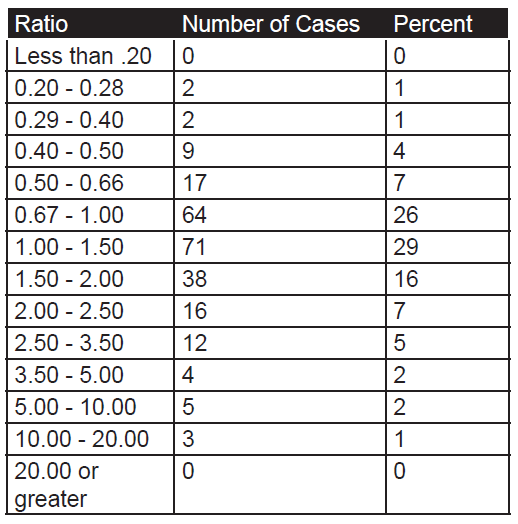

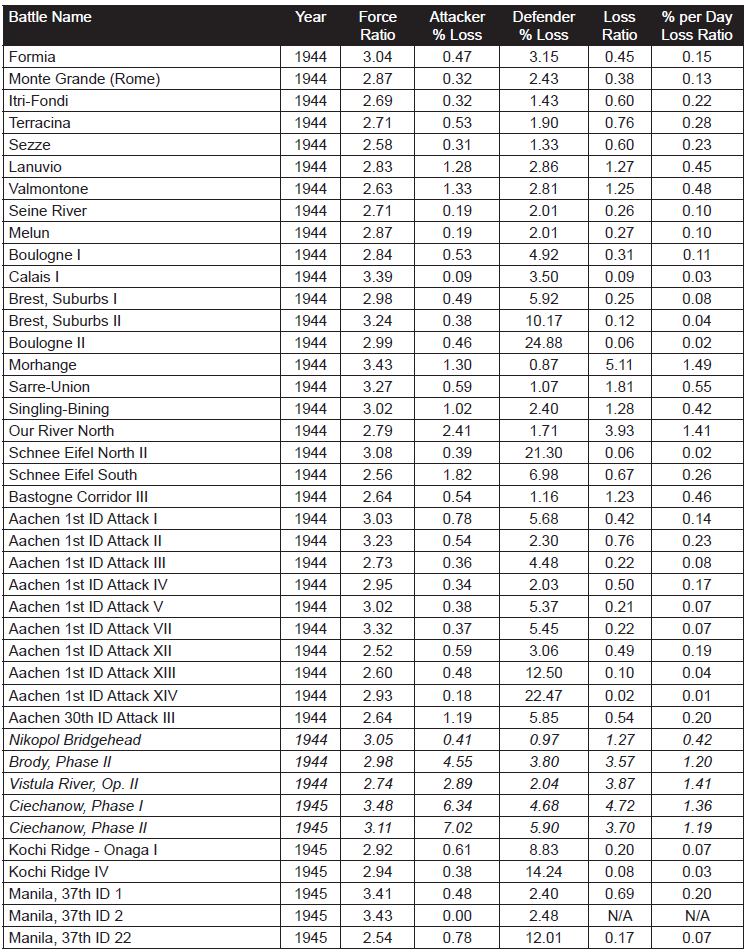

Therefore, we selected out all those engagements from these two databases that ranged from force ratios of 2.5 to 1 to 3.5 to 1 (inclusive). It was then a simple matter to map those to a chart that looked at attacker losses compared to defender losses. In the case of the pre-1904 cases, even with a large database (243 cases), there were only 12 cases of combat in that range, hardly statistically significant. That was because most of the combat was at odds ratios in the range of 0.50-to-1 to 2.00-to-1.
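The selection step can be sketched in a few lines of Python. The record layout, the field names, and the placeholder engagements are assumptions for illustration and are not taken from the BaDB or DLEDB; the force ratio here is assumed to be a simple attacker-to-defender strength ratio.

```python
from dataclasses import dataclass


@dataclass
class Engagement:
    name: str
    attacker_strength: int
    defender_strength: int

    @property
    def force_ratio(self) -> float:
        # Assumed definition: attacker strength divided by defender strength.
        return self.attacker_strength / self.defender_strength


def select_near_three_to_one(engagements, low=2.5, high=3.5):
    """Keep engagements whose force ratio lies in [low, high], inclusive."""
    return [e for e in engagements if low <= e.force_ratio <= high]


# Placeholder records, not real database entries.
sample = [
    Engagement("placeholder A", 30000, 10000),  # 3.0, kept
    Engagement("placeholder B", 25000, 10000),  # 2.5, kept (inclusive edge)
    Engagement("placeholder C", 18000, 10000),  # 1.8, dropped
    Engagement("placeholder D", 36000, 10000),  # 3.6, dropped
]

selected = select_near_three_to_one(sample)
print([e.name for e in selected])
```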

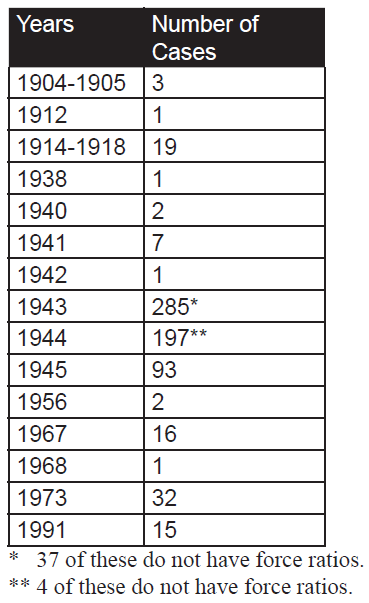

The count of number of engagements by odds in the pre-1904 cases:

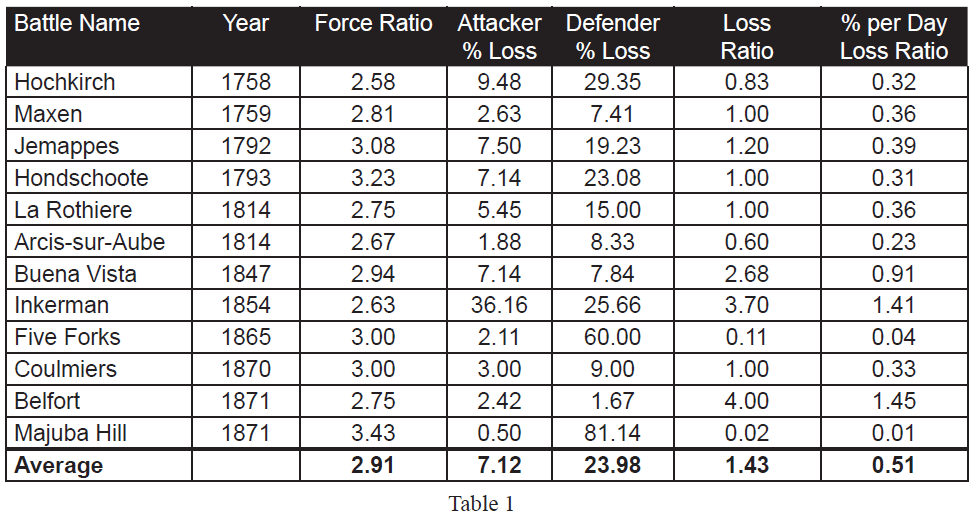

As the database is one of battles, then usually these are only joined at reasonably favorable odds, as shown by the fact that 88 percent of the battles occur between 0.40 and 2.50 to 1 odds. The twelve pre-1904 cases in the range of 2.50 to 3.50 are shown in Table 1.

If the RAND version of the 3:1 rule was valid, one would expect that the “Percent per Day Loss Ratio” (the last column) would hover around 1.00, as this is the ratio of attacker percent loss rate to the defender percent loss rate. As it is, 9 of the 12 data points are noticeably below 1 (below 0.40 or a 1 to 2.50 exchange rate). This leaves only three cases (25%) with an exchange rate that would support such a “rule.”
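To make the measure concrete, the following Python sketch computes a percent-per-day loss ratio for one invented engagement. The strengths, casualties, and duration are made-up numbers, and the function names are hypothetical. It shows why equal absolute losses at a 3:1 force ratio produce a percent loss ratio of about 0.33 rather than the 1.00 the RAND version of the rule would predict.

```python
def percent_per_day(casualties, strength, days):
    """Percent of starting strength lost per day of combat."""
    return 100.0 * casualties / strength / days


def percent_loss_ratio(att_cas, att_str, def_cas, def_str, days):
    """Attacker percent-per-day loss rate divided by the defender's."""
    return (percent_per_day(att_cas, att_str, days)
            / percent_per_day(def_cas, def_str, days))


# Invented one-day engagement at exactly 3:1, with equal absolute losses.
attacker_rate = percent_per_day(600, 30000, 1)   # 2 percent per day
defender_rate = percent_per_day(600, 10000, 1)   # 6 percent per day
ratio = percent_loss_ratio(600, 30000, 600, 10000, 1)
print(attacker_rate, defender_rate, round(ratio, 2))
```

For the rule to hold in this example, the attacker would have to lose three times as many troops as the defender in absolute terms.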

If we look at the simple ratio of actual losses (vice percent losses), then the numbers come much closer to parity, but this is not the RAND interpretation of the 3:1 rule. Six of the twelve figures "hover" around an even exchange ratio, with the other six falling well away from that central point. "Hover" for the rest of this discussion means that the exchange ratio ranges from 0.50-to-1 to 2.00-to-1.

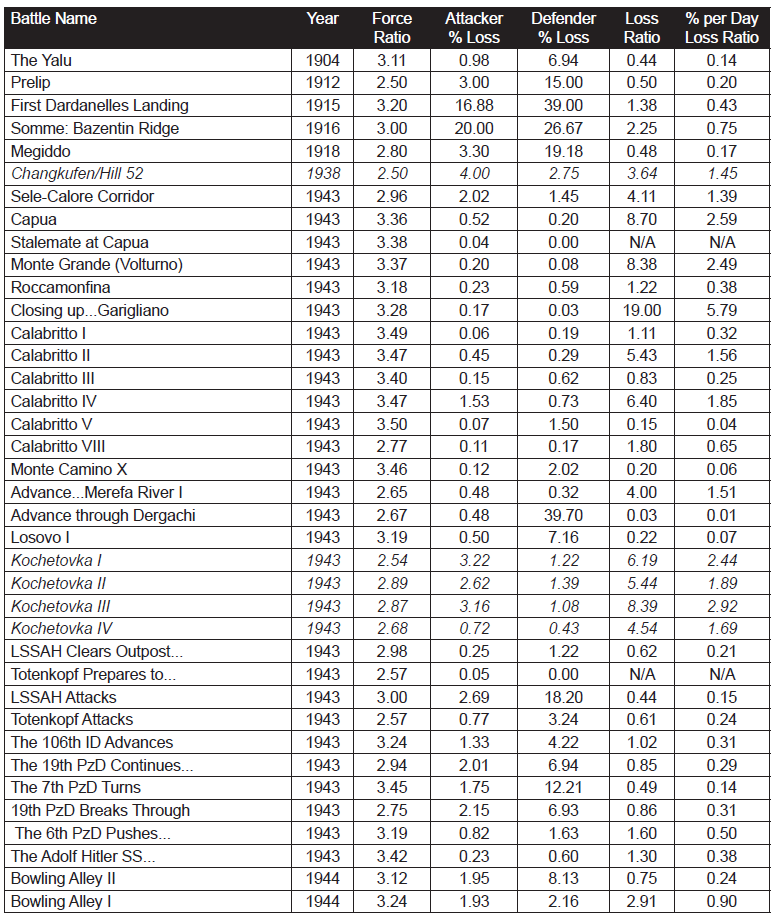

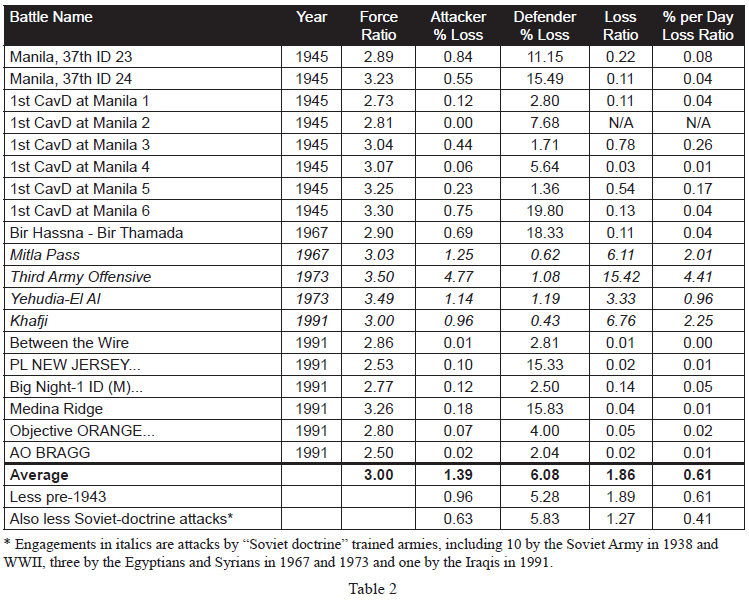

Still, this is early modern linear combat, and it is not always representative of modern war. We will therefore examine the 634 cases in the Division-level Database (which consists of 675 cases) for which we have worked out the force ratios. While this database covers the period from 1904 to 1991, most of the cases are from WWII (1939-1945). Just to compare:

As such, 87% of the cases are from WWII data and 10% of the cases are from post-WWII data. The engagements without force ratios are those that we are still working on, as The Dupuy Institute routinely expands the DLEDB. The specific cases where the force ratios are between 2.50-to-1 and 3.50-to-1 (inclusive) are shown in Table 2:

This is a total of 98 engagements at force ratios of 2.50-to-1 to 3.50-to-1, or 15 percent of the 634 engagements for which we had force ratios. Even with this fairly significant share of the overall population, we see no indication that the 3:1 rule, as RAND postulates it applies to casualties, fits the data at all. Of the 98 engagements, only 19 (19 percent) show a percent per day loss ratio (casualty exchange ratio) between 0.50-to-1 and 2.00-to-1. Another 71 cases (72 percent) fall below 0.50-to-1, and only 8 cases (8 percent) show a higher exchange ratio. The data clearly were not clustered in the 0.50-to-1 to 2.00-to-1 range, but lay well to the left of (lower than) it.
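
The tally above can be reproduced with a short sketch. The `classify` helper and its band labels are illustrative names, not part of the DLEDB; the counts are the ones reported in the text, with the "above" count taken as the remainder of the 98 cases.

```python
def classify(ratio: float, low: float = 0.5, high: float = 2.0) -> str:
    """Place a percent loss ratio below, within, or above the 'hover' band."""
    if ratio < low:
        return "below"
    if ratio > high:
        return "above"
    return "within"


# Counts as reported in the text; "above" is the remainder (98 - 19 - 71).
counts = {"below": 71, "within": 19, "above": 98 - 19 - 71}
total = sum(counts.values())

# Share of each band, rounded to whole percents as in the text.
shares = {band: round(100 * n / total) for band, n in counts.items()}

# Share of all engagements with known force ratios that fall near 3-to-1.
share_of_population = round(100 * total / 634)

print(total, shares, share_of_population)
```

The band edges are treated as inclusive here, so a ratio of exactly 0.50 or 2.00 counts as "within"; the text does not state how edge values were handled, so that choice is an assumption.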

Looking just at straight exchange ratios, we do get a better fit, with 31 percent (30 cases) of the figures falling between 0.50-to-1 and 2.00-to-1. Still, an even exchange may not be the norm, with 45 percent (44 cases) lower and 24 percent (24 cases) higher. By definition, this fit represents one-third the losses for the attacker postulated in the RAND version of the 3:1 rule. This is effectively an order of magnitude difference, and it clearly does not represent the norm or the center case.

The percent per day loss exchange ratio ranges from 0.00 to 5.71. The data tend to be clustered at the lower values, so the high values are very much outliers. The highest percent exchange ratio is 5.71, the second highest is 4.41, and the third highest is 2.92. At the other end of the spectrum, there are four cases where no losses were suffered by one side and seven where the exchange ratio was 0.01 or less. Ignoring the "N/A" cases (no losses suffered by one side) and the two high "outliers" (5.71 and 4.41) leaves a range of values from 0.00 to 2.92 across 92 cases. With an even distribution across that range, one would expect 51 percent of them to fall between 0.50-to-1 and 2.00-to-1. With only 19 percent of the cases in that range, one is left to conclude that there is no clear correlation here. In fact, the effect is clearly the opposite: there is a negative relationship. Not only is the RAND construct unsupported, it is clearly and soundly contradicted by this data. Furthermore, the RAND construct is theoretically a worse predictor of casualty rates than randomly selecting a value for the percent exchange ratio between 0 and 2.92. We do believe this data is appropriate and accurate for such a test.
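
The 51 percent baseline follows from simple arithmetic, sketched below. It assumes, as the text does, a uniform spread of values between 0.00 and 2.92; the variable names are illustrative.

```python
# Band that would count as a roughly even percent exchange.
band_low, band_high = 0.50, 2.00

# Range of observed values once N/A cases and the two high outliers are dropped.
range_min, range_max = 0.00, 2.92

# Under a uniform spread, the expected share is the band width over the range width.
expected_share = (band_high - band_low) / (range_max - range_min)

# Cases remaining: 98 total, less 4 N/A cases, less 2 outliers.
cases_in_range = 98 - 4 - 2

# Observed share as reported in the text (19 of the 98 engagements).
observed_share = 19 / 98

print(f"expected {expected_share:.0%}, observed {observed_share:.0%}, "
      f"cases {cases_in_range}")
```

Using the 92 remaining cases as the denominator instead of 98 raises the observed share only to about 21 percent, still far below the uniform baseline.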

As only 19 of the 3:1 attacks fall in the even percent exchange rate range, we should probably look at these cases for a moment:

One will note, in these 19 cases, that the average attacker casualties are way out of line with the average for the entire data set (3.20 versus 1.39 or 3.20 versus 0.63 with pre-1943 and Soviet-doctrine attackers removed). The reverse is the case for the defenders (3.12 versus 6.08 or 3.12 versus 5.83 with pre-1943 and Soviet-doctrine attackers removed). Of course, of the 19 cases, 2 are pre-1943 cases and 7 are cases of Soviet-doctrine attackers (in fact, 8 of the 14 cases of the Soviet-doctrine attackers are in this selection of 19 cases). This leaves 10 other cases from the Mediterranean and ETO (Northwest Europe 1944). These are clearly the unusual cases, outliers, etc. While the RAND 3:1 rule may be applicable for the Soviet-doctrine offensives (as it applies to 8 of the 14 such cases we have), it does not appear to be applicable to anything else. By the same token, it also does not appear to apply to virtually any cases of post-WWII combat. This all strongly argues that not only is the RAND construct not proven, but it is indeed clearly not correct.

The fact that this construct also appears in Soviet literature, but nowhere else in US literature, indicates that this is indeed where the rule was drawn from. One must consider that the original scenarios run for the RSAC [RAND Strategy Assessment Center] wargame were "Fulda Gap" and Korean War scenarios. As such, they were regularly conducting battles with Soviet attackers versus Allied defenders. It would appear that the 3:1 rule that they used more closely reflected the experiences of the Soviet attackers in WWII than anything else. Therefore, it may have been a fine representation for those scenarios as long as there was no US counterattacking or US offensives (and assuming that the Soviet Army of the 1980s performed at the same level as it did in the 1940s).

There was a clear relative performance difference between the Soviet Army and the German Army in World War II (see our Capture Rate Study Phase I & II and Measuring Human Factors in Combat for a detailed analysis of this).[1] It was roughly on the order of a 3-to-1 casualty exchange ratio. Therefore, it is not surprising that Soviet writers would create analytical tables based upon an equal percentage exchange of losses when attacking at 3:1. What is surprising is that such a table would be used in the US to represent US forces now. This is clearly not a correct application.

Therefore, RAND’s SFS, as currently constructed, is calibrated to, and should only be used to represent, a Soviet-doctrine attack on first world forces where the Soviet-style attacker is clearly not properly trained and where the degree of performance difference is similar to that between the Germans and Soviets in 1942-44. It should not be used for US counterattacks, US attacks, or for any forces of roughly comparable ability (regardless of whether they follow Soviet-style doctrine). Furthermore, it should not be used for US attacks against forces of inferior training, motivation and cohesiveness. If it is, then any such tables should be expected to produce incorrect results, with attacker losses being far too high relative to the defender. In effect, the tables unrealistically penalize the attacker.

As JICM with SFS is now being used for a wide variety of scenarios, it should not be used at all until this fundamental error is corrected, even if that use is only for training. With combat tables keyed to a result that is clearly off by an order of magnitude, the danger of negative training is high.

NOTES

[1] Capture Rate Study Phases I and II Final Report (The Dupuy Institute, March 6, 2000) (2 Vols.) and Measuring Human Factors in Combat—Part of the Enemy Prisoner of War Capture Rate Study (The Dupuy Institute, August 31, 2000). Both of these reports are available through our web site.