This is in response to a long comment by Clinton Reilly about Breakpoints (Forced Changes in Posture) on this thread:

Reilly starts with a very nice statement of the issue:

Clearly breakpoints are crucial when modelling battlefield combat. I have read extensively about it using mostly first hand accounts of battles rather than high level summaries. Some of the major factors causing it appear to be loss of leadership (e.g. Harald’s death at Hastings), loss of belief in the units capacity to achieve its objectives (e.g. the retreat of the Old Guard at Waterloo, surprise often figured in Mongol successes, over confidence resulting in impetuous attacks which fail dramatically (e.g. French attacks at Agincourt and Crecy), loss of control over the troops (again Crecy and Agincourt) are some of the main ones I can think of off hand.

The break-point crisis seems to occur against a background of confusion, disorder, mounting casualties, increasing fatigue and loss of morale. Casualties are part of the background but not usually the actual break point itself.

He then states:

Perhaps a way forward in the short term is to review a number of first hand battle accounts (I am sure you can think of many) and calculate the percentage of times these factors and others appear as breakpoints in the literature.

This has been done. In effect, this is what Robert McQuie did in his article, and it is also what the DMSI breakpoints study was based on.

Mr. Reilly then concludes:

Why wait for the military to do something? You will die of old age before that happens!

That is distinctly possible. If this really were a simple issue that one person working for a year could produce a nice definitive answer for... it would already have been done!

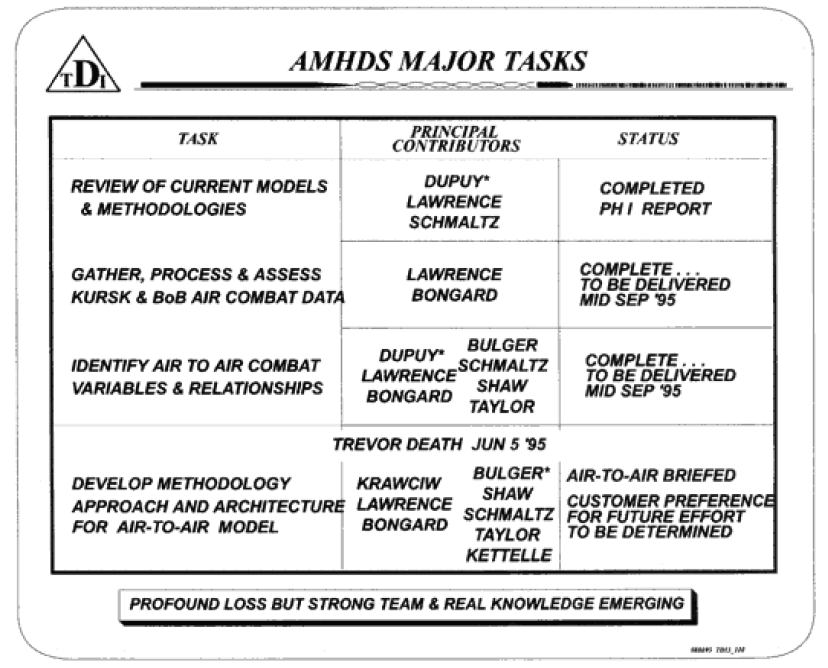

Let us look at the 1988 Breakpoints study. There was some effort leading up to that point. Trevor Dupuy and DMSI had already looked into the issue. This included developing a database of engagements (the Land Warfare Data Base or LWDB) and using that to examine the nature of breakpoints. The McQuie article was developed from this database, and his article was closely coordinated with Trevor Dupuy. This was part of the effort that led the U.S. Army’s Concepts Analysis Agency (CAA) to issue an RFP (Request for Proposal). It was competitive. I wrote the proposal that won the contract award, but the contract was given to Dr. Janice Fain to lead. My proposal was more quantitative in approach than what she actually did. Her effort was more of an intellectual exploration of the issue. I gather this was done with the assumption that there would be a follow-on contract (there never was). Now, up until that point, at least a man-year of effort had been expended, and if you count the time to develop the databases used, it was several man-years.

Now the Breakpoints study was headed up by Dr. Janice B. Fain, who worked on it for the better part of a year. Trevor N. Dupuy worked on it part-time. Gay M. Hammerman conducted the interviews with the veterans. Richard C. Anderson researched and created an additional 24 engagements that had clear breakpoints in them for the study (that is DMSI report 117B). Charles F. Hawkins was involved in analyzing the engagements from the LWDB. There were several other people also involved to some extent. Also, 39 veterans were interviewed for this effort. Many were brought into the office to talk about their experiences (that was truly entertaining). There were also a half-dozen other staff members and consultants involved in the effort, including Lt. Col. James T. Price (USA, ret), Dr. David Segal (sociologist), Dr. Abraham Wolf (a research psychologist), Dr. Peter Shapiro (social psychology) and Col. John R. Brinkerhoff (USA, ret). There were consultant fees, travel costs and other expenses related to that. So, the entire effort took at least three “man-years” of effort. This was what was needed just to get to the point where we are able to take the next step.

This is not something that a single scholar can do. That is why funding is needed.

As to dying of old age before that happens... that may very well be the case. Right now, I am working on two books, one of them under contract. I sort of need to finish those up before I look at breakpoints again. After that, I will decide whether to work on a follow-on to America’s Modern Wars (called Future American Wars) or work on a follow-on to War by Numbers (called War by Numbers II... being the creative guy that I am). Of course, neither of these books is selling well... so perhaps my time would be better spent writing another Kursk book, or any number of other interesting projects on my plate. Anyhow, if I do War by Numbers II, then I do plan on devoting several chapters to breakpoints. This would include using the 1,000+ cases that now populate our combat databases to do some analysis. This is going to take some time. So... I may get to it next year or the year after that, but I may not. If someone really needs the issue addressed, they really need to contract for it.