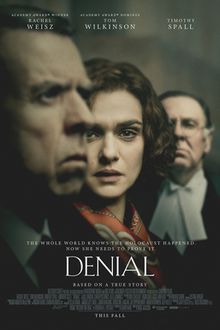

A new movie called Denial is being released this month. It is about the libel lawsuit pursued by controversial historian David Irving against U.S. academic historian Deborah Lipstadt. David Irving was a British historian who specialized in the military history of the Third Reich. His writings downplayed the Holocaust and claimed that there was no evidence that Hitler knew about it. Lipstadt took David Irving to task in her 1993 book, Denying the Holocaust. David Irving took her to court in the UK, where libel law places the burden of proof on the defendant. She was legally required to prove that the Holocaust actually occurred and that Hitler ordered it.

Spoiler alert: He lost.

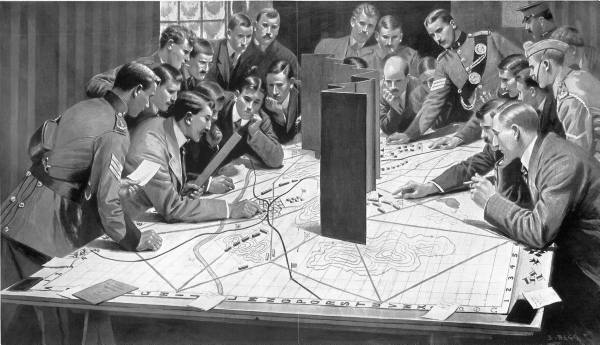

I did reference David Irving’s work twice in my book Kursk: The Battle of Prokhorovka. I was well aware of this controversy. On 4 May 1943 there was a meeting called by Adolf Hitler and attended by Colonel General Heinz Guderian, Field Marshal Erich von Manstein, Colonel General Hans Jeschonnek and many others. This rather famous meeting, part of the German planning for the Battle of Kursk, has been discussed in most books on Kursk.

The problem is that there are only three accounts of the meeting. There is a detailed account of the meeting in Heinz Guderian’s book, Panzer Leader, which provides a three-page narrative of the meeting. There is only a brief discussion in Manstein’s book, Lost Victories, which mentions the meeting but provides no details. These are the two sources that most people have used. Many historians have just accepted the Guderian account. One Kursk book started with the narrative of this meeting based upon Guderian’s account.

But there is a third source. This is an entry in Field Marshal Wolfram Baron von Richthofen’s diary based upon a conversation he had with General Jeschonnek, the Chief of Staff of the Luftwaffe. It stated:

“[On 27 April] General Model declared he was not strong enough and would probably get bogged down or take too long. The Fuehrer took the view that the attack must be punched through without fail in shortest time possible. [Early in May] General Guderian offered to furnish enough tank units within six weeks to guarantee this. The Fuehrer thus decided on a postponement of six weeks. To get the blessing of all sides on this decision, he called a conference [on 4 May] with Field Marshals von Kluge and von Manstein. At first they agreed on a postponement; but when they heard that the Fuehrer had already made his mind up to that effect, they spoke out for an immediate opening of the attack—apparently in order to avoid the odium of being blamed for the postponement themselves.”

This account directly contradicts the Guderian account. The problem is that the reference to this diary entry and its translation from German come from David Irving’s controversial book, Hitler’s War, originally published in 1977. Wolfram von Richthofen was a cousin of the famous World War I ace Manfred von Richthofen, the highest scoring ace in World War I with 80 claimed kills. Wolfram von Richthofen served with the German air unit, the Condor Legion, in the Spanish Civil War and planned the bombing of Guernica. He led a number of German air formations throughout the war and in May 1943 was the commander of the VIII Air Corps, which was to participate in the Battle of Kursk. The command of this air corps was to be taken over by General Jeschonnek for the upcoming battle (this never happened). Richthofen’s diary has been quoted from extensively by David Irving. To date, I do not know of anyone else who has translated it.

So, before I used the quote, I wrote to David Irving in 2002. I specifically asked him about the diary and where it was located. I noted this in the footnote to this passage:

“David Irving, page 514 (or pages 583–584 in his 2001 version of the book that is available on the web). According to emails received from David Irving in 2002 and 2008, this passage is a directly translated quote from the diary, and the diary was stored at the Militargeschichtliches Forschungsamt at Freiburg im Breisgau, Germany. A xerox of the page in question is stored in the Irving Collection at the Institut fur Zeitgeschichte in Munich, Germany. We have not checked these files and cannot confirm the translation.”

So, I could have accessed the files. It was possible to see the diary and check the translation, and I considered doing so. Of course, this would have required me to travel to Germany, with a translator, to examine the diary. It would have taken at least a week of my time and cost a few thousand dollars. For fairly obvious reasons, I chose not to do this. Instead, I stated in the footnote that we had not confirmed the translation.

As David Irving headed to trial, I continued to wonder about this passage. There was no reason to assume that it was faked, or deliberately grossly mistranslated. On the other hand, it was something I was not 100% sure of. But there was also no reason to assume that Guderian’s or Manstein’s account was 100% correct either, and people had freely used them without much question. So, do I build my narrative on those two well-known first-person accounts and ignore the contradictory second-hand account from Richthofen’s diary just because it came from David Irving? I decided that all accounts needed to be presented, and I left it to the reader to decide which they believed.

As I noted in my book (on page 69):

As no stenogram exists of this conference, one is left only with the memoirs of two generals and the diary of a person who did not participate. There also are what appear to be the Inspector General of Armored Troops’ notes for the meeting for 3 May. These notes clearly show the beneficial effects on tank strength of a six-week delay in the offensive. Guderian’s memoirs are quite explicit as to what happened at the conference but appear to be confused as to attendees and dates. Manstein mentions the conference and the issues in a very general sense. The Richthofen entry contradicts the other two memoirs, claiming that Guderian was the source of the six-week delay and that Manstein and Kluge supported the delay. It is impossible to resolve these differences.

The problem is that both Guderian’s and Manstein’s memoirs were written after the war. It is hard to say which passages may have been self-serving or written with an eye towards future historians. The story told by Jeschonnek was recorded at the time by Richthofen. Jeschonnek committed suicide in August 1943 and Richthofen died from a brain tumor in July 1945. Which account is more “real”?