Much has changed since James Quinlivan kicked off the discussion about manpower and counterinsurgency. One of the most significant differences is that useful collections of historical data are now available for analysis.

Previous posts in this series:

Force Ratios and Counterinsurgency

Force Ratios and Counterinsurgency II

Force Ratios and Counterinsurgency III

Dataset origins

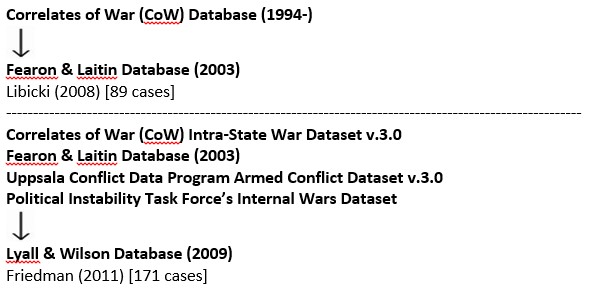

Detailed below are the lineages of the datasets used in six of the seven analyses I have discussed. The cases used by Libicki and Friedman were drawn from databases created by several academic organizations and from work by James Fearon and David Laitin [1] and by Jason Lyall and Isaiah Wilson III [2]. Both Libicki and Friedman contributed additional research of their own to complete their datasets.

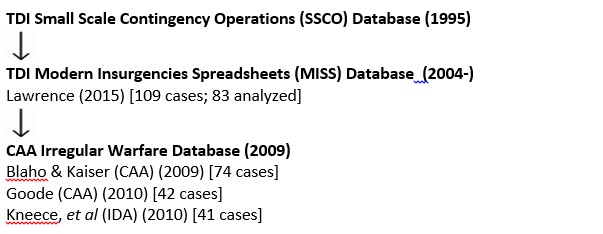

The data used by Lawrence, CAA, and IDA was all researched and compiled by The Dupuy Institute (TDI). Both TDI’s Modern Insurgencies Spreadsheets (MISS) Database and CAA’s Irregular Warfare Database contain data on at least 75 variables for each historical case.

The details of the dataset created and used by Hossack at Dstl have not been discussed in public forums, but it is likely to be similar to these.

Future directions for research

Given the general consensus among all of the studies that counterinsurgent manpower levels do correlate with outcome, the apparent disagreement over force ratio and troop density measures may be less relevant than previously thought. More data collection and testing should be done to verify the postulated relationship between counterinsurgent force levels and the local population within an active area of operation.
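
The two measures at issue can be sketched as simple ratios. This is a minimal illustration, assuming that force ratio means counterinsurgent strength divided by insurgent strength and that troop density means counterinsurgents per 1,000 residents of the area of operation. The function names and the case figures below are hypothetical and are not drawn from any of the datasets discussed.

```python
def force_ratio(coin_forces: float, insurgents: float) -> float:
    """Counterinsurgent strength divided by insurgent strength."""
    if insurgents <= 0:
        raise ValueError("insurgent strength must be positive")
    return coin_forces / insurgents


def troop_density(coin_forces: float, population: float) -> float:
    """Counterinsurgents per 1,000 residents of the area of operation."""
    if population <= 0:
        raise ValueError("population must be positive")
    return coin_forces / population * 1000


# Hypothetical case (illustrative numbers only): 50,000 counterinsurgents,
# 5,000 insurgents, 10 million people in the active area of operation.
coin, insurgents, population = 50_000, 5_000, 10_000_000
print(f"force ratio:   {force_ratio(coin, insurgents):.1f} to 1")
print(f"troop density: {troop_density(coin, population):.1f} per 1,000")
```

The same force yields a 10-to-1 force ratio and a density of 5 per 1,000 in this made-up case. Because the two numbers answer different questions (strength relative to the enemy versus strength relative to the population), studies keyed to different measures can rank the same case differently.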

Though there was consensus on the advantage of counterinsurgent manpower, there was no agreement on its overall importance. More analysis is needed to examine just how decisive manpower advantages may be. Hossack and Goode suggested that a counterinsurgent manpower advantage may be important largely to prevent insurgent military success. Hossack and Friedman suggested that there may be points of diminishing returns for manpower, and Lawrence indicated that a force ratio advantage was decisive only against insurgencies with broad popular support. Given the potential difficulty of generating significant additional counterinsurgent manpower, a manpower advantage may be applicable and useful only under particular circumstances.

Due to the limitations of the available data, all of the studies based their analysis on data averages. The figures used for insurgent and counterinsurgent force sizes were usually selected from the highest annual totals across years or decades. All of the studies indicated the need to obtain more detailed data on individual cases to allow for more discrete and dynamic analysis that could reveal undetected links and patterns. Lawrence in particular called for examination of conditions before insurgencies begin and when they are just getting underway.
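
What a single summary figure can hide is easiest to see with a short sketch. The yearly strengths below are invented for illustration. The sketch contrasts one summary force ratio, built from the highest annual total for each side taken independently (similar in spirit to the approach described above), with the ratio computed year by year, which is the kind of finer-grained series the studies call for. The record type and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class YearRecord:
    year: int
    coin_forces: int
    insurgents: int


# Hypothetical case history; not taken from any real dataset.
history = [
    YearRecord(1, 20_000, 4_000),
    YearRecord(2, 35_000, 9_000),
    YearRecord(3, 60_000, 12_000),
    YearRecord(4, 45_000, 3_000),
]


def peak_summary_ratio(records: list[YearRecord]) -> float:
    """Highest annual counterinsurgent total over highest annual insurgent
    total, each taken independently (possibly from different years)."""
    return max(r.coin_forces for r in records) / max(r.insurgents for r in records)


def yearly_ratios(records: list[YearRecord]) -> dict[int, float]:
    """Force ratio computed separately for each year of the case."""
    return {r.year: r.coin_forces / r.insurgents for r in records}


print(f"peak-based summary: {peak_summary_ratio(history):.1f} to 1")
for year, ratio in yearly_ratios(history).items():
    print(f"year {year}: {ratio:.1f} to 1")
```

In this invented case the summary figure is 5 to 1, while the yearly ratios range from about 3.9 to 1 in the second year to 15 to 1 in the fourth. This is the sort of variation that averaged or peak-year data cannot reveal.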

Friedman noted the value of quantitative analysis in helping to drive forward discussion and debate on defense and security topics. Research and analysis on insurgency and counterinsurgency were left to languish after the Vietnam War, only to be exhumed under the dire circumstances of the U.S. war in Iraq. It would be deeply unfortunate if promising new lines of inquiry were abandoned again.

Notes

[1] James D. Fearon and David D. Laitin, “Ethnicity, Insurgency, and Civil War,” American Political Science Review 97, no. 1 (February 2003).

[2] Jason Lyall and Isaiah Wilson III, “Rage Against the Machines: Explaining Outcomes in Counterinsurgency Wars,” International Organization 63 (Winter 2009).