Do we need to validate training models? The argument is that because the model is being used for training (rather than analysis), it does not require the rigorous validation that an analytical model would require. In practice, I gather this means they are not validated. It is an argument I encountered after 1997. As such, it is not addressed in my letters to TRADOC in 1996. See http://www.dupuyinstitute.org/pdf/v1n4.pdf

Over time, the modeling and simulation industry has shifted from using models for analysis to using models for training. The use of models for training has exploded, and these efforts certainly employ a large number of software coders. The question is, if the core of the analytical models has not been validated, and in some cases is known to have problems, then what are the models teaching people? To date, I am not aware of any training models that have been validated.

Let us consider the case of JICM. The core of the model's attrition calculation was Situational Force Scoring (SFS). Its attrition calculator for ground combat is based upon a version of the 3-to-1 rule comparing force ratios to exchange ratios. This is discussed in some depth in my book War by Numbers, Chapter 9, Exchange Ratios. To quote from page 76:

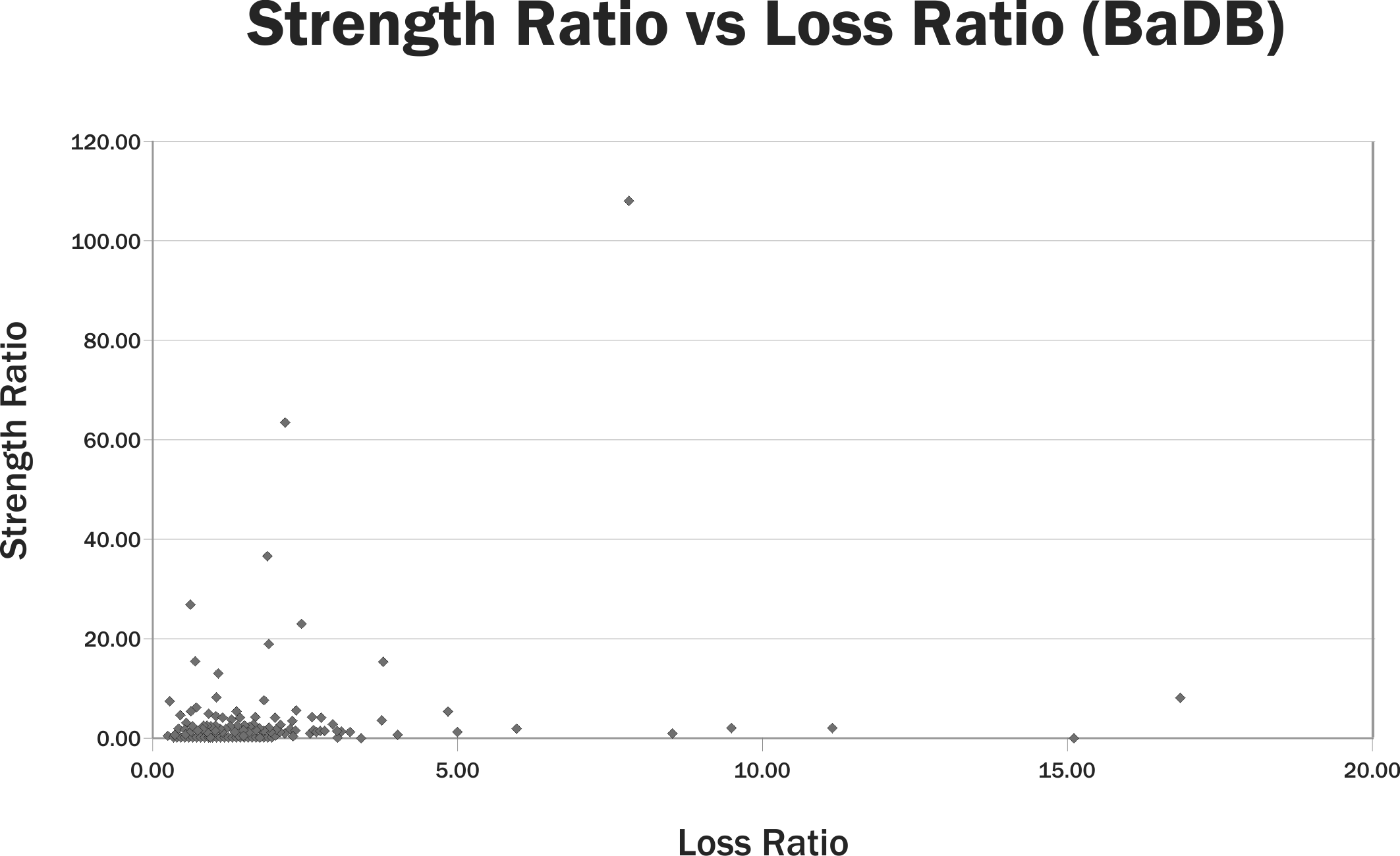

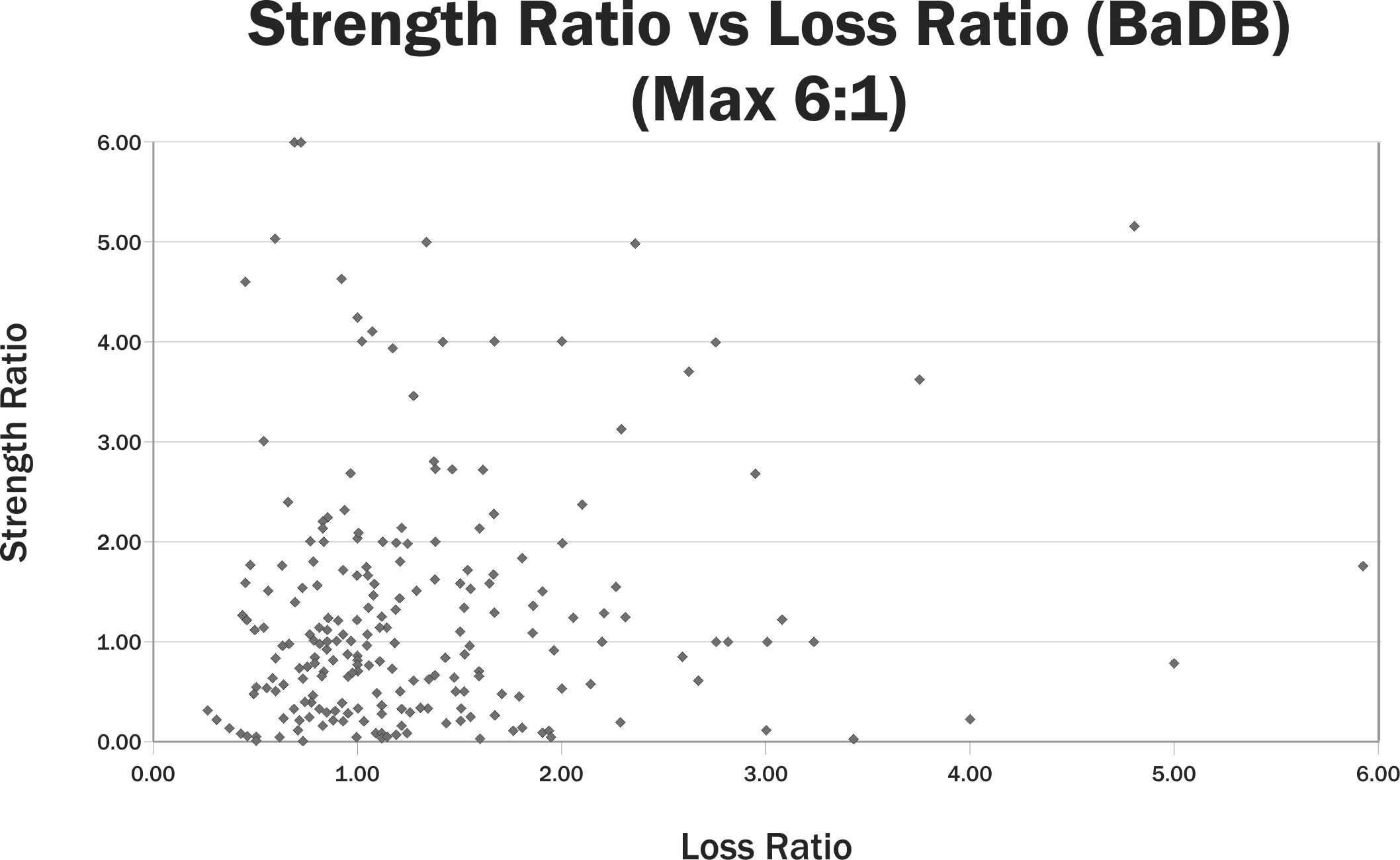

If the RAND version of the 3 to 1 rule is correct, then the data should show a 3 to 1 force ratio and a 3 to 1 casualty exchange ratio. However, there is only one data point that comes close to this out of the 243 points we examined.

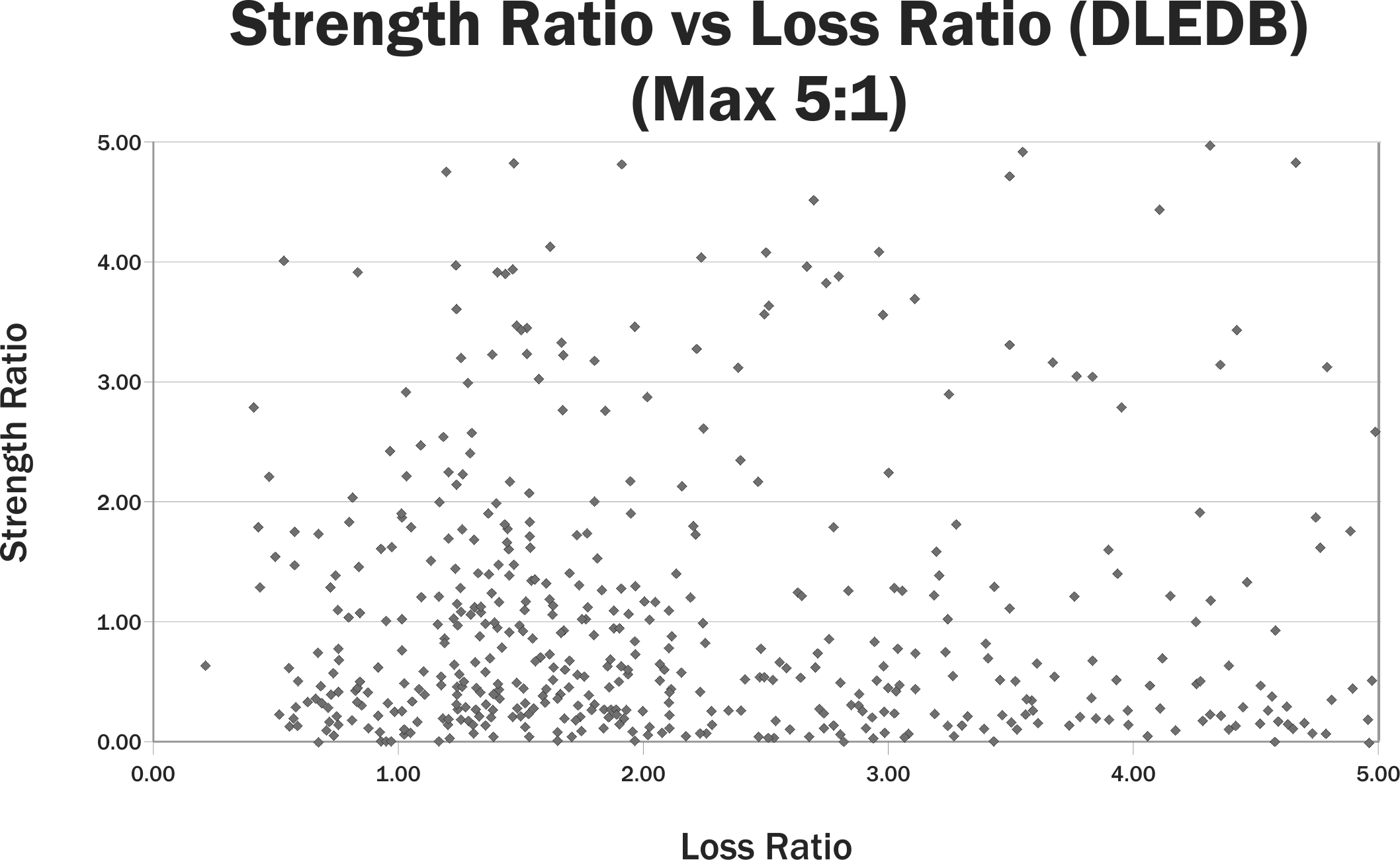

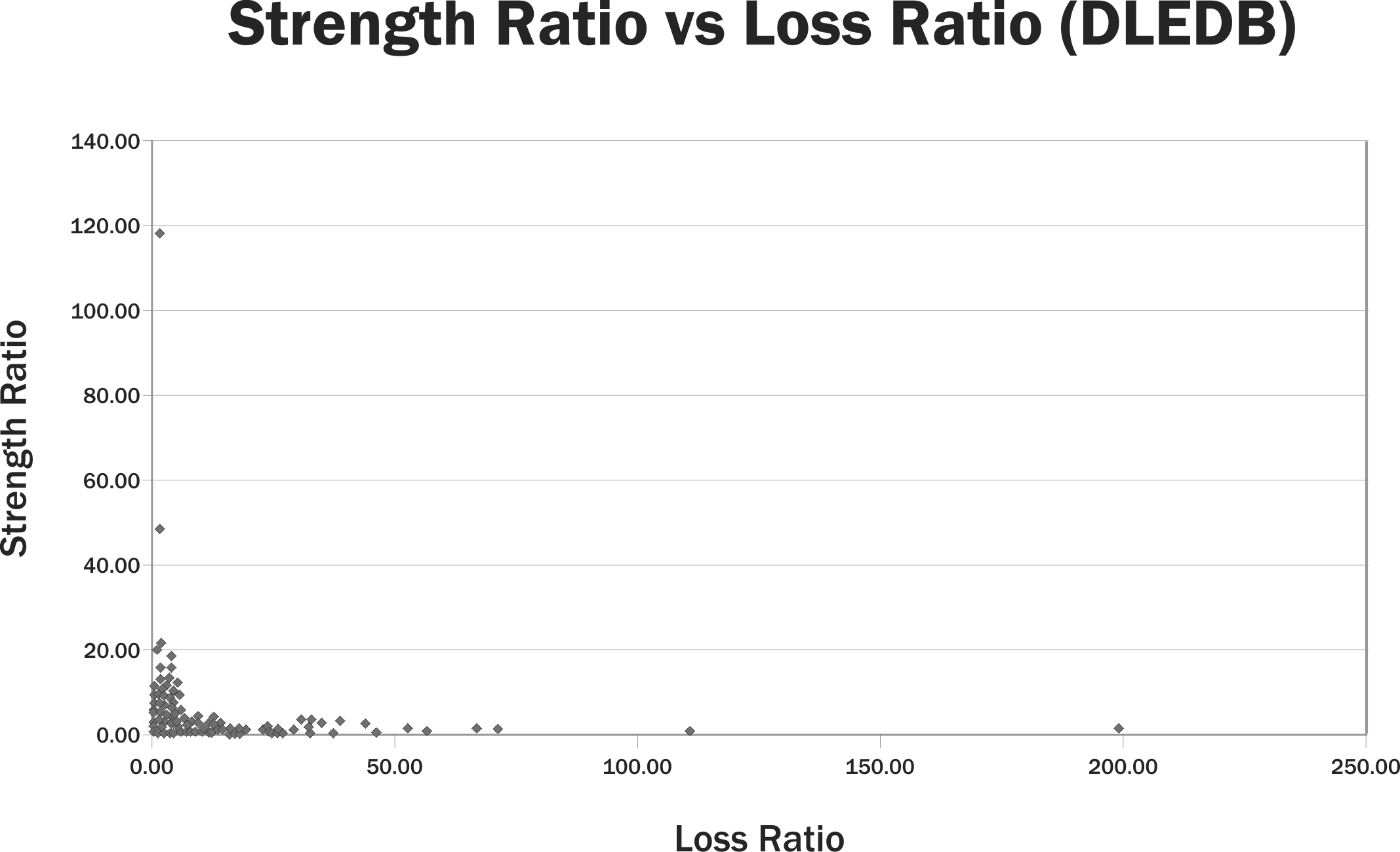

That was 243 battles from 1600-1900 using our Battles Data Base (BaDB). We also tested it against our Division Level Engagement Data Base (DLEDB) from 1904-1991, with the same result. To quote from page 78 of my book:

In the case of the RAND version of the 3 to 1 rule, there is again only one data point (out of 628) that is anywhere close to the crossover point (even fractional exchange ratio) that RAND postulates. In fact it almost looks like the data conspire to leave a noticeable hole at that point.
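For readers who want to run the same kind of check on their own data, here is a minimal sketch of the counting test described above. It is not the procedure used in War by Numbers. The function name, the 20 percent tolerance band, the definition of exchange ratio as attacker casualties divided by defender casualties, and the sample values are all assumptions made for illustration; the sample values are invented and are not drawn from the BaDB or DLEDB.

```python
def count_near_rule(engagements, target_force=3.0, target_exchange=3.0, rel_tol=0.2):
    """Count engagements whose force ratio and exchange ratio both fall
    within a relative tolerance of the point the rule predicts.

    engagements: list of (force_ratio, exchange_ratio) pairs, where
    force_ratio is attacker strength / defender strength and
    exchange_ratio is attacker casualties / defender casualties
    (both definitions are assumptions for this sketch).
    Returns (number of engagements near the target, total engagements).
    """
    hits = 0
    for force_ratio, exchange_ratio in engagements:
        near_force = abs(force_ratio - target_force) <= rel_tol * target_force
        near_exchange = abs(exchange_ratio - target_exchange) <= rel_tol * target_exchange
        if near_force and near_exchange:
            hits += 1
    return hits, len(engagements)


# Invented values for illustration only; not historical data.
sample = [(1.2, 0.8), (3.1, 2.9), (2.0, 1.5), (4.5, 0.6), (3.0, 0.9)]

hits, total = count_near_rule(sample)
print(f"{hits} of {total} engagements fall near the 3-to-1 / 3-to-1 point")
```

The width of the tolerance band is a judgment call. A real test would need to state it up front and check how sensitive the count is to that choice.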

So, does this create negative learning? If the ground operations are such that an attacker ends up losing 3 times as many troops as the defender when attacking at 3-to-1 odds, does this mean that the model is training people not to attack below those odds, and in fact, to wait until they have much more favorable odds? The model was/is (I haven’t checked recently) being used at the U.S. Army War College. This is the advanced education institute that most promotable colonels attend before advancing to be a general officer. Is such a model teaching them incorrect relationships, force ratios and combat requirements?

You fight as you train. If we are using models to help train people, then it is certainly valid to ask what those models are doing. Are they properly training our soldiers and future commanders? How do we know they are doing this? Have they been validated?

Does a Model have to be complicated?

No….but it depends on how much you want to explain. War has a lot of factors involved, some more significant than others. So, I can make a simple model, but then there are a lot of elements not covered.

Regardless of whether they are simple or complex, I still think they need to be validated.

WARSIM and other constructive simulations were and are validated by the Army National Simulation Center, assisted by the Battle (now Mission) Command Training Program, but not in terms of modeling historical engagements and comparing the outcomes of combat or weapons performance. SMEs face-validated behaviors by comparing their execution to doctrine, and validated results by SME judgment. Not all simulations validate everything the first time through.

It would be interesting to compare the whole of National Training Simulation results to, say, gunnery results for individual, platoon, and company qualification scores (comparing the laser data to the training round data) and to battalion- and brigade-sized engagements from Desert Storm and Operation Iraqi Freedom.

Also interesting would be comparing the whole of BCTP/MCTP exercises to similar NTC engagements and to combat operations.

The intangibles are also important. How would we measure, compare, and contrast the ability of a simulation operator acting at the behest of the training audience with NTC and combat operations? Perhaps the skill of the simulation operator (red and blue) would have an untoward effect upon the outcome.

Also important is the experience factor of the BCTP/MCTP/NTC OPFOR against the Blue units in simulation and operations on known ground.