Humans are a competitive lot. With machines making such rapid progress (see Moore’s Law), the singularity approaches; see the discussion between Michio Kaku and Ray Kurzweil, two prominent futurologists. The singularity is the “hypothesis that the invention of artificial super intelligence (ASI) will abruptly trigger runaway technological growth, resulting in unfathomable changes to human civilization.” (Wikipedia). The Economist has also referred to this as general artificial intelligence (GAI), a topic previously discussed in this blog.

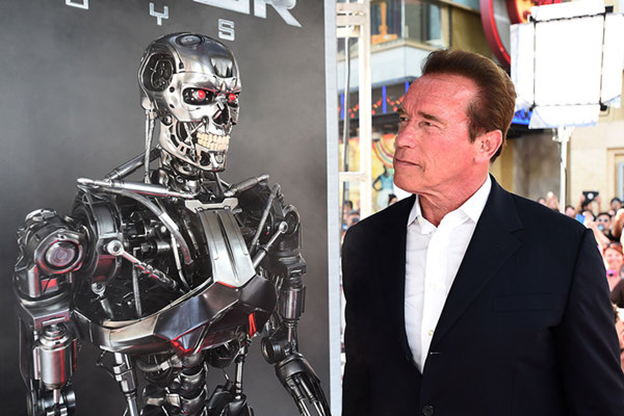

We humans also exhibit a tendency to anthropomorphize, that is, to endow any observed object with human qualities. The image above shows Arnold Schwarzenegger sizing up his robotic doppelgänger. The same tendency is evident in statements made about the ability of military networks to spontaneously become self-aware:

The idea behind the Terminator films – specifically, that a Skynet-style military network becomes self-aware, sees humans as the enemy, and attacks – isn’t too far-fetched, one of the nation’s top military officers said this week. Nor is that kind of autonomy the stuff of the distant future. ‘We’re a decade or so away from that capability,’ said Gen. Paul Selva, vice chairman of the Joint Chiefs of Staff.

This reflects a fundamental fear, and, I believe, a misconception about the capabilities of these technologies. It is exemplified by Jay Tuck’s TED talk, “Artificial Intelligence: it will kill us.” His examples of AI in use today include airline and hotel revenue management, aircraft autopilot, and medical imaging. He also holds up the MQ-9 Reaper’s Argus (aka Gorgon Stare) imaging systems, as well as the X-47B Pegasus, previously discussed, as examples of modern AI and the pinnacle of capability. Among several claims, he states that the X-47B has an optical stealth capability, which is inaccurate:

[X-47B], a descendant of an earlier killer drone with its roots in the late 1990s, is possibly the least stealthy of the competitors, owing to Northrop’s decision to build the drone big, thick and tough. Those qualities help it survive forceful carrier landings, but also make it a big target for enemy radars. Navy Capt. Jamie Engdahl, manager of the drone test program, described it as ‘low-observable relevant,’ a careful choice of words copping to the X-47B’s relative lack of stealth. (Emphasis added).

Such inaccuracies undermine the credibility of these claims. I believe that this is little more than modern fearmongering, playing on ignorance. But Mr. Tuck is not alone. From the forefront of technology, Elon Musk is often held up as an example of commercial success in the field of AI, and he recently addressed the National Governors Association meeting on this topic, specifically on the need for regulation in the commercial sphere.

On the artificial intelligence [AI] front, I have exposure to the most cutting edge AI, and I think people should be really concerned about it. … AI is a rare case, I think we should be proactive in terms of regulation, rather than reactive about it. Because by the time we are reactive about it, it’s too late. … AI is a fundamental risk to human civilization, in a way that car crashes, airplane crashes, faulty drugs or bad food were not. … In space, we get regulated by the FAA. But you know, if you ask the average person, ‘Do you want to get rid of the FAA? Do you want to take a chance on manufacturers not cutting corners on aircraft because profits were down that quarter? Hell no, that sounds terrible.’ Because robots will be able to do everything better than us, and I mean all of us. … We have companies that are racing to build AI, they have to race otherwise they are going to be made uncompetitive. … When the regulators are convinced it is safe then we can go, but otherwise, slow down. [Emphasis added]

Mr. Musk also hinted at American exceptionalism: “America is the distillation of the human spirit of exploration.” Indeed, the link between military technology and commercial applications is an ongoing virtuous cycle. But the kind of regulation that national, subnational, and local governments impose on the commercial sphere does not apply so easily in the field of warfare, where no single authority exists. Any agreement to limit technology must be consensus-based, such as a treaty.

In a recent TEDx talk, Peter Haas describes his work in AI, and some of the challenges that exist within the state of the art of this technology. As illustrated above, when asked to distinguish between a wolf and a dog, the machine classified the Husky in the above photo as a wolf. The humans developing the AI system did not know why this happened, so they asked the AI system to show the regions of the image that were used to make this decision, and the result is depicted on the right side of the image. The system had keyed on the snow in the background, which is a form of bias: the mere fact that snow appears in a photo offers no conclusive proof that any particular animal is a dog or a wolf.
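To make the husky example concrete, here is a minimal toy sketch in Python. It is not the system Mr. Haas describes: the two features, their values, and the one-rule classifier are all made up for illustration. It simply shows how a learner can latch onto the background when every wolf in its training photos happens to stand in snow.

```python
# Toy illustration only: each "photo" is reduced to two hypothetical numbers,
# (how wolf-like the animal looks, how much snow is in the background).
FEATURES = ("animal_shape", "snow_in_background")

# Invented training set: every wolf was photographed in snow, every dog was not.
train = [
    ((0.9, 0.8), "wolf"),
    ((0.6, 0.9), "wolf"),
    ((0.4, 0.7), "wolf"),
    ((0.5, 0.1), "dog"),
    ((0.2, 0.0), "dog"),
    ((0.7, 0.2), "dog"),
]


def fit_stump(samples):
    """Pick the single feature and threshold that best separate the training labels."""
    best = None
    for idx in range(len(FEATURES)):
        values = sorted({x[idx] for x, _ in samples})
        # Candidate thresholds sit halfway between consecutive observed values.
        for lo, hi in zip(values, values[1:]):
            threshold = (lo + hi) / 2
            correct = sum((x[idx] > threshold) == (y == "wolf") for x, y in samples)
            if best is None or correct > best[0]:
                best = (correct, idx, threshold)
    return best[1], best[2]


def predict(stump, x):
    """Call it a wolf if the chosen feature exceeds the learned threshold."""
    idx, threshold = stump
    return "wolf" if x[idx] > threshold else "dog"


stump = fit_stump(train)

# A husky: not very wolf-like in shape, but standing in deep snow.
husky_in_snow = (0.3, 0.9)
print(FEATURES[stump[0]], predict(stump, husky_in_snow))
```

On this invented data the learner chooses the snow feature, because snow separates the training photos perfectly while the animal's shape does not, and so the husky in snow is labeled a wolf. Asking which feature the rule depends on plays the same role as asking the real system to highlight the image regions behind its decision.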

Right now there are people – doctors, judges, accountants – who are getting information from an AI system and treating it like it was information from a trusted colleague. It is this trust that bothers me. Not because of how often AI gets it wrong; AI researchers pride themselves on the accuracy of results. It is how badly it gets it wrong when it makes a mistake that has me worried. These systems do not fail gracefully.

AI systems clearly have drawbacks, but they also have significant advantages, such as in the curation of a shared model of the battlefield.

In a paper for the Royal Institute of International Affairs in London, Mary Cummings of Duke University says that an autonomous system perceives the world through its sensors and reconstructs it to give its computer ‘brain’ a model of the world which it can use to make decisions. The key to effective autonomous systems is ‘the fidelity of the world model and the timeliness of its updates.’ [Emphasis added]

Perhaps AI systems might best be employed in the cyber domain, where they are naturally “at home”? Mr. Haas noted that machines currently struggle with simple tasks, like opening a door. As was covered in this blog, former Deputy Defense Secretary Robert Work noted this same problem, and thus called for man-machine teaming as one of the key areas of pursuit within the Third Offset Strategy.

As the previous blog post illustrates, “the quality of military men is what wins wars and preserves nations.” Let’s remember Paul Van Riper’s performance in Millennium Challenge 2002:

Red, commanded by retired Marine Corps Lieutenant General Paul K. Van Riper, adopted an asymmetric strategy, in particular, using old methods to evade Blue’s sophisticated electronic surveillance network. Van Riper used motorcycle messengers to transmit orders to front-line troops and World-War-II-style light signals to launch airplanes without radio communications. Red received an ultimatum from Blue, essentially a surrender document, demanding a response within 24 hours. Thus warned of Blue’s approach, Red used a fleet of small boats to determine the position of Blue’s fleet by the second day of the exercise. In a preemptive strike, Red launched a massive salvo of cruise missiles that overwhelmed the Blue forces’ electronic sensors and destroyed sixteen warships.

We should learn the lessons of overreliance on technology. AI systems are incredibly fickle, yet they offer incredible capabilities. We should question and inspect the results produced by such systems. They do not exhibit emotions, they are not self-aware, and they do not spontaneously ask questions unless specifically programmed to do so. We should recognize their significant limitations and use them in conjunction with humans, who will retain command decisions for the foreseeable future.